https://flink.apache.org/

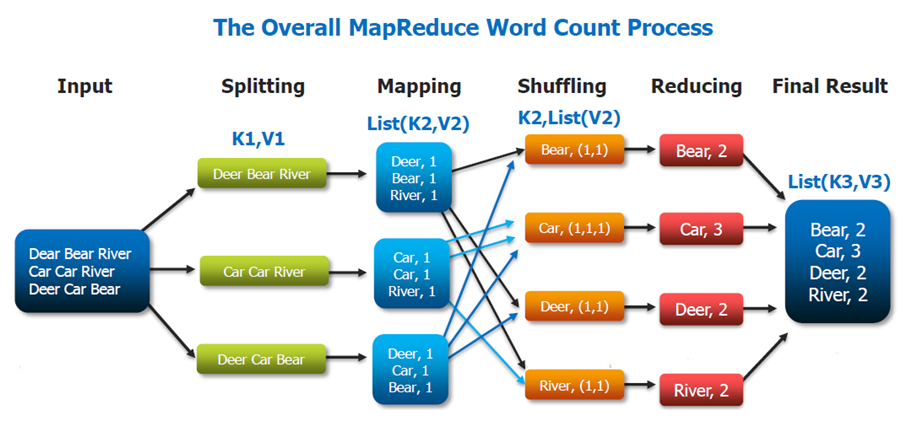

Is Flink a Hadoop Project? Flink is a data processing system and an alternative to Hadoop’s MapReduce component. It comes with its own runtime rather than building on top of MapReduce. As such, it can work completely independently of the Hadoop ecosystem. However, Flink can also access Hadoop’s distributed file system (HDFS) to read and write data, and Hadoop’s next-generation resource manager (YARN) to provision cluster resources. Since most Flink users are using Hadoop HDFS to store their data, Flink already ships the required libraries to access HDFS.

Do I have to install Apache Hadoop to use Flink? No. Flink can run without a Hadoop installation. However, a very common setup is to use Flink to analyze data stored in the Hadoop Distributed File System (HDFS). To make these setups work out of the box, Flink bundles the Hadoop client libraries by default.

Additionally, we provide a special YARN Enabled download of Flink for users with an existing Hadoop YARN cluster. Apache Hadoop YARN is Hadoop’s cluster resource manager that allows use of different execution engines next to each other on a cluster.

An Alternative to Hadoop MapReduce? Apache Flink is considered an alternative to Hadoop MapReduce. Flink supports cyclic data flows, which are missing in MapReduce. Flink offers APIs that are easier to use than the MapReduce APIs. It supports in-memory processing, which is much faster. Flink can also work with file systems other than HDFS. Flink can analyze real-time stream data, and it also supports graph processing and machine learning algorithms. It extends the MapReduce model with new operators such as join, cross, and union. Flink offers lower latency, exactly-once processing guarantees, and higher throughput. Flink is also considered an alternative to Spark and Storm. (To learn more about Spark, see How Apache Spark Helps Rapid Application Development.)

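Flink can run completely independently of Hadoop, without depending on any Hadoop component. However, as big-data infrastructure, the Hadoop ecosystem is something no big-data framework can avoid. Flink can integrate with many Hadoop components, such as YARN, HBase, and HDFS. For example, Flink can integrate with YARN for resource scheduling, read and write HDFS, or use HDFS for checkpoints.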

# 1. Intro

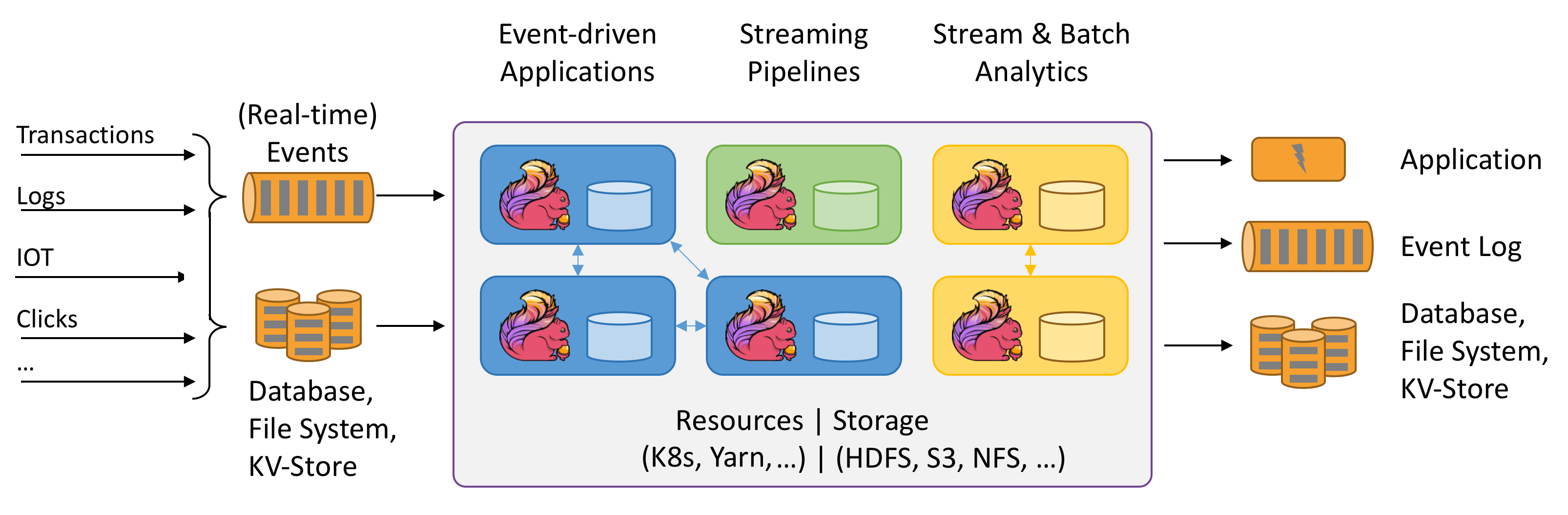

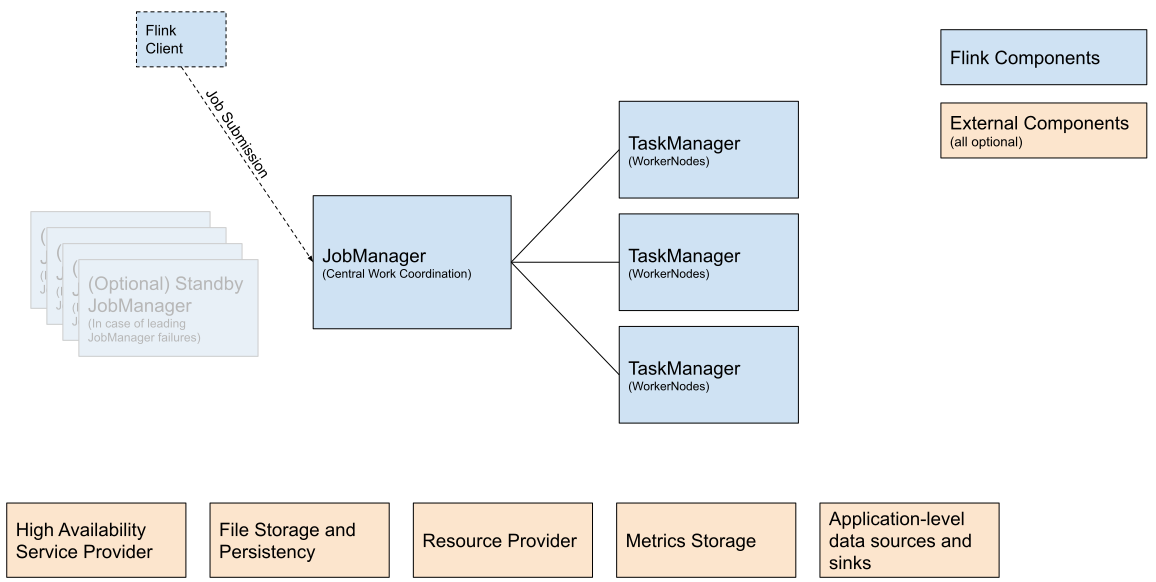

# 1.1 Architecture

Flink is a distributed system and requires effective allocation and management of compute resources in order to execute streaming applications. It integrates with all common cluster resource managers such as Hadoop YARN and Kubernetes, but can also be set up to run as a standalone cluster or even as a library.

The Client is not part of the runtime and program execution, but is used to prepare and send a dataflow to the JobManager. After that, the client can disconnect (detached mode), or stay connected to receive progress reports (attached mode). The client runs either as part of the Java/Scala program that triggers the execution, or in the command line process ./bin/flink run ....

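A Flink cluster consists of two main components, the JobManager (JM) and the TaskManager (TM). Each JM/TM runs in its own JVM process. The JM acts as the master, the management node of the cluster; the TM acts as a worker, a working node of the cluster. Each TM holds at least 1 slot. A slot is the smallest unit of resource allocation when Flink executes a Job, and the actual Tasks run inside slots.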

- Flink Memory Model

Set up Flink’s Process Memory: Total Process Memory (the total memory of a Flink JVM process) = JVM-specific memory needed to run the Flink process itself (here, the JobManager) [= JVM Metaspace + JVM Overhead] + Total Flink Memory (consumed by the Flink application) [= JVM Heap + Off-Heap Memory (direct/native memory)]

Set up TaskManager Memory: the TaskManager memory components have a similar but more sophisticated structure compared to the memory model of the JobManager process.

Total Process Memory (configured via e.g. taskmanager.memory.process.size: 16G) = JVM Specific Memory (needed to run the TaskManager process itself) + Total Flink Memory (consumed by the Flink application)

- JVM Specific Memory = JVM Metaspace (taskmanager.memory.jvm-metaspace.size: 256M) + JVM Overhead (taskmanager.memory.jvm-overhead.fraction, taskmanager.memory.jvm-overhead.max)
- JVM Overhead = 0.1 * Total Process Memory = 1.6G, which exceeds the 1G maximum, so it is capped at 1G
- Total Flink Memory = JVM Heap (= Framework Heap (taskmanager.memory.framework.heap.size) + Task Heap) + Off-Heap Memory
- Total Flink Memory = Total Process Memory - JVM Metaspace - JVM Overhead = 16G - 256M - 1G = 14.75G
- Off-Heap Memory = Managed Memory (taskmanager.memory.managed.fraction, taskmanager.memory.managed.size) + Direct Memory

The following workloads use managed memory:

- a) Streaming jobs can use it for the RocksDB state backend.
- b) Both streaming and batch jobs can use it for sorting, hash tables, and caching of intermediate results.
- c) Both streaming and batch jobs can use it for executing User Defined Functions in Python processes.

Continuing the calculation:

- Direct Memory = Framework Off-heap (taskmanager.memory.framework.off-heap.size) + Task Off-heap (taskmanager.memory.task.off-heap.size) + Network (taskmanager.memory.network.fraction, taskmanager.memory.network.max)
- Managed Memory = 0.4 * Total Flink Memory = 0.4 * 14.75G = 5.9G
- Network Memory = 0.1 * Total Flink Memory = 0.1 * 14.75G = 1.475G, which exceeds the 1G maximum, so it is capped at 1G
- Direct Memory = 128M + 0 bytes + 1G = 1.125G
- Off-Heap Memory = 5.9G + 1.125G = 7.025G
- JVM Heap (Framework Heap + Task Heap) = Total Flink Memory - Off-Heap Memory = 14.75G - 7.025G = 7.725G (about 7.73G)
- Task Heap = JVM Heap - Framework Heap = 7.725G - 128M = 7.6G
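The arithmetic above can be reproduced with a small plain-Java sketch. It does not use any Flink API; the class name `TaskManagerMemorySketch` and its method names are made up for illustration, the constants are the values assumed in the worked example (including the 1G caps), and the minimum bounds that Flink also applies are ignored. All sizes are in megabytes.

```java
/** Sketch of the TaskManager memory arithmetic, in megabytes (1G = 1024M). */
final class TaskManagerMemorySketch {
    // Values assumed by the worked example; minimum bounds are ignored here.
    static final double JVM_METASPACE = 256;         // taskmanager.memory.jvm-metaspace.size
    static final double JVM_OVERHEAD_FRACTION = 0.1; // taskmanager.memory.jvm-overhead.fraction
    static final double JVM_OVERHEAD_MAX = 1024;     // taskmanager.memory.jvm-overhead.max
    static final double MANAGED_FRACTION = 0.4;      // taskmanager.memory.managed.fraction
    static final double NETWORK_FRACTION = 0.1;      // taskmanager.memory.network.fraction
    static final double NETWORK_MAX = 1024;          // taskmanager.memory.network.max
    static final double FRAMEWORK_HEAP = 128;        // taskmanager.memory.framework.heap.size
    static final double FRAMEWORK_OFF_HEAP = 128;    // taskmanager.memory.framework.off-heap.size
    static final double TASK_OFF_HEAP = 0;           // taskmanager.memory.task.off-heap.size

    // JVM Overhead is a fraction of the total process memory, capped at its maximum.
    static double jvmOverhead(double totalProcess) {
        return Math.min(JVM_OVERHEAD_FRACTION * totalProcess, JVM_OVERHEAD_MAX);
    }

    // Total Flink Memory = Total Process Memory - JVM Metaspace - JVM Overhead.
    static double totalFlinkMemory(double totalProcess) {
        return totalProcess - JVM_METASPACE - jvmOverhead(totalProcess);
    }

    static double managedMemory(double totalProcess) {
        return MANAGED_FRACTION * totalFlinkMemory(totalProcess);
    }

    // Network memory is a fraction of Total Flink Memory, capped at its maximum.
    static double networkMemory(double totalProcess) {
        return Math.min(NETWORK_FRACTION * totalFlinkMemory(totalProcess), NETWORK_MAX);
    }

    static double directMemory(double totalProcess) {
        return FRAMEWORK_OFF_HEAP + TASK_OFF_HEAP + networkMemory(totalProcess);
    }

    // JVM Heap is what remains after managed and direct memory are subtracted.
    static double jvmHeap(double totalProcess) {
        return totalFlinkMemory(totalProcess)
                - managedMemory(totalProcess)
                - directMemory(totalProcess);
    }

    static double taskHeap(double totalProcess) {
        return jvmHeap(totalProcess) - FRAMEWORK_HEAP;
    }

    public static void main(String[] args) {
        double totalProcess = 16 * 1024; // taskmanager.memory.process.size: 16G
        System.out.println("Total Flink Memory (MB): " + totalFlinkMemory(totalProcess));
        System.out.println("Managed Memory (MB):     " + managedMemory(totalProcess));
        System.out.println("Direct Memory (MB):      " + directMemory(totalProcess));
        System.out.println("JVM Heap (MB):           " + jvmHeap(totalProcess));
        System.out.println("Task Heap (MB):          " + taskHeap(totalProcess));
    }
}
```

Changing `totalProcess` or one of the constants shows how the caps on JVM Overhead and Network memory shift the remaining heap.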

Note: If you want to guarantee that a certain amount of JVM Heap is available for your user code, you can set the task heap memory explicitly (taskmanager.memory.task.heap.size). It will be added to the JVM Heap size and will be dedicated to Flink’s operators running the user code. Alternatively, you can explicitly specify both task heap and managed memory, which gives more control over the JVM Heap available to Flink’s tasks and over its managed memory.

# 1.1.1 JobManager

The JobManager has a number of responsibilities related to coordinating the distributed execution of Flink Applications: it decides when to schedule the next task (or set of tasks), reacts to finished tasks or execution failures, coordinates checkpoints, and coordinates recovery on failures, among others. This process consists of three different components:

# ResourceManager

The ResourceManager is responsible for resource de-/allocation and provisioning in a Flink cluster. It manages task slots, which are the unit of resource scheduling in a Flink cluster (see TaskManagers). Flink implements multiple ResourceManagers for different environments and resource providers such as YARN, Kubernetes and standalone deployments. In a standalone setup, the ResourceManager can only distribute the slots of available TaskManagers and cannot start new TaskManagers on its own.

# Dispatcher

The Dispatcher provides a REST interface to submit Flink applications for execution and starts a new JobMaster for each submitted job. It also runs the Flink WebUI to provide information about job executions.

# JobMaster

A JobMaster is responsible for managing the execution of a single JobGraph. Multiple jobs can run simultaneously in a Flink cluster, each having its own JobMaster.

# 1.1.2 TaskManagers

# Flink Job

A Flink Job is the runtime representation of a logical graph (also often called dataflow graph) that is created and submitted by calling execute() in a Flink Application.

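A Job represents a unit of work that can be submitted independently to Flink for execution. When we submit work to the JobManager, the Job is the unit of submission. A single codebase can contain multiple Jobs (each Job corresponds to the main function of one class).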

Example:

# JobGraph / Logical Graph

A logical graph is a directed graph where the nodes are Operators and the edges define input/output-relationships of the operators and correspond to data streams or data sets. A logical graph is created by submitting jobs from a Flink Application. Logical graphs are also often referred to as dataflow graphs.

# ExecutionGraph/Physical Graph

A physical graph is the result of translating a Logical Graph for execution in a distributed runtime. The nodes are Tasks and the edges indicate input/output-relationships or partitions of data streams or data sets.

# TM: Task Manager

- A TaskManager (also called a worker) is a JVM process that executes the tasks of a dataflow and buffers and exchanges the data streams. There must always be at least one TaskManager. Each TaskManager may execute one or more subtasks in separate threads. To control how many tasks a TaskManager accepts, it has so-called task slots (at least one).

# TS: Task Slot

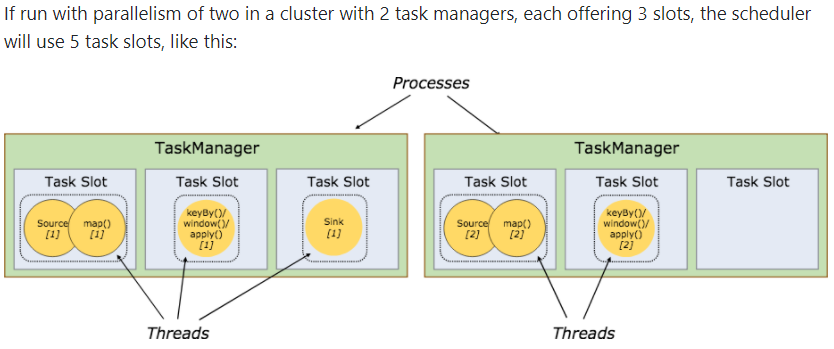

- Each task slot represents a fixed subset of the TaskManager's resources. (No CPU isolation happens between the slots; only the managed memory is divided.) The task slot is the smallest unit of resource scheduling in a TaskManager. The number of task slots in a TaskManager determines how many tasks it can process concurrently. Note that multiple operators may execute in a task slot.

One slot is not one thread; a slot can hold multiple threads. A Task can have multiple parallel instances, which are called sub-tasks. Each sub-task is run in a separate thread. Multiple sub-tasks from different tasks can come together and share a slot. This group of sub-tasks is called a slot-sharing group. Note that two sub-tasks of the same task (parallel instances of the same task) cannot share a slot. https://stackoverflow.com/questions/61791811/how-to-understand-slot-and-task-in-apache-flink

Each task slot represents a fixed subset of resources of the TaskManager. A TaskManager with three slots, for example, will dedicate 1/3 of its managed memory to each slot. Slotting the resources means that a subtask will not compete with subtasks from other jobs for managed memory, but instead has a certain amount of reserved managed memory. Note that no CPU isolation happens here; currently slots only separate the managed memory of tasks.

By adjusting the number of task slots, users can define how subtasks are isolated from each other. Having one slot per TaskManager means that each task group runs in a separate JVM (which can be started in a separate container, for example). Having multiple slots means more subtasks share the same JVM. Tasks in the same JVM share TCP connections (via multiplexing) and heartbeat messages. They may also share data sets and data structures, thus reducing the per-task overhead.

# Task

- Node of a Physical Graph.

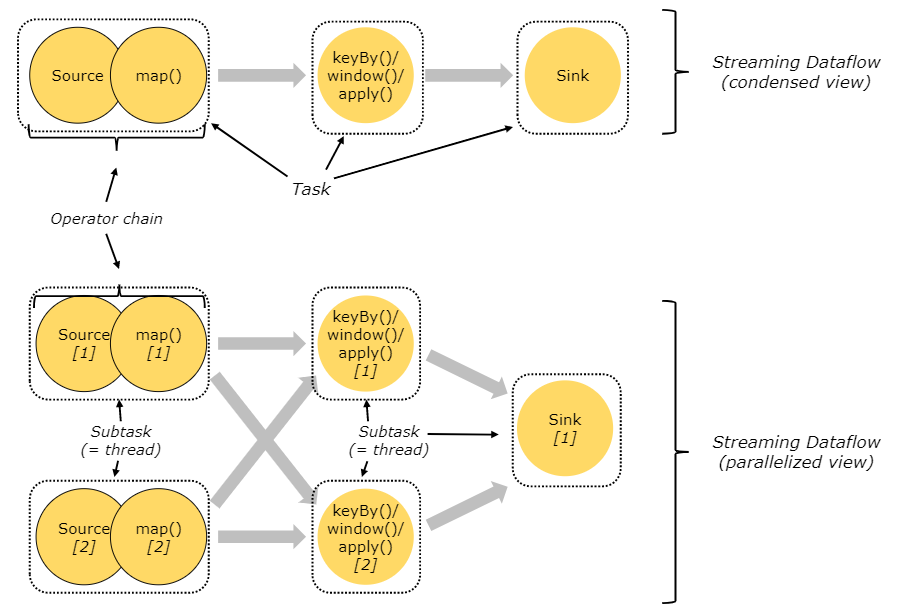

A task is the basic unit of work, which is executed by Flink’s runtime. Tasks encapsulate exactly one parallel instance of an Operator or Operator Chain. For distributed execution, Flink chains operator subtasks together into tasks.

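A Task is a logical concept: one Operator corresponds to one Task (a new Operator produced by chaining multiple Operators counts as one Operator). At runtime, a Task is split into multiple Subtasks according to its parallelism. A Subtask is the basic unit of execution/scheduling, and each Subtask needs one thread to execute.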

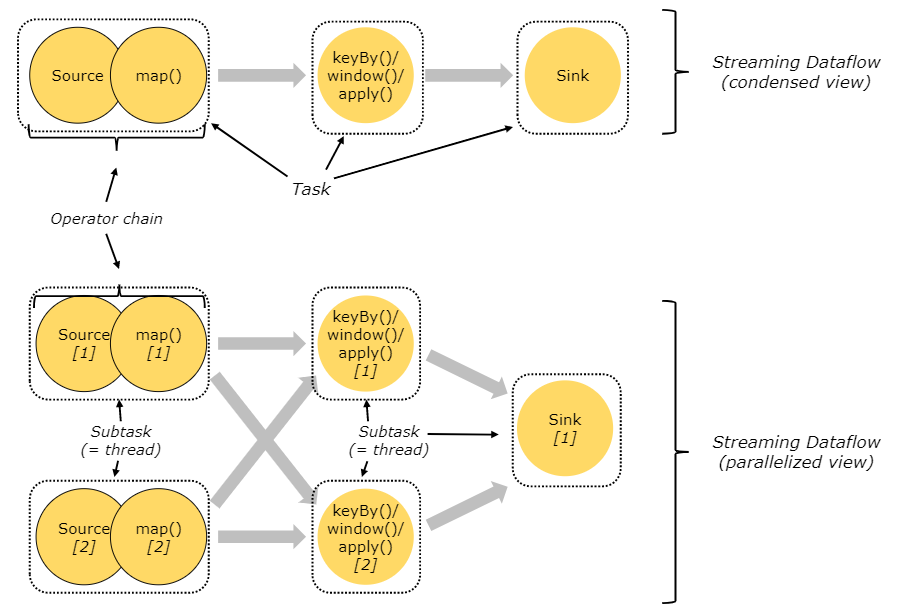

A Sub-Task is a Task responsible for processing a partition of the data stream. The term “Sub-Task” emphasizes that there are multiple parallel Tasks for the same Operator or Operator Chain. Each subtask is executed by one thread.

A task is an abstraction representing a chain of operators that could be executed in a single thread. Something like a keyBy (which causes a network shuffle to partition the stream by some key) or a change in the parallelism of the pipeline will break the chaining and force operators into separate tasks. In the diagram above, the application has three tasks.

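Example: for source.map().filter().sink() with parallelism = 1, you will see only one subtask running in one slot, with all operations chained inside it. To break the chain, use startNewChain or disableChaining, or apply a partitioning strategy so that the upstream and downstream operators have different parallelism.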

A subtask is one parallel slice of a task. This is the schedulable, runnable unit of execution. In the diagram above, the application is to be run with a parallelism of two for the source/map and keyBy/Window/apply tasks, and a parallelism of one for the sink, resulting in a total of 5 subtasks.
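The subtask count in that example is simply the sum of each task's parallelism. The plain-Java sketch below models this; it does not use the Flink API, and the class name `SubtaskCountSketch` and its helper `diagramExample` are hypothetical names introduced only for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Sketch: count tasks and subtasks for a pipeline described as task name -> parallelism. */
final class SubtaskCountSketch {

    // The three tasks described for the diagram: two with parallelism 2, the sink with 1.
    static Map<String, Integer> diagramExample() {
        Map<String, Integer> tasks = new LinkedHashMap<>();
        tasks.put("source/map", 2);
        tasks.put("keyBy/window/apply", 2);
        tasks.put("sink", 1);
        return tasks;
    }

    // Each task contributes one subtask per parallel instance.
    static int totalSubtasks(Map<String, Integer> taskParallelism) {
        int total = 0;
        for (int parallelism : taskParallelism.values()) {
            total += parallelism;
        }
        return total;
    }

    public static void main(String[] args) {
        Map<String, Integer> tasks = diagramExample();
        System.out.println("tasks = " + tasks.size());
        System.out.println("subtasks = " + totalSubtasks(tasks));
    }
}
```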

A job is a running instance of an application. Clients submit jobs to the JobManager, which slices them into subtasks and schedules those subtasks for execution by the TaskManagers.

Update:

The community decided to re-align the definitions of task and sub-task to match how these terms are used in the code -- which means that task and sub-task now mean the same thing: exactly one parallel instance of an operator or operator chain. -- https://stackoverflow.com/questions/53610342/difference-between-job-task-and-subtask-in-flink

Note: TaskSlot = Thread only (!) if slot sharing is disabled. Slot sharing is an optimization that is on by default, and in most cases you would want to keep it that way. It is more precise to say that an Operator Chain = a Thread. Chaining operators together into tasks is a useful optimization: it reduces the overhead of thread-to-thread handover and buffering, and increases overall throughput while decreasing latency.

By default, Flink allows subtasks to share slots even if they are subtasks of different tasks, so long as they are from the same job. The result is that one slot may hold an entire pipeline of the job. Allowing this slot sharing has two main benefits:

# parallelism

A Flink cluster needs exactly as many task slots as the highest parallelism used in the job. There is no need to calculate how many tasks (with varying parallelism) a program contains in total. The number of slots should not exceed the number of CPU cores.

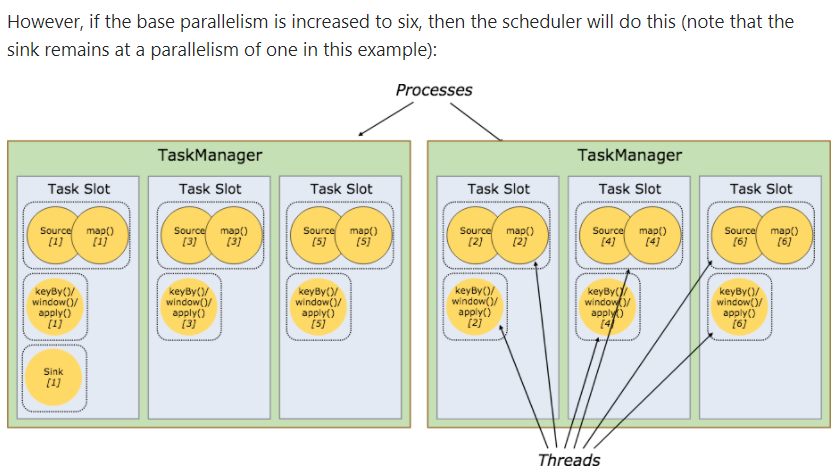

It is easier to get better resource utilization. Without slot sharing, the non-intensive source/map() subtasks would block as many resources as the resource intensive window subtasks. With slot sharing, increasing the base parallelism in our example from two to six yields full utilization of the slotted resources, while making sure that the heavy subtasks are fairly distributed among the TaskManagers.
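The two benefits can be made concrete with a small calculation. The sketch below is plain Java, not Flink code, and assumes that all tasks belong to a single job in one slot sharing group; the class name `SlotDemandSketch` and its methods are hypothetical. With slot sharing the job needs as many slots as its highest parallelism; without it, every subtask occupies its own slot.

```java
/** Sketch: slots required by one job, given the parallelism of each of its tasks. */
final class SlotDemandSketch {

    // With slot sharing, one slot can hold one subtask of every task,
    // so the demand equals the highest parallelism in the job.
    static int withSlotSharing(int... taskParallelism) {
        int max = 0;
        for (int p : taskParallelism) {
            max = Math.max(max, p);
        }
        return max;
    }

    // Without slot sharing, each subtask needs a slot of its own.
    static int withoutSlotSharing(int... taskParallelism) {
        int sum = 0;
        for (int p : taskParallelism) {
            sum += p;
        }
        return sum;
    }

    public static void main(String[] args) {
        // source/map = 2, keyBy/window/apply = 2, sink = 1
        System.out.println("base parallelism 2, sharing:    " + withSlotSharing(2, 2, 1));
        System.out.println("base parallelism 2, no sharing: " + withoutSlotSharing(2, 2, 1));
        // Base parallelism raised to six; the sink stays at one.
        System.out.println("base parallelism 6, sharing:    " + withSlotSharing(6, 6, 1));
    }
}
```

With two TaskManagers offering three slots each (six slots in total), the base parallelism of six fits only because slots are shared.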

Parallelism can be set at four levels: Operator Level / Execution Environment Level / Client Level / System Level

- Operator Level

      final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

      DataStream<String> text = [...];
      // note: keyBy(int) and timeWindow(...) are deprecated in recent Flink releases
      DataStream<Tuple2<String, Integer>> wordCounts = text
          .flatMap(new LineSplitter())
          .keyBy(0)
          .timeWindow(Time.seconds(5))
          .sum(1).setParallelism(5); // parallelism of 5 for this operator only

      wordCounts.print();

      env.execute("Word Count Example");

  Operators, data sources, and data sinks can all call setParallelism() to set their parallelism.

- Execution Environment Level

      final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
      env.setParallelism(3); // default parallelism for everything executed in this environment

      DataStream<String> text = [...];
      DataStream<Tuple2<String, Integer>> wordCounts = [...];
      wordCounts.print();

      env.execute("Word Count Example");

  In the ExecutionEnvironment, setParallelism sets the default parallelism for operators, data sources, and data sinks. If an operator, data source, or data sink sets its own parallelism, that value overrides the parallelism set on the ExecutionEnvironment.

- Client Level

      ./bin/flink run -p 10 ../examples/*WordCount-java*.jar

  or, programmatically:

      try {
          PackagedProgram program = new PackagedProgram(file, args);
          InetSocketAddress jobManagerAddress = RemoteExecutor.getInetFromHostport("localhost:6123");
          Configuration config = new Configuration();

          Client client = new Client(jobManagerAddress, config, program.getUserCodeClassLoader());

          // set the parallelism to 10 here
          client.run(program, 10, true);
      } catch (ProgramInvocationException e) {
          e.printStackTrace();
      }

  With the CLI client, specify the parallelism with -p on the command line; when calling from Java/Scala, pass the parallelism as an argument to Client.run.

- System Level

      # The parallelism used for programs that did not specify and other parallelism.
      parallelism.default: 1

  In flink-conf.yaml, the parallelism.default option sets the system-wide default parallelism for all execution environments.
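How the four levels interact can be sketched as a simple fallback chain. This is plain Java, not Flink's actual resolution code; the class `ParallelismResolutionSketch` and its `resolve` method are hypothetical. The notes above only state that an operator-level setting overrides the environment-level one; the remaining order (environment over client, client over system default) is assumed here from the Flink documentation on parallel execution.

```java
import java.util.OptionalInt;

/** Sketch: pick the effective parallelism from the most specific level that is set. */
final class ParallelismResolutionSketch {

    // Assumed precedence: operator > execution environment > client > system default.
    static int resolve(OptionalInt operatorLevel,
                       OptionalInt environmentLevel,
                       OptionalInt clientLevel,
                       int systemDefault) {
        if (operatorLevel.isPresent()) {
            return operatorLevel.getAsInt();      // e.g. .sum(1).setParallelism(5)
        }
        if (environmentLevel.isPresent()) {
            return environmentLevel.getAsInt();   // e.g. env.setParallelism(3)
        }
        if (clientLevel.isPresent()) {
            return clientLevel.getAsInt();        // e.g. ./bin/flink run -p 10
        }
        return systemDefault;                     // parallelism.default
    }

    public static void main(String[] args) {
        OptionalInt unset = OptionalInt.empty();
        System.out.println(resolve(OptionalInt.of(5), OptionalInt.of(3), OptionalInt.of(10), 1));
        System.out.println(resolve(unset, OptionalInt.of(3), OptionalInt.of(10), 1));
        System.out.println(resolve(unset, unset, OptionalInt.of(10), 1));
        System.out.println(resolve(unset, unset, unset, 1));
    }
}
```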

example:

If run with parallelism of two in a cluster with 2 task managers, each offering 3 slots, the scheduler will use 5 task slots, like this:

However, if the base parallelism is increased to six, then the scheduler will do this (note that the sink remains at a parallelism of one in this example):

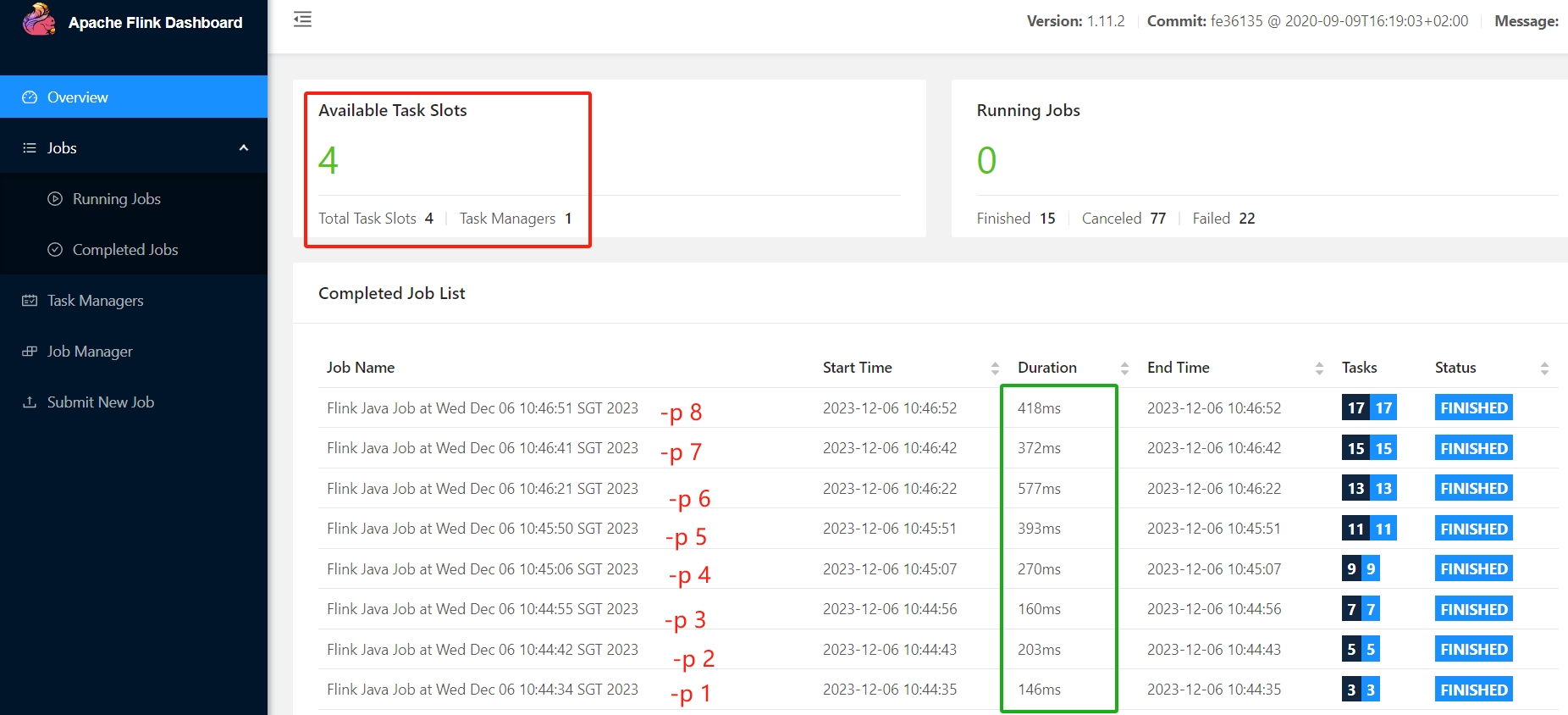

Measured results: 1 TaskManager with 4 slots, running WordCount with p=2/3/4/5:

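This test is interesting: the run was fastest at p=1, and p=2 was actually slower (probably because of the added overhead of splitting and merging the work). Going from p=2 to p=3, the drop in run time was as expected, but p=4 took longer (I re-ran it a few times afterwards, and this timing is unstable). Also, p>4 surprisingly succeeds too, though it takes longer. I found this explanation: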

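In Flink, slots and parallelism affect each other. If a job's parallelism is greater than the number of slots, the job cannot run fully in parallel. In that case, Flink uses an algorithm to assign the job's subtasks to different slots, achieving partially parallel execution. Conversely, if a job's parallelism is lower than the number of slots, some slots may sit idle, wasting resources.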

In other words, with p=5 only 4 of the 5 parallel subtasks actually run in parallel; the remaining one runs once a slot becomes free.
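The explanation above can be expressed as a tiny model. This plain-Java sketch only restates the interpretation quoted in these notes (subtasks beyond the slot count wait for a free slot); it is not Flink's scheduler, and the class `SlotWaveSketch` and its methods are hypothetical names.

```java
/** Sketch: how many subtasks of one task run at once when slots are limited. */
final class SlotWaveSketch {

    // At most one subtask of the task per slot can run at the same time.
    static int runningAtOnce(int parallelism, int slots) {
        return Math.min(parallelism, slots);
    }

    // The remaining subtasks wait until a slot is freed.
    static int waitingForSlot(int parallelism, int slots) {
        return Math.max(0, parallelism - slots);
    }

    public static void main(String[] args) {
        int slots = 4; // 1 TaskManager with 4 slots, as in the test above
        for (int p = 1; p <= 5; p++) {
            System.out.println("p=" + p
                    + " running=" + runningAtOnce(p, slots)
                    + " waiting=" + waitingForSlot(p, slots));
        }
    }
}
```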

Logs:

p=1

2023-12-06 10:44:35,152 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Receive slot request 1040904f312d051825b9205caf4c87de for job cab7d3dd7306786f754237f2771c0a62 from resource manager with leader id 00000000000000000000000000000000.

2023-12-06 10:44:35,152 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Allocated slot for 1040904f312d051825b9205caf4c87de.

..............................

2023-12-06 10:44:35,206 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 1040904f312d051825b9205caf4c87de.

2023-12-06 10:44:35,207 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (1/1).

2023-12-06 10:44:35,211 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (1/1) (4e89f2aef62b7d61bbafc9fff95cd9aa) switched from CREATED to DEPLOYING.

p=2

2023-12-06 10:44:43,328 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Receive slot request 5bd806ad550923769e95d4d86a4744e5 for job fd43c3b3c49c0afe0be4c6706b904cc3 from resource manager with leader id 00000000000000000000000000000000.

2023-12-06 10:44:43,328 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Allocated slot for 5bd806ad550923769e95d4d86a4744e5.

...................................

2023-12-06 10:44:43,365 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 5bd806ad550923769e95d4d86a4744e5.

2023-12-06 10:44:43,366 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (1/2).

2023-12-06 10:44:43,370 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot bae54b7f4b9437bf81acafd292978241.

2023-12-06 10:44:43,370 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot bae54b7f4b9437bf81acafd292978241.

2023-12-06 10:44:43,371 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (2/2).

2023-12-06 10:44:43,371 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (1/2) (1acde70402c0fe9c43e309b6f2f4b4cd) switched from CREATED to DEPLOYING.

2023-12-06 10:44:43,373 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (2/2) (c347c9c47c4b81fb954311a874b7d79d) switched from CREATED to DEPLOYING.

p=3

2023-12-06 10:44:56,191 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Receive slot request 413728b6858e88e8be8ddeb986d865c1 for job 1851bfb11c5dc283209bbda14a5b7a91 from resource manager with leader id 00000000000000000000000000000000.

2023-12-06 10:44:56,191 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Allocated slot for 413728b6858e88e8be8ddeb986d865c1.

.......................................

2023-12-06 10:44:56,252 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 413728b6858e88e8be8ddeb986d865c1.

2023-12-06 10:44:56,252 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (1/3).

2023-12-06 10:44:56,253 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 46bc54661e712a95825369cac5cc6af6.

2023-12-06 10:44:56,253 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 46bc54661e712a95825369cac5cc6af6.

2023-12-06 10:44:56,254 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot c7a90293611545315fb34eefd29ce7d3.

2023-12-06 10:44:56,254 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (1/3) (ced34a25137bf391bafb0044262f04ae) switched from CREATED to DEPLOYING.

2023-12-06 10:44:56,254 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (1/3) (ced34a25137bf391bafb0044262f04ae) [DEPLOYING].

2023-12-06 10:44:56,254 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 46bc54661e712a95825369cac5cc6af6.

2023-12-06 10:44:56,254 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (1/3) (ced34a25137bf391bafb0044262f04ae) [DEPLOYING].

2023-12-06 10:44:56,254 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (2/3).

2023-12-06 10:44:56,254 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (1/3) (ced34a25137bf391bafb0044262f04ae) switched from DEPLOYING to RUNNING.

2023-12-06 10:44:56,255 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot c7a90293611545315fb34eefd29ce7d3.

2023-12-06 10:44:56,255 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (3/3).

2023-12-06 10:44:56,256 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (2/3) (ae17aca0dbc22c6cc0b3ef75ec03e097) switched from CREATED to DEPLOYING.

2023-12-06 10:44:56,256 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (2/3) (ae17aca0dbc22c6cc0b3ef75ec03e097) [DEPLOYING].

2023-12-06 10:44:56,256 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (2/3) (ae17aca0dbc22c6cc0b3ef75ec03e097) [DEPLOYING].

2023-12-06 10:44:56,256 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (2/3) (ae17aca0dbc22c6cc0b3ef75ec03e097) switched from DEPLOYING to RUNNING.

2023-12-06 10:44:56,256 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (3/3) (a25fcb1abd5fa545eb5f055335661f2a) switched from CREATED to DEPLOYING.

2023-12-06 10:44:56,256 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (3/3) (a25fcb1abd5fa545eb5f055335661f2a) [DEPLOYING].

2023-12-06 10:44:56,257 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (3/3) (a25fcb1abd5fa545eb5f055335661f2a) [DEPLOYING].

2023-12-06 10:44:56,257 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (3/3) (a25fcb1abd5fa545eb5f055335661f2a) switched from DEPLOYING to RUNNING.

2023-12-06 10:44:56,297 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (2/3) (ae17aca0dbc22c6cc0b3ef75ec03e097) switched from RUNNING to FINISHED.

2023-12-06 10:44:56,297 INFO org.apache.flink.runtime.taskmanager.Task [] - Freeing task resources for Reduce (SUM(1), at main(WordCount.java:87) (2/3) (ae17aca0dbc22c6cc0b3ef75ec03e097).

2023-12-06 10:44:56,298 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Un-registering task and sending final execution state FINISHED to JobManager for task Reduce (SUM(1), at main(WordCount.java:87) (2/3) ae17aca0dbc22c6cc0b3ef75ec03e097.

2023-12-06 10:44:56,299 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (1/3) (ced34a25137bf391bafb0044262f04ae) switched from RUNNING to FINISHED.

2023-12-06 10:44:56,299 INFO org.apache.flink.runtime.taskmanager.Task [] - Freeing task resources for Reduce (SUM(1), at main(WordCount.java:87) (1/3) (ced34a25137bf391bafb0044262f04ae).

2023-12-06 10:44:56,299 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Un-registering task and sending final execution state FINISHED to JobManager for task Reduce (SUM(1), at main(WordCount.java:87) (1/3) ced34a25137bf391bafb0044262f04ae.

p=4

2023-12-06 10:45:07,403 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Receive slot request 02585101726cd4ee416a88da77fc4618 for job bc618f19d10f2973cbdb4e74b1def0f0 from resource manager with leader id 00000000000000000000000000000000.

2023-12-06 10:45:07,404 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Allocated slot for 02585101726cd4ee416a88da77fc4618.

................................

10:45:07,449 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 02585101726cd4ee416a88da77fc4618.

2023-12-06 10:45:07,449 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (1/4).

2023-12-06 10:45:07,452 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot eb4fbc442d7c400158fe9a9ebfa2691c.

2023-12-06 10:45:07,452 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot eb4fbc442d7c400158fe9a9ebfa2691c.

2023-12-06 10:45:07,452 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot fa67f55e86b4b171eb32e58df1ab73ac.

2023-12-06 10:45:07,452 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot eb4fbc442d7c400158fe9a9ebfa2691c.

2023-12-06 10:45:07,452 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot fa67f55e86b4b171eb32e58df1ab73ac.

2023-12-06 10:45:07,452 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 4fb95f5d1a690c5954e6a0f4d4e96828.

2023-12-06 10:45:07,453 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot eb4fbc442d7c400158fe9a9ebfa2691c.

2023-12-06 10:45:07,453 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (2/4).

2023-12-06 10:45:07,453 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (1/4) (a36fef48a5c8328355d9630b67947b14) switched from CREATED to DEPLOYING.

2023-12-06 10:45:07,465 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot fa67f55e86b4b171eb32e58df1ab73ac.

2023-12-06 10:45:07,466 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (3/4).

2023-12-06 10:45:07,466 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (2/4) (3d4d2bfeada831e87d50771c71809e63) switched from CREATED to DEPLOYING.

2023-12-06 10:45:07,468 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 4fb95f5d1a690c5954e6a0f4d4e96828.

2023-12-06 10:45:07,468 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (4/4).

2023-12-06 10:45:07,468 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (3/4) (8c8d42092ff829ac4aef87f9371116c4) switched from CREATED to DEPLOYING.

2023-12-06 10:45:07,470 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (1/4) (a36fef48a5c8328355d9630b67947b14) [DEPLOYING].

2023-12-06 10:45:07,470 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (1/4) (a36fef48a5c8328355d9630b67947b14) [DEPLOYING].

2023-12-06 10:45:07,470 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (1/4) (a36fef48a5c8328355d9630b67947b14) switched from DEPLOYING to RUNNING.

2023-12-06 10:45:07,470 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (3/4) (8c8d42092ff829ac4aef87f9371116c4) [DEPLOYING].

2023-12-06 10:45:07,470 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (3/4) (8c8d42092ff829ac4aef87f9371116c4) [DEPLOYING].

2023-12-06 10:45:07,470 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (3/4) (8c8d42092ff829ac4aef87f9371116c4) switched from DEPLOYING to RUNNING.

2023-12-06 10:45:07,470 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (4/4) (def950d6feb829be9cd06a581c6f3089) switched from CREATED to DEPLOYING.

2023-12-06 10:45:07,522 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (2/4) (3d4d2bfeada831e87d50771c71809e63) [DEPLOYING].

2023-12-06 10:45:07,522 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (2/4) (3d4d2bfeada831e87d50771c71809e63) [DEPLOYING].

2023-12-06 10:45:07,522 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (2/4) (3d4d2bfeada831e87d50771c71809e63) switched from DEPLOYING to RUNNING.

2023-12-06 10:45:07,534 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (4/4) (def950d6feb829be9cd06a581c6f3089) [DEPLOYING].

2023-12-06 10:45:07,535 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (4/4) (def950d6feb829be9cd06a581c6f3089) [DEPLOYING].

2023-12-06 10:45:07,535 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (4/4) (def950d6feb829be9cd06a581c6f3089) switched from DEPLOYING to RUNNING.

p=5

2023-12-06 10:45:51,425 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Receive slot request 36baecc30276e70935cea5246ce9738d for job 1fd73f83a716da9f0b3aa769687287e2 from resource manager with leader id 00000000000000000000000000000000.

2023-12-06 10:45:51,425 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Allocated slot for 36baecc30276e70935cea5246ce9738d.

..............................................

2023-12-06 10:45:51,463 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Offer reserved slots to the leader of job 1fd73f83a716da9f0b3aa769687287e2.

2023-12-06 10:45:51,463 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 36baecc30276e70935cea5246ce9738d.

2023-12-06 10:45:51,464 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (1/5).

2023-12-06 10:45:51,475 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot f2b43e2529e1649dbdd931214c9f94ea.

2023-12-06 10:45:51,475 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot f2b43e2529e1649dbdd931214c9f94ea.

2023-12-06 10:45:51,475 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 8158510413de83b2cf98f1b610fbc85d.

2023-12-06 10:45:51,475 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot f2b43e2529e1649dbdd931214c9f94ea.

2023-12-06 10:45:51,475 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 8158510413de83b2cf98f1b610fbc85d.

2023-12-06 10:45:51,475 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot a305dcf6190e4f1ce377a8dd4bff7461.

2023-12-06 10:45:51,475 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot f2b43e2529e1649dbdd931214c9f94ea.

2023-12-06 10:45:51,475 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (2/5).

2023-12-06 10:45:51,489 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (1/5) (b42996ba5c2dd049f48bdf145a958284) switched from CREATED to DEPLOYING.

2023-12-06 10:45:51,490 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 8158510413de83b2cf98f1b610fbc85d.

2023-12-06 10:45:51,491 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (3/5).

2023-12-06 10:45:51,491 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (2/5) (d8864a3c570049ce4eca5a353564e6df) switched from CREATED to DEPLOYING.

2023-12-06 10:45:51,492 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot a305dcf6190e4f1ce377a8dd4bff7461.

2023-12-06 10:45:51,492 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (4/5).

2023-12-06 10:45:51,492 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (3/5) (c5916f065223d42e912caaedfacf84c8) switched from CREATED to DEPLOYING.

2023-12-06 10:45:51,494 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (4/5) (130f7afca9aef9f118f3a8bf2ef0faf0) switched from CREATED to DEPLOYING.

2023-12-06 10:45:51,494 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (1/5) (b42996ba5c2dd049f48bdf145a958284) [DEPLOYING].

2023-12-06 10:45:51,494 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (1/5) (b42996ba5c2dd049f48bdf145a958284) [DEPLOYING].

2023-12-06 10:45:51,494 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (1/5) (b42996ba5c2dd049f48bdf145a958284) switched from DEPLOYING to RUNNING.

2023-12-06 10:45:51,495 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (4/5) (130f7afca9aef9f118f3a8bf2ef0faf0) [DEPLOYING].

2023-12-06 10:45:51,495 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (4/5) (130f7afca9aef9f118f3a8bf2ef0faf0) [DEPLOYING].

2023-12-06 10:45:51,495 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (4/5) (130f7afca9aef9f118f3a8bf2ef0faf0) switched from DEPLOYING to RUNNING.

2023-12-06 10:45:51,508 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (3/5) (c5916f065223d42e912caaedfacf84c8) [DEPLOYING].

2023-12-06 10:45:51,509 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (3/5) (c5916f065223d42e912caaedfacf84c8) [DEPLOYING].

2023-12-06 10:45:51,509 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (3/5) (c5916f065223d42e912caaedfacf84c8) switched from DEPLOYING to RUNNING.

2023-12-06 10:45:51,522 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (2/5) (d8864a3c570049ce4eca5a353564e6df) [DEPLOYING].

2023-12-06 10:45:51,522 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (2/5) (d8864a3c570049ce4eca5a353564e6df) [DEPLOYING].

2023-12-06 10:45:51,522 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (2/5) (d8864a3c570049ce4eca5a353564e6df) switched from DEPLOYING to RUNNING.

2023-12-06 10:45:51,524 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (1/5) (b42996ba5c2dd049f48bdf145a958284) switched from RUNNING to FINISHED.

2023-12-06 10:45:51,524 INFO org.apache.flink.runtime.taskmanager.Task [] - Freeing task resources for Reduce (SUM(1), at main(WordCount.java:87) (1/5) (b42996ba5c2dd049f48bdf145a958284).

2023-12-06 10:45:51,524 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Un-registering task and sending final execution state FINISHED to JobManager for task Reduce (SUM(1), at main(WordCount.java:87) (1/5) b42996ba5c2dd049f48bdf145a958284.

2023-12-06 10:45:51,549 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (4/5) (130f7afca9aef9f118f3a8bf2ef0faf0) switched from RUNNING to FINISHED.

2023-12-06 10:45:51,549 INFO org.apache.flink.runtime.taskmanager.Task [] - Freeing task resources for Reduce (SUM(1), at main(WordCount.java:87) (4/5) (130f7afca9aef9f118f3a8bf2ef0faf0).

2023-12-06 10:45:51,549 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Un-registering task and sending final execution state FINISHED to JobManager for task Reduce (SUM(1), at main(WordCount.java:87) (4/5) 130f7afca9aef9f118f3a8bf2ef0faf0.

2023-12-06 10:45:51,550 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (3/5) (c5916f065223d42e912caaedfacf84c8) switched from RUNNING to FINISHED.

2023-12-06 10:45:51,550 INFO org.apache.flink.runtime.taskmanager.Task [] - Freeing task resources for Reduce (SUM(1), at main(WordCount.java:87) (3/5) (c5916f065223d42e912caaedfacf84c8).

2023-12-06 10:45:51,550 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Un-registering task and sending final execution state FINISHED to JobManager for task Reduce (SUM(1), at main(WordCount.java:87) (3/5) c5916f065223d42e912caaedfacf84c8.

2023-12-06 10:45:51,551 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot f2b43e2529e1649dbdd931214c9f94ea.

2023-12-06 10:45:51,551 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task DataSink (collect()) (1/5).

2023-12-06 10:45:51,557 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 36baecc30276e70935cea5246ce9738d.

2023-12-06 10:45:51,557 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Reduce (SUM(1), at main(WordCount.java:87) (5/5).

.....

2023-12-06 10:45:51,562 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (5/5) (98314de2f88839e8129230fdec72e1c0) switched from CREATED to DEPLOYING.

2023-12-06 10:45:51,562 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Reduce (SUM(1), at main(WordCount.java:87) (5/5) (98314de2f88839e8129230fdec72e1c0) [DEPLOYING].

2023-12-06 10:45:51,563 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Reduce (SUM(1), at main(WordCount.java:87) (5/5) (98314de2f88839e8129230fdec72e1c0) [DEPLOYING].

2023-12-06 10:45:51,563 INFO org.apache.flink.runtime.taskmanager.Task [] - Reduce (SUM(1), at main(WordCount.java:87) (5/5) (98314de2f88839e8129230fdec72e1c0) switched from DEPLOYING to RUNNING.

.....................

Note, however, that setting the parallelism any higher causes problems; see Flink: fail fast if job parallelism is larger than the total number of slots.

Further reading: Apache Flink: Tasks and Task Slots (an explanation in Chinese); Re: Is there any way to set the parallelism of operators like group by, join?

# Operator Chaining

An Operator Chain consists of two or more consecutive Operators without any repartitioning in between. Operators within the same Operator Chain forward records to each other directly without going through serialization or Flink’s network stack.

The sample dataflow in the figure below is executed with five subtasks, and hence with five parallel threads:

http://wuchong.me/blog/2016/05/09/flink-internals-understanding-execution-resources/

https://stackoverflow.com/questions/62664972/what-happens-if-total-parallel-instances-of-operators-are-higher-than-the-parall
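The chaining rule described above can be sketched in plain Java. This is a simplified model, not Flink's implementation: the `Op` type and the `repartitionsInput` flag are hypothetical, and only the "no repartitioning in between" condition from the text is applied (Flink checks further conditions that are not modelled here).

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model of operator chaining; Op is a hypothetical type, not a Flink class. */
final class ChainSketch {

    static final class Op {
        final String name;
        final boolean repartitionsInput; // true if records are redistributed before reaching this operator

        Op(String name, boolean repartitionsInput) {
            this.name = name;
            this.repartitionsInput = repartitionsInput;
        }
    }

    /** Groups consecutive operators into chains, starting a new chain at every repartitioning edge. */
    static List<List<String>> chains(List<Op> pipeline) {
        List<List<String>> result = new ArrayList<>();
        for (Op op : pipeline) {
            if (result.isEmpty() || op.repartitionsInput) {
                result.add(new ArrayList<>());
            }
            result.get(result.size() - 1).add(op.name);
        }
        return result;
    }

    public static void main(String[] args) {
        List<Op> pipeline = List.of(
                new Op("Source", false),
                new Op("map()", false),
                new Op("keyBy()/window()/apply()", true), // keyBy redistributes records by key
                new Op("Sink", true));                    // assumed to be redistributed to the sink
        System.out.println(chains(pipeline));
    }
}
```

With this assumed pipeline, Source and map() end up in one chain, while the keyed operator and the sink each start a chain of their own.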

# Summary

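A program (process) can run across multiple TaskManagers (TMs). Each TM has multiple Task Slots (TSs), and the total number of slots is the highest parallelism the cluster supports. A slot can run multiple subtasks (task instances), and each subtask corresponds to one thread.

For a TM: it occupies a certain amount of CPU and memory, configurable through taskmanager.numberOfTaskSlots and taskmanager.heap.size. In practice, taskmanager.numberOfTaskSlots only specifies how many slots the TM has; it cannot isolate a given number of CPUs for the TM. Leaving slot sharing aside, each slot runs one subtask (Task implements Runnable, and a subtask is a concrete instance executing a Task), which is why the official recommendation is to set taskmanager.numberOfTaskSlots equal or proportional to the number of CPU cores.

Of course, a scheduler such as YARN can help: in Flink on YARN mode, each YARN container is assigned a specified amount of CPU, which gives fairly strict CPU isolation (YARN uses cgroups for time-slice-based resource scheduling, and each container runs one JM or TM instance). taskmanager.heap.size configures the TM's memory. If a TM has N slots, each slot gets 1/N of the TM's memory; slots within the same TM are isolated only in memory, while CPU is shared.

For a job: the number of slots a job needs is greater than or equal to the largest parallelism configured on any of its operators. If all operators share the same slotSharingGroup, the number of slots needed equals that largest parallelism.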
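The slot rule for a job can be made concrete with a small calculation. The sketch below is plain Java with hypothetical names (`SlotEstimate`, `requiredSlots`); it assumes that operators in the same slot sharing group share slots, so each group needs as many slots as its largest parallelism, and the groups are summed.

```java
import java.util.HashMap;
import java.util.Map;

/** Estimates how many slots a job needs; a sketch under the assumptions stated above. */
final class SlotEstimate {

    /** slotSharingGroups[i] and parallelism[i] describe the i-th operator of the job. */
    static int requiredSlots(String[] slotSharingGroups, int[] parallelism) {
        Map<String, Integer> maxPerGroup = new HashMap<>();
        for (int i = 0; i < parallelism.length; i++) {
            // Within one group, only the largest parallelism matters.
            maxPerGroup.merge(slotSharingGroups[i], parallelism[i], Integer::max);
        }
        int slots = 0;
        for (int groupMax : maxPerGroup.values()) {
            slots += groupMax;
        }
        return slots;
    }

    public static void main(String[] args) {
        // All operators in one group: the job needs max(2, 2, 1) slots.
        System.out.println(requiredSlots(
                new String[] {"default", "default", "default"}, new int[] {2, 2, 1}));
        // Moving the last operator into its own group adds its parallelism on top.
        System.out.println(requiredSlots(
                new String[] {"default", "default", "sink"}, new int[] {2, 2, 1}));
    }
}
```

The first call matches the "equal to the largest parallelism" case from the text; the second shows why the general statement is "greater than or equal to".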

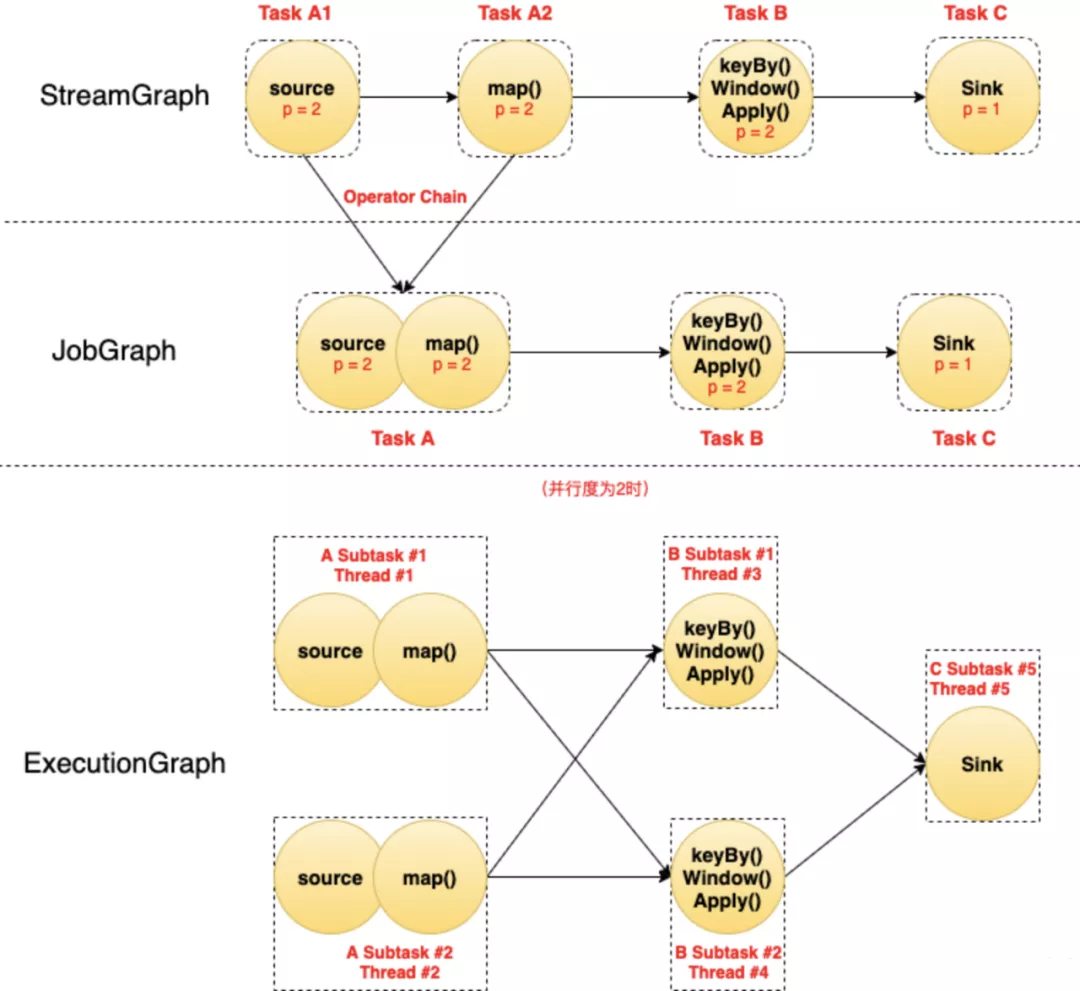

# 1.1.3 StreamGraph/JobGraph/ExecutionGraph

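StreamGraph: the initial graph generated from the code the user writes with the Stream API.

- StreamNode: the class that represents an operator and carries all of its properties, such as parallelism and incoming and outgoing edges.

- StreamEdge: an edge connecting two StreamNodes.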

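JobGraph: the StreamGraph is optimized into a JobGraph, which is the data structure submitted to the JobManager.

- JobVertex: after optimization, several StreamNodes that meet the chaining conditions may be chained together into one JobVertex, so a JobVertex contains one or more operators. Its input is a JobEdge and its output is an IntermediateDataSet.

- IntermediateDataSet: the output of a JobVertex, i.e. the data set produced by its operators. The producer is a JobVertex and the consumer is a JobEdge.

- JobEdge: a data transfer channel in the job graph. Its source is an IntermediateDataSet and its target is a JobVertex; data flows from the IntermediateDataSet through the JobEdge to the target JobVertex.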

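ExecutionGraph: the JobManager generates the ExecutionGraph from the JobGraph. The ExecutionGraph is the parallelized version of the JobGraph and the core data structure of the scheduling layer.

- ExecutionJobVertex: corresponds one-to-one to a JobVertex in the JobGraph. Each ExecutionJobVertex has as many ExecutionVertex instances as its parallelism.

- ExecutionVertex: one parallel subtask of an ExecutionJobVertex. Its input is an ExecutionEdge and its output is an IntermediateResultPartition.

- IntermediateResult: corresponds one-to-one to an IntermediateDataSet in the JobGraph. An IntermediateResult contains multiple IntermediateResultPartitions; their number equals the parallelism of the producing operator.

- IntermediateResultPartition: one output partition of an ExecutionVertex. The producer is an ExecutionVertex and the consumers are several ExecutionEdges.

- ExecutionEdge: an input of an ExecutionVertex. Its source is an IntermediateResultPartition and its target is an ExecutionVertex; each edge has exactly one source and one target.

- Execution: one attempt to execute an ExecutionVertex. After a failure, or when data has to be recomputed, an ExecutionVertex may have several ExecutionAttemptIDs. An Execution is uniquely identified by its ExecutionAttemptID, and the JM and TM use the ExecutionAttemptID to address messages about task deployment and task status updates.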

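Physical execution graph: the "graph" formed once the JobManager has scheduled the job according to the ExecutionGraph and deployed Tasks on the TaskManagers. It is not a concrete data structure.

- Task: once an Execution has been scheduled, the corresponding Task is started on the assigned TaskManager. A Task wraps the operators that carry the user's logic.

- ResultPartition: the data produced by one Task; corresponds one-to-one to an IntermediateResultPartition in the ExecutionGraph.

- ResultSubpartition: a sub-partition of a ResultPartition. Each ResultPartition contains multiple ResultSubpartitions; their number is determined by the number of downstream consuming Tasks and the DistributionPattern.

- InputGate: wraps the input of a Task; corresponds one-to-one to a JobEdge in the JobGraph. Each InputGate consumes one or more ResultPartitions.

- InputChannel: each InputGate contains one or more InputChannels. An InputChannel corresponds one-to-one to an ExecutionEdge in the ExecutionGraph and is connected one-to-one to a ResultSubpartition, i.e. each InputChannel receives the output of one ResultSubpartition.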

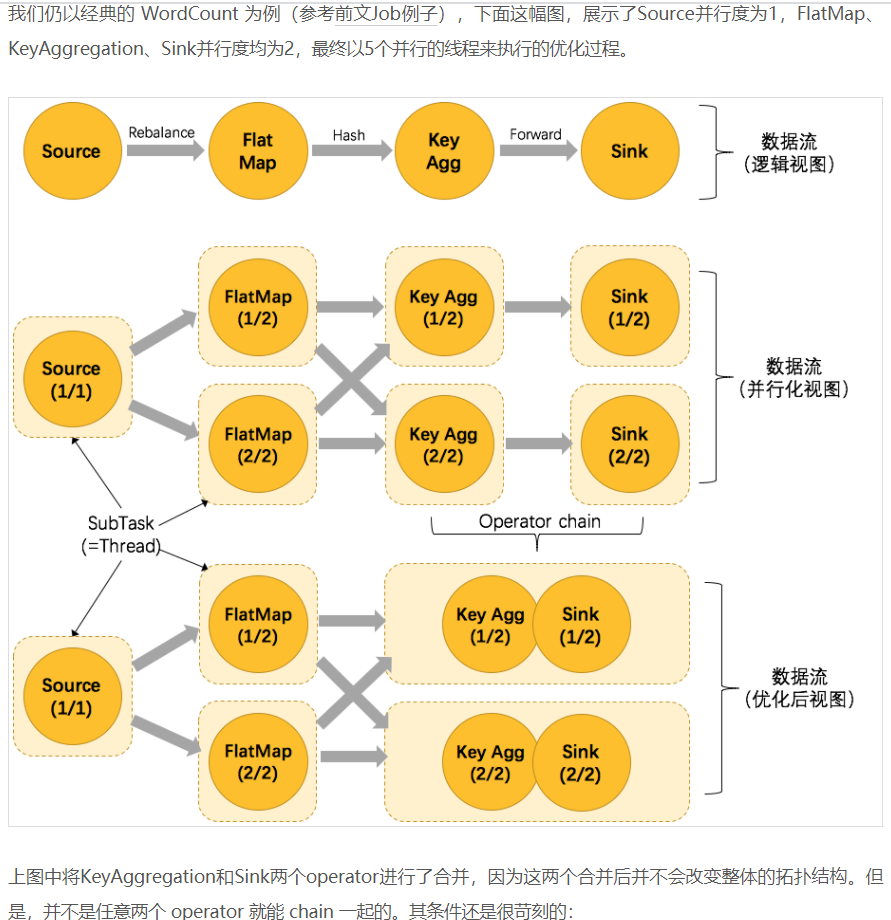

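In the figure, each circle is an operator, each dashed rounded box is a Task, each dashed rectangular box is a Subtask, and p denotes the operator's parallelism. At the top is the StreamGraph; before any optimization it contains four operators/tasks: Task A1, Task A2, Task B, and Task C.

After the chaining optimization (covered later), Task A1 and Task A2 are merged into a new Task A (which can be thought of as a new, merged operator), giving the JobGraph in the middle.

When this is executed with parallelism 2 (requiring 2 slots), Task A produces 2 subtasks, which occupy Thread #1 and Thread #2; Task B produces 2 subtasks, which occupy Thread #3 and Thread #4; Task C produces 1 subtask, which occupies Thread #5.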
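The step from JobGraph to parallel subtasks can be illustrated with a few lines of plain Java. The class and method names are hypothetical; the sketch only expands each task into as many subtasks as its parallelism and labels them in the "(i/p)" style seen in the TaskManager log.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Expands tasks into parallel subtasks; an illustration, not Flink's ExecutionGraph code. */
final class SubtaskExpansion {

    /** Returns one label per subtask, in the order the tasks appear in the map. */
    static List<String> expand(Map<String, Integer> taskParallelism) {
        List<String> subtasks = new ArrayList<>();
        for (Map.Entry<String, Integer> task : taskParallelism.entrySet()) {
            for (int i = 1; i <= task.getValue(); i++) {
                subtasks.add(task.getKey() + " (" + i + "/" + task.getValue() + ")");
            }
        }
        return subtasks;
    }

    public static void main(String[] args) {
        Map<String, Integer> jobGraph = new LinkedHashMap<>();
        jobGraph.put("Task A", 2);
        jobGraph.put("Task B", 2);
        jobGraph.put("Task C", 1);
        // Each subtask runs in its own thread, so this job uses five threads.
        expand(jobGraph).forEach(System.out::println);
    }
}
```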

# 1.2 Key Concepts

# Streams

Obviously, streams are a fundamental aspect of stream processing. However, streams can have different characteristics that affect how a stream can and should be processed. Flink is a versatile processing framework that can handle any kind of stream.

Bounded and unbounded streams: Streams can be unbounded or bounded, i.e., fixed-sized data sets. Flink has sophisticated features to process unbounded streams, but also dedicated operators to efficiently process bounded streams.

Unbounded streams have a start but no defined end. They do not terminate and provide data as it is generated. Unbounded streams must be continuously processed, i.e., events must be promptly handled after they have been ingested. It is not possible to wait for all input data to arrive because the input is unbounded and will not be complete at any point in time. Processing unbounded data often requires that events are ingested in a specific order, such as the order in which events occurred, to be able to reason about result completeness.

Bounded streams have a defined start and end. Bounded streams can be processed by ingesting all data before performing any computations. Ordered ingestion is not required to process bounded streams because a bounded data set can always be sorted. Processing of bounded streams is also known as batch processing.

Real-time and recorded streams: All data are generated as streams. There are two ways to process the data: processing it in real time as it is generated, or persisting the stream to a storage system, e.g., a file system or object store, and processing it later. Flink applications can process recorded or real-time streams.

# State

Every non-trivial streaming application is stateful, i.e., only applications that apply transformations on individual events do not require state. Any application that runs basic business logic needs to remember events or intermediate results to access them at a later point in time, for example when the next event is received or after a specific time duration. Application state is a first-class citizen in Flink. You can see that by looking at all the features that Flink provides in the context of state handling.

- Multiple State Primitives: Flink provides state primitives for different data structures, such as atomic values, lists, or maps. Developers can choose the state primitive that is most efficient based on the access pattern of the function.

- Pluggable State Backends: Application state is managed in and checkpointed by a pluggable state backend. Flink features different state backends that store state in memory or in RocksDB, an efficient embedded on-disk data store. Custom state backends can be plugged in as well.

- Exactly-once state consistency: Flink’s checkpointing and recovery algorithms guarantee the consistency of application state in case of a failure. Hence, failures are transparently handled and do not affect the correctness of an application.

- Very Large State: Flink is able to maintain application state of several terabytes in size due to its asynchronous and incremental checkpoint algorithm.

- Scalable Applications: Flink supports scaling of stateful applications by redistributing the state to more or fewer workers.
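To make the idea of per-key state concrete, here is a minimal plain-Java sketch of what a keyed running sum has to remember between events. It is not Flink code: the `HashMap` stands in for state that Flink would manage and checkpoint, and the class name is hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/** Keeps one running sum per key; a stand-in for keyed state, without any fault tolerance. */
final class KeyedSumSketch {

    // One entry per key. In Flink this would live in a state backend and be checkpointed.
    private final Map<String, Integer> sumPerKey = new HashMap<>();

    /** Processes one (key, value) record and returns the updated sum for that key. */
    int process(String key, int value) {
        return sumPerKey.merge(key, value, Integer::sum);
    }

    public static void main(String[] args) {
        KeyedSumSketch counter = new KeyedSumSketch();
        for (String word : new String[] {"to", "be", "or", "not", "to", "be"}) {
            System.out.println(word + " -> " + counter.process(word, 1));
        }
    }
}
```

The result for the second "to" depends on the first one having been remembered, which is exactly the information that would be lost on failure without checkpointed state.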

# Time

- Event-time Mode: Applications that process streams with event-time semantics compute results based on timestamps of the events. Thereby, event-time processing allows for accurate and consistent results regardless of whether recorded or real-time events are processed.

- Watermark Support: Flink employs watermarks to reason about time in event-time applications. Watermarks are also a flexible mechanism to trade off the latency and completeness of results.

- Late Data Handling: When processing streams in event-time mode with watermarks, it can happen that a computation has been completed before all associated events have arrived. Such events are called late events. Flink features multiple options to handle late events, such as rerouting them via side outputs and updating previously completed results.

- Processing-time Mode: In addition to its event-time mode, Flink also supports processing-time semantics which performs computations as triggered by the wall-clock time of the processing machine. The processing-time mode can be suitable for certain applications with strict low-latency requirements that can tolerate approximate results.
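The interplay of watermarks and late events can be sketched as follows. This is a plain-Java illustration with hypothetical names, assuming a bounded-out-of-orderness strategy: the watermark trails the largest timestamp seen so far by a fixed delay, and an event whose timestamp is not ahead of the current watermark counts as late.

```java
/** Tracks a watermark that lags the highest seen timestamp by a fixed bound (a sketch, not Flink code). */
final class WatermarkSketch {

    private final long maxOutOfOrdernessMs;
    private long maxTimestampSeen = Long.MIN_VALUE;

    WatermarkSketch(long maxOutOfOrdernessMs) {
        this.maxOutOfOrdernessMs = maxOutOfOrdernessMs;
    }

    /** No event with a timestamp at or below the returned value is expected any more. */
    long currentWatermark() {
        if (maxTimestampSeen == Long.MIN_VALUE) {
            return Long.MIN_VALUE; // nothing seen yet; also avoids arithmetic underflow
        }
        return maxTimestampSeen - maxOutOfOrdernessMs - 1;
    }

    /** Returns true if the event is late with respect to the watermark before it arrived. */
    boolean onEvent(long timestampMs) {
        boolean late = timestampMs <= currentWatermark();
        maxTimestampSeen = Math.max(maxTimestampSeen, timestampMs);
        return late;
    }

    public static void main(String[] args) {
        WatermarkSketch wm = new WatermarkSketch(5);
        for (long ts : new long[] {10, 7, 20, 12}) {
            System.out.println("event " + ts + " late=" + wm.onEvent(ts)
                    + " watermark=" + wm.currentWatermark());
        }
    }
}
```

A larger bound tolerates more disorder before an event counts as late but delays results, which is the latency versus completeness trade-off mentioned above.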

# Other Terms

Cluster

- Flink Cluster A distributed system consisting of (typically) one JobManager and one or more Flink TaskManager processes.

- Flink Application Cluster A Flink Application Cluster is a dedicated Flink Cluster that only executes Flink Jobs from one Flink Application. The lifetime of the Flink Cluster is bound to the lifetime of the Flink Application.

- Flink Job Cluster A Flink Job Cluster is a dedicated Flink Cluster that only executes a single Flink Job. The lifetime of the Flink Cluster is bound to the lifetime of the Flink Job. This deployment mode has been deprecated since Flink 1.15.

- Flink Session Cluster A long-running Flink Cluster which accepts multiple Flink Jobs for execution. The lifetime of this Flink Cluster is not bound to the lifetime of any Flink Job. Formerly, a Flink Session Cluster was also known as a Flink Cluster in session mode. Compare to Flink Application Cluster.

Manager

- Flink TaskManager TaskManagers are the worker processes of a Flink Cluster. Tasks are scheduled to TaskManagers for execution. They communicate with each other to exchange data between subsequent Tasks.

- Flink JobManager The JobManager is the orchestrator of a Flink Cluster. It contains three distinct components:

  - Flink Resource Manager

  - Flink Dispatcher

  - one Flink JobMaster per running Flink Job. JobMasters are one of the components running in the JobManager. A JobMaster is responsible for supervising the execution of the Tasks of a single job.

Flink Application A Flink application is a Java Application that submits one or multiple Flink Jobs from the main() method (or by some other means). Submitting jobs is usually done by calling execute() on an execution environment. The jobs of an application can either be submitted to a long running Flink Session Cluster, to a dedicated Flink Application Cluster, or to a Flink Job Cluster.

Record Records are the constituent elements of a data set or data stream. Operators and Functions receive records as input and emit records as output.

Event An event is a statement about a change of the state of the domain modelled by the application. Events can be input and/or output of a stream or batch processing application. Events are special types of records.

Instance The term instance is used to describe a specific instance of a specific type (usually Operator or Function) during runtime. As Apache Flink is mostly written in Java, this corresponds to the definition of Instance or Object in Java. In the context of Apache Flink, the term parallel instance is also frequently used to emphasize that multiple instances of the same Operator or Function type are running in parallel.

- Operator Node of a Logical Graph. An Operator performs a certain operation, which is usually executed by a Function. Sources and Sinks are special Operators for data ingestion and data egress. https://nightlies.apache.org/flink/flink-docs-release-1.15/docs/dev/datastream/operators/overview/

- Function Functions are implemented by the user and encapsulate the application logic of a Flink program. Most Functions are wrapped by a corresponding Operator. https://nightlies.apache.org/flink/flink-docs-release-1.15/docs/dev/datastream/user_defined_functions/

JobResultStore The JobResultStore is a Flink component that persists the results of globally terminated (i.e. finished, cancelled or failed) jobs to a filesystem, allowing the results to outlive a finished job. These results are then used by Flink to determine whether jobs should be subject to recovery in highly-available clusters.

Managed State Managed State describes application state which has been registered with the framework. For Managed State, Apache Flink will take care of persistence and rescaling, among other things.

Checkpoint Storage The location where the State Backend will store its snapshot during a checkpoint (Java Heap of JobManager or Filesystem).

Partition A partition is an independent subset of the overall data stream or data set. A data stream or data set is divided into partitions by assigning each record to one or more partitions. Partitions of data streams or data sets are consumed by Tasks during runtime. A transformation which changes the way a data stream or data set is partitioned is often called repartitioning.

(Runtime) Execution Mode DataStream API programs can be executed in one of two execution modes: BATCH or STREAMING. See Execution Mode for more details.

State Backend For stream processing programs, the State Backend of a Flink Job determines how its state is stored on each TaskManager (Java Heap of TaskManager or (embedded) RocksDB).

Table Program A generic term for pipelines declared with Flink’s relational APIs (Table API or SQL).

Transformation A Transformation is applied on one or more data streams or data sets and results in one or more output data streams or data sets. A transformation might change a data stream or data set on a per-record basis, but might also only change its partitioning or perform an aggregation. While Operators and Functions are the “physical” parts of Flink’s API, Transformations are only an API concept. Specifically, most transformations are implemented by certain Operators.

# 2. Deployment

# 2.0 Deployment Mode

Deployment Modes

# Flink Client

Compiles batch or streaming applications into a dataflow graph, which it then submits to the JobManager.

Implementation:

$ ./bin/flink list

./bin/flink run -p 2 ./examples/*WordCount-java*.jar

POST http://localhost:8081/jars/${jarId}/run
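The REST submission can also be prepared from Java. The sketch below only builds the request with the JDK's `java.net.http` API and does not send it; the class name is hypothetical, the jar ID is assumed to come from a previous upload, and the `parallelism` field in the JSON body is an assumption about the request body that should be checked against the REST API reference for your Flink version.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Builds (but does not send) a POST request to /jars/{jarId}/run; a sketch under stated assumptions. */
final class JarRunRequest {

    static HttpRequest build(String baseUrl, String jarId, int parallelism) {
        // Assumed body shape: a JSON object carrying the desired parallelism.
        String body = "{\"parallelism\": " + parallelism + "}";
        return HttpRequest.newBuilder(URI.create(baseUrl + "/jars/" + jarId + "/run"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) {
        // "example.jar" is a placeholder jar ID, not a real upload.
        HttpRequest request = build("http://localhost:8081", "example.jar", 2);
        System.out.println(request.method() + " " + request.uri());
    }
}
```

Sending it would be a matter of passing the request to an `HttpClient`, which requires a running cluster and is left out here.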

# JobManager

JobManager is the name of the central work coordination component of Flink. It has implementations for different resource providers, which differ on high-availability, resource allocation behavior and supported job submission modes.

JobManager modes for job submissions:

- Application Mode: runs the cluster exclusively for one application. The job's main method (or client) gets executed on the JobManager. Calling execute/executeAsync multiple times in an application is supported.

- Per-Job Mode: runs the cluster exclusively for one job. The job's main method (or client) runs only prior to the cluster creation.

- Session Mode: one JobManager instance manages multiple jobs sharing the same cluster of TaskManagers.

Implementation:

- Standalone (this is the barebone mode that requires just JVMs to be launched. Deployment with Docker, Docker Swarm / Compose, non-native Kubernetes and other models is possible through manual setup in this mode)

- Kubernetes

- YARN

# TaskManager

TaskManagers are the services actually performing the work of a Flink job.

# Optional External Components - High Availability Service Provider

Flink's JobManager can be run in high availability mode which allows Flink to recover from JobManager faults. In order to failover faster, multiple standby JobManagers can be started to act as backups.

- Zookeeper

- Kubernetes HA

# Optional External Components - File Storage and Persistency

For checkpointing (recovery mechanism for streaming jobs) Flink relies on external [file storage systems](https://nightlies.apache.org/flink/flink-docs-release-1.18/docs/deployment/filesystems/overview/)

- Local File System Flink has built-in support for the file system of the local machine, including any NFS or SAN drives mounted into that local file system. It can be used by default without additional configuration. Local files are referenced with the file:// URI scheme.

- Hadoop-compatible file systems

- Pluggable File Systems (Amazon S3, Aliyun OSS and Azure Blob Storage.)

# Optional External Components - Resource Provider

Flink can be deployed through different Resource Provider Frameworks, such as Kubernetes or YARN. See JobManager implementations above.

# Optional External Components - Metrics Storage

# Optional External Components - Application-level data sources and sinks

While application-level data sources and sinks are not technically part of the deployment of Flink cluster components, they should be considered when planning a new Flink production deployment. Colocating frequently used data with Flink can have significant performance benefits.

Predefined Sources and Sinks A few basic data sources and sinks are built into Flink and are always available. The predefined data sources include reading from files, directories, and sockets, and ingesting data from collections and iterators. The predefined data sinks support writing to files, to stdout and stderr, and to sockets.

Bundled Connectors Connectors provide code for interfacing with various third-party systems. Currently these systems are supported:

- Apache Kafka (source/sink)

- Apache Cassandra (source/sink)

- Amazon DynamoDB (sink)

- Amazon Kinesis Data Streams (source/sink)

- Amazon Kinesis Data Firehose (sink)

- DataGen (source)

- Elasticsearch (sink)

- Opensearch (sink)

- FileSystem (source/sink)

- RabbitMQ (source/sink)

- Google PubSub (source/sink)

- Hybrid Source (source)

- Apache Pulsar (source)

- JDBC (sink)

- MongoDB (source/sink)

Connectors in Apache Bahir Additional streaming connectors for Flink are being released through Apache Bahir, including:

- Apache ActiveMQ (source/sink)

- Apache Flume (sink)

- Redis (sink)

- Akka (sink)

- Netty (source)

Data Enrichment via Async I/O Using a connector isn’t the only way to get data in and out of Flink. One common pattern is to query an external database or web service in a Map or FlatMap in order to enrich the primary datastream. Flink offers an API for Asynchronous I/O to make it easier to do this kind of enrichment efficiently and robustly.
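The benefit of asynchronous enrichment can be shown without Flink. The following plain-Java sketch uses `CompletableFuture`; `lookupCountry` is a hypothetical stand-in for a non-blocking database or web-service client, and the code illustrates the pattern only, not Flink's Async I/O API.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.stream.Collectors;

/** Enriches records with overlapping asynchronous lookups (pattern sketch, not Flink code). */
final class AsyncEnrichmentSketch {

    /** Hypothetical external lookup; a real client would issue a network request here. */
    static CompletableFuture<String> lookupCountry(String userId) {
        return CompletableFuture.supplyAsync(() -> userId.startsWith("de-") ? "DE" : "unknown");
    }

    /** Starts every lookup before waiting for any result, so the requests overlap. */
    static List<String> enrich(List<String> userIds) {
        List<CompletableFuture<String>> pending = userIds.stream()
                .map(id -> lookupCountry(id).thenApply(country -> id + "," + country))
                .collect(Collectors.toList());
        // Joining in list order keeps the output in input order.
        return pending.stream().map(CompletableFuture::join).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(enrich(List.of("de-1", "x-2")));
    }
}
```

A synchronous Map would instead wait for each lookup before starting the next, so total latency would grow with the number of records.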

# 2.1 Resource Provider Standalone Mode: Local Standalone

The standalone mode is the most barebone way of deploying Flink: The Flink services described in the deployment overview are just launched as processes on the operating system. Unlike deploying Flink with a resource provider such as Kubernetes or YARN, you have to take care of restarting failed processes, or allocation and de-allocation of resources during operation.

Deployment/Standalone

# Install

# Session Mode

$ java -version

$ tar -xzf flink-*.tgz

$ cd flink-* && ls -l

$ ./bin/start-cluster.sh # starts 2 processes: a JVM for the JobManager and a JVM for the TaskManager

Open localhost:8081 to view the Flink dashboard.

$ ./bin/flink run examples/streaming/WordCount.jar

$ tail log/flink-*-taskexecutor-*.out

$ ./bin/stop-cluster.sh

WordCount: https://github.com/apache/flink/blob/master/flink-examples/flink-examples-streaming/src/main/java/org/apache/flink/streaming/examples/wordcount/WordCount.java

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.api.java.utils.MultipleParameterTool;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.examples.wordcount.util.WordCountData;

import org.apache.flink.util.Collector;

import org.apache.flink.util.Preconditions;

public class WordCount {

    public static void main(String[] args) throws Exception {

        // Parse command-line arguments and make them visible in the web interface.

        final MultipleParameterTool params = MultipleParameterTool.fromArgs(args);

        final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        env.getConfig().setGlobalJobParameters(params);

        // Build the input: the union of all --input files, or the built-in sample text.

        DataStream<String> text = null;

        if (params.has("input")) {

            for (String input : params.getMultiParameterRequired("input")) {

                if (text == null) {

                    text = env.readTextFile(input);

                } else {

                    text = text.union(env.readTextFile(input));

                }

            }

            Preconditions.checkNotNull(text, "Input DataStream should not be null.");

        } else {

            System.out.println("Executing WordCount example with default input data set.");

            System.out.println("Use --input to specify file input.");

            text = env.fromElements(WordCountData.WORDS);

        }

        // Split lines into (word, 1) pairs, group by the word (field 0), and sum the counts (field 1).

        DataStream<Tuple2<String, Integer>> counts = text.flatMap(new Tokenizer()).keyBy(value -> value.f0).sum(1);

        if (params.has("output")) {

            counts.writeAsText(params.get("output"));

        } else {

            System.out.println("Printing result to stdout. Use --output to specify output path.");

            counts.print();

        }

        env.execute("Streaming WordCount");

    }

    // Splits a line into lowercase words and emits one (word, 1) pair per non-empty word.

    public static final class Tokenizer implements FlatMapFunction<String, Tuple2<String, Integer>> {

        @Override

        public void flatMap(String value, Collector<Tuple2<String, Integer>> out) {

            String[] tokens = value.toLowerCase().split("\\W+");

            for (String token : tokens) {

                if (token.length() > 0) {

                    out.collect(new Tuple2<>(token, 1));

                }

            }

        }

    }

}

# Application Mode

The application jar file needs to be available in the classpath. The easiest approach to achieve that is putting the jar into the lib/ folder:

$ cp ./examples/streaming/TopSpeedWindowing.jar lib/

To start a Flink JobManager with an embedded application, we use the bin/standalone-job.sh script. We demonstrate this mode by locally starting the TopSpeedWindowing.jar example, running on a single TaskManager.

$ ./bin/standalone-job.sh start --job-classname org.apache.flink.streaming.examples.windowing.TopSpeedWindowing

The web interface is now available at localhost:8081. However, the application won’t be able to start, because there are no TaskManagers running yet:

$ ./bin/taskmanager.sh start # Note: you can start multiple TaskManagers if your application needs more resources.

Stopping the services is also supported via the scripts. Call them multiple times if you want to stop multiple instances, or use stop-all:

$ ./bin/taskmanager.sh stop

$ ./bin/standalone-job.sh stop

# Multiple Instances

bin/start-cluster.sh and bin/stop-cluster.sh rely on conf/masters and conf/workers to determine the number of cluster component instances.

Example 1: Start a cluster with 2 TaskManagers locally

conf/masters contents:

localhost

conf/workers contents:

localhost

localhost

If password-less SSH access to the listed machines is configured, and they share the same directory structure, the scripts also support starting and stopping instances remotely.

Example 2: Start a distributed cluster

This assumes a cluster with 4 machines (master1, worker1, worker2, worker3), which all can reach each other over the network.

conf/masters contents:

master1

conf/workers contents:

worker1

worker2

worker3

Note that the configuration key jobmanager.rpc.address needs to be set to master1 for this to work.

High-Availability Example 3: Standalone HA Cluster with 2 JobManagers

1. In order to enable HA for a standalone cluster, you have to use the ZooKeeper HA services.

Configure high availability mode and ZooKeeper quorum in conf/flink-conf.yaml:

high-availability.type: zookeeper

high-availability.zookeeper.quorum: localhost:2181

high-availability.zookeeper.path.root: /flink

high-availability.cluster-id: /cluster_one # important: customize per cluster

high-availability.storageDir: hdfs:///flink/recovery

2. In order to start an HA cluster, configure the masters file in conf/masters. The masters file contains all hosts on which JobManagers are started, and the ports to which the web user interface binds.

Configure masters in conf/masters:

localhost:8081

localhost:8082

3. Configure ZooKeeper server in conf/zoo.cfg (currently it’s only possible to run a single ZooKeeper server per machine):

server.0=localhost:2888:3888

4. Start ZooKeeper quorum:

$ ./bin/start-zookeeper-quorum.sh

Starting zookeeper daemon on host localhost.

5. Start an HA-cluster:

$ ./bin/start-cluster.sh

Starting HA cluster with 2 masters and 1 peers in ZooKeeper quorum.

Starting standalonesession daemon on host localhost.

Starting standalonesession daemon on host localhost.

Starting taskexecutor daemon on host localhost.

6. Stop ZooKeeper quorum and cluster:

$ ./bin/stop-cluster.sh

Stopping taskexecutor daemon (pid: 7647) on localhost.

Stopping standalonesession daemon (pid: 7495) on host localhost.

Stopping standalonesession daemon (pid: 7349) on host localhost.

$ ./bin/stop-zookeeper-quorum.sh

Stopping zookeeper daemon (pid: 7101) on host localhost.

By default, the JobManager picks a random port for inter-process communication. You can change this via the high-availability.jobmanager.port key. This key accepts single ports (e.g. 50010), ranges (50000-50025), or a combination of both (50010,50011,50020-50025,50050-50075).
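To make the accepted syntax concrete, here is a small Python sketch that expands such a port specification into the individual ports it covers. It is an illustration only: `parse_port_spec` is a hypothetical helper, and Flink's own parsing and validation may differ in detail.

```python
from typing import List


def parse_port_spec(spec: str) -> List[int]:
    """Expand a spec such as '50010,50011,50020-50025' into a sorted port list."""
    ports = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            # A range such as 50000-50025 includes both endpoints.
            start_text, end_text = part.split("-", 1)
            start, end = int(start_text), int(end_text)
            if start > end:
                raise ValueError(f"invalid range: {part}")
            ports.update(range(start, end + 1))
        else:
            ports.add(int(part))
    for port in ports:
        if not 0 < port <= 65535:
            raise ValueError(f"port out of range: {port}")
    return sorted(ports)


print(parse_port_spec("50010"))
print(len(parse_port_spec("50000-50025")))
print(len(parse_port_spec("50010,50011,50020-50025,50050-50075")))
```

A wider range gives the JobManager more candidate ports to choose from, at the cost of a wider port window to open in the firewall.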

# Log Analysis

# Job Manager Log

vim log/flink-root-standalonesession-0-vm01.log

# Startup

2022-05-26 14:14:26,762 INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint [] - Rest endpoint listening at localhost:8081

2022-05-26 14:14:26,763 INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint [] - http://localhost:8081 was granted leadership with leaderSessionID=00000000-0000-0000-0000-000000000000

2022-05-26 14:14:26,765 INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint [] - Web frontend listening at http://localhost:8081.

2022-05-26 14:14:26,816 INFO org.apache.flink.runtime.dispatcher.runner.DefaultDispatcherRunner [] - DefaultDispatcherRunner was granted leadership with leader id 00000000-0000-0000-0000-000000000000. Creating new DispatcherLeaderProcess.

2022-05-26 14:14:26,823 INFO org.apache.flink.runtime.dispatcher.runner.SessionDispatcherLeaderProcess [] - Start SessionDispatcherLeaderProcess.

2022-05-26 14:14:26,825 INFO org.apache.flink.runtime.resourcemanager.ResourceManagerServiceImpl [] - Starting resource manager service.

2022-05-26 14:14:26,827 INFO org.apache.flink.runtime.dispatcher.runner.SessionDispatcherLeaderProcess [] - Recover all persisted job graphs.

2022-05-26 14:14:26,827 INFO org.apache.flink.runtime.resourcemanager.ResourceManagerServiceImpl [] - Resource manager service is granted leadership with session id 00000000-0000-0000-0000-000000000000.

2022-05-26 14:14:26,827 INFO org.apache.flink.runtime.dispatcher.runner.SessionDispatcherLeaderProcess [] - Successfully recovered 0 persisted job graphs.

2022-05-26 14:14:27,481 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcService [] - Starting RPC endpoint for org.apache.flink.runtime.dispatcher.StandaloneDispatcher at akka://flink/user/rpc/dispatcher_0 .

2022-05-26 14:14:27,516 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcService [] - Starting RPC endpoint for org.apache.flink.runtime.resourcemanager.StandaloneResourceManager at akka://flink/user/rpc/resourcemanager_1 .

2022-05-26 14:14:27,543 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager [] - Starting the resource manager.

2022-05-26 14:14:27,927 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager [] - Registering TaskManager with ResourceID 10.136.100.48:35016-a4d337 (akka.tcp://flink@10.136.100.48:35016/user/rpc/taskmanager_0) at ResourceManager

# Receiving a job: CREATED -> RUNNING, SCHEDULED -> DEPLOYING

2022-05-27 16:13:00,098 INFO org.apache.flink.runtime.dispatcher.StandaloneDispatcher [] - Received JobGraph submission 'Streaming WordCount' (f69c1ca4892ecbc08d4247ded254f467).

2022-05-27 16:13:00,100 INFO org.apache.flink.runtime.dispatcher.StandaloneDispatcher [] - Submitting job 'Streaming WordCount' (f69c1ca4892ecbc08d4247ded254f467).

2022-05-27 16:13:00,151 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcService [] - Starting RPC endpoint for org.apache.flink.runtime.jobmaster.JobMaster at akka://flink/user/rpc/jobmanager_2 .

2022-05-27 16:13:00,165 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Initializing job 'Streaming WordCount' (f69c1ca4892ecbc08d4247ded254f467).

2022-05-27 16:13:00,211 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Using restart back off time strategy NoRestartBackoffTimeStrategy for Streaming WordCount (f69c1ca4892ecbc08d4247ded254f467).

2022-05-27 16:13:00,279 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Running initialization on master for job Streaming WordCount (f69c1ca4892ecbc08d4247ded254f467).

2022-05-27 16:13:00,279 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Successfully ran initialization on master in 0 ms.

2022-05-27 16:13:00,321 INFO org.apache.flink.runtime.scheduler.adapter.DefaultExecutionTopology [] - Built 1 pipelined regions in 0 ms

2022-05-27 16:13:00,393 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - No state backend has been configured, using default (HashMap) org.apache.flink.runtime.state.hashmap.HashMapStateBackend@3a91353f

2022-05-27 16:13:00,394 INFO org.apache.flink.runtime.state.StateBackendLoader [] - State backend loader loads the state backend as HashMapStateBackend

2022-05-27 16:13:00,396 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Checkpoint storage is set to 'jobmanager'

2022-05-27 16:13:00,417 INFO org.apache.flink.runtime.checkpoint.CheckpointCoordinator [] - No checkpoint found during restore.

2022-05-27 16:13:00,444 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Using failover strategy org.apache.flink.runtime.executiongraph.failover.flip1.RestartPipelinedRegionFailoverStrategy@6e20b54e for Streaming WordCount (f69c1ca4892ecbc08d4247ded254f467).

2022-05-27 16:13:00,460 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Starting execution of job 'Streaming WordCount' (f69c1ca4892ecbc08d4247ded254f467) under job master id 00000000000000000000000000000000.

2022-05-27 16:13:00,463 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Starting scheduling with scheduling strategy [org.apache.flink.runtime.scheduler.strategy.PipelinedRegionSchedulingStrategy]

2022-05-27 16:13:00,463 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Job Streaming WordCount (f69c1ca4892ecbc08d4247ded254f467) switched from state CREATED to RUNNING.

2022-05-27 16:13:00,468 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Source: Collection Source -> Flat Map (1/1) (c83c41ff9f43c36e7a6aea483e073ec1) switched from CREATED to SCHEDULED.

2022-05-27 16:13:00,468 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Keyed Aggregation -> Sink: Print to Std. Out (1/1) (a602bd7b23ece40a69422f7b36701083) switched from CREATED to SCHEDULED.

2022-05-27 16:13:00,492 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Connecting to ResourceManager akka.tcp://flink@localhost:6123/user/rpc/resourcemanager_*(00000000000000000000000000000000)

2022-05-27 16:13:00,499 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Resolved ResourceManager address, beginning registration

2022-05-27 16:13:00,502 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager [] - Registering job manager 00000000000000000000000000000000@akka.tcp://flink@localhost:6123/user/rpc/jobmanager_2 for job f69c1ca4892ecbc08d4247ded254f467.

2022-05-27 16:13:00,509 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager [] - Registered job manager 00000000000000000000000000000000@akka.tcp://flink@localhost:6123/user/rpc/jobmanager_2 for job f69c1ca4892ecbc08d4247ded254f467.

2022-05-27 16:13:00,512 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - JobManager successfully registered at ResourceManager, leader id: 00000000000000000000000000000000.

2022-05-27 16:13:00,514 INFO org.apache.flink.runtime.resourcemanager.slotmanager.DeclarativeSlotManager [] - Received resource requirements from job f69c1ca4892ecbc08d4247ded254f467: [ResourceRequirement{resourceProfile=ResourceProfile{UNKNOWN}, numberOfRequiredSlots=1}]

2022-05-27 16:13:00,636 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Source: Collection Source -> Flat Map (1/1) (c83c41ff9f43c36e7a6aea483e073ec1) switched from SCHEDULED to DEPLOYING.

2022-05-27 16:13:00,637 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Deploying Source: Collection Source -> Flat Map (1/1) (attempt #0) with attempt id c83c41ff9f43c36e7a6aea483e073ec1 to 10.136.100.48:35016-a4d337 @ vm-v08 (dataPort=59281) with allocation id 3b41f2b6c9f47bf531ac47e91afde9fb

2022-05-27 16:13:00,646 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Keyed Aggregation -> Sink: Print to Std. Out (1/1) (a602bd7b23ece40a69422f7b36701083) switched from SCHEDULED to DEPLOYING.

2022-05-27 16:13:00,646 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Deploying Keyed Aggregation -> Sink: Print to Std. Out (1/1) (attempt #0) with attempt id a602bd7b23ece40a69422f7b36701083 to 10.136.100.48:35016-a4d337 @ vm-v08 (dataPort=59281) with allocation id 3b41f2b6c9f47bf531ac47e91afde9fb

2022-05-27 16:13:00,905 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Keyed Aggregation -> Sink: Print to Std. Out (1/1) (a602bd7b23ece40a69422f7b36701083) switched from DEPLOYING to INITIALIZING.

2022-05-27 16:13:00,908 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Source: Collection Source -> Flat Map (1/1) (c83c41ff9f43c36e7a6aea483e073ec1) switched from DEPLOYING to INITIALIZING.

2022-05-27 16:13:01,166 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Source: Collection Source -> Flat Map (1/1) (c83c41ff9f43c36e7a6aea483e073ec1) switched from INITIALIZING to RUNNING.

2022-05-27 16:13:01,196 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Keyed Aggregation -> Sink: Print to Std. Out (1/1) (a602bd7b23ece40a69422f7b36701083) switched from INITIALIZING to RUNNING.

2022-05-27 16:13:01,223 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Source: Collection Source -> Flat Map (1/1) (c83c41ff9f43c36e7a6aea483e073ec1) switched from RUNNING to FINISHED.

2022-05-27 16:13:01,246 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Keyed Aggregation -> Sink: Print to Std. Out (1/1) (a602bd7b23ece40a69422f7b36701083) switched from RUNNING to FINISHED.

2022-05-27 16:13:01,249 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Job Streaming WordCount (f69c1ca4892ecbc08d4247ded254f467) switched from state RUNNING to FINISHED.

2022-05-27 16:13:01,249 INFO org.apache.flink.runtime.checkpoint.CheckpointCoordinator [] - Stopping checkpoint coordinator for job f69c1ca4892ecbc08d4247ded254f467.

2022-05-27 16:13:01,250 INFO org.apache.flink.runtime.resourcemanager.slotmanager.DeclarativeSlotManager [] - Clearing resource requirements of job f69c1ca4892ecbc08d4247ded254f467

2022-05-27 16:13:01,279 INFO org.apache.flink.runtime.dispatcher.StandaloneDispatcher [] - Job f69c1ca4892ecbc08d4247ded254f467 reached terminal state FINISHED.

2022-05-27 16:13:01,314 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Stopping the JobMaster for job 'Streaming WordCount' (f69c1ca4892ecbc08d4247ded254f467).

2022-05-27 16:13:01,320 INFO org.apache.flink.runtime.checkpoint.StandaloneCompletedCheckpointStore [] - Shutting down

2022-05-27 16:13:01,322 INFO org.apache.flink.runtime.jobmaster.slotpool.DefaultDeclarativeSlotPool [] - Releasing slot [3b41f2b6c9f47bf531ac47e91afde9fb].

2022-05-27 16:13:01,328 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Close ResourceManager connection 4a2508526d0621625a55daa90f37e499: Stopping JobMaster for job 'Streaming WordCount' (f69c1ca4892ecbc08d4247ded254f467).

2022-05-27 16:13:01,330 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager [] - Disconnect job manager 00000000000000000000000000000000@akka.tcp://flink@localhost:6123/user/rpc/jobmanager_2 for job f69c1ca4892ecbc08d4247ded254f467 from the resource manager.
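The JobManager log above records the job moving from CREATED to RUNNING to FINISHED, and each task moving through CREATED, SCHEDULED, DEPLOYING, INITIALIZING, RUNNING and FINISHED. When a log is longer than this one, it can help to pull out only those transitions. The following Python sketch does that with a regular expression written against the line format shown above. The pattern and the `extract_transitions` helper are assumptions of this example, not part of Flink, and other Flink versions may word these lines differently.

```python
import re

# Matches "<subject> switched from [state ]OLD to NEW." on ExecutionGraph lines.
TRANSITION = re.compile(
    r"ExecutionGraph \[\] - (?P<subject>.+?) switched from (?:state )?"
    r"(?P<old>[A-Z]+) to (?P<new>[A-Z]+)\."
)


def extract_transitions(lines):
    """Return (subject, old_state, new_state) for every state transition line."""
    result = []
    for line in lines:
        match = TRANSITION.search(line)
        if match:
            result.append((match["subject"], match["old"], match["new"]))
    return result


# Three lines copied from the log above; the last one is not a transition.
sample = [
    "2022-05-27 16:13:00,463 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Job Streaming WordCount (f69c1ca4892ecbc08d4247ded254f467) switched from state CREATED to RUNNING.",
    "2022-05-27 16:13:00,468 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Source: Collection Source -> Flat Map (1/1) (c83c41ff9f43c36e7a6aea483e073ec1) switched from CREATED to SCHEDULED.",
    "2022-05-27 16:13:00,499 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Resolved ResourceManager address, beginning registration",
]

for subject, old, new in extract_transitions(sample):
    print(f"{old:>12} -> {new:<12} {subject}")
```

To scan a whole file, pass an open file object instead of the sample list, since iterating over it yields one line at a time.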

# Task Manager Log

vim flink-root-taskexecutor-0-vm01.log

# Startup

INFO [] - Final TaskExecutor Memory configuration:

INFO [] - Total Process Memory: 1.688gb (1811939328 bytes)

INFO [] - Total Flink Memory: 1.250gb (1342177280 bytes)

INFO [] - Total JVM Heap Memory: 512.000mb (536870902 bytes)

INFO [] - Framework: 128.000mb (134217728 bytes)

INFO [] - Task: 384.000mb (402653174 bytes)

INFO [] - Total Off-heap Memory: 768.000mb (805306378 bytes)

INFO [] - Managed: 512.000mb (536870920 bytes)

INFO [] - Total JVM Direct Memory: 256.000mb (268435458 bytes)

INFO [] - Framework: 128.000mb (134217728 bytes)

INFO [] - Task: 0 bytes

INFO [] - Network: 128.000mb (134217730 bytes)

INFO [] - JVM Metaspace: 256.000mb (268435456 bytes)

INFO [] - JVM Overhead: 192.000mb (201326592 bytes)
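The memory lines above are nested, although the indentation is not visible here. The two Framework/Task pairs belong to the JVM heap and to JVM direct memory respectively, and the totals are sums of their components. This nesting is inferred from the byte counts, which add up exactly, as the Python check below shows. The variable names are chosen for this sketch and are not Flink configuration keys.

```python
# Byte values copied from the TaskExecutor log lines above.
framework_heap = 134217728
task_heap = 402653174
managed = 536870920
framework_off_heap = 134217728
task_off_heap = 0
network = 134217730
jvm_metaspace = 268435456
jvm_overhead = 201326592

# Totals as reported in the log.
reported = {
    "Total JVM Heap Memory": 536870902,
    "Total JVM Direct Memory": 268435458,
    "Total Off-heap Memory": 805306378,
    "Total Flink Memory": 1342177280,
    "Total Process Memory": 1811939328,
}

# Totals recomputed from the components, from the innermost level outwards.
jvm_heap = framework_heap + task_heap
jvm_direct = framework_off_heap + task_off_heap + network
off_heap = managed + jvm_direct
total_flink = jvm_heap + off_heap
total_process = total_flink + jvm_metaspace + jvm_overhead

computed = {
    "Total JVM Heap Memory": jvm_heap,
    "Total JVM Direct Memory": jvm_direct,
    "Total Off-heap Memory": off_heap,
    "Total Flink Memory": total_flink,
    "Total Process Memory": total_process,
}

for name, value in computed.items():
    status = "ok" if value == reported[name] else "MISMATCH"
    print(f"{name}: {value} bytes ({status})")
```

In short, Total Process Memory is Total Flink Memory plus JVM Metaspace and JVM Overhead, and Total Flink Memory is the JVM heap plus all off-heap memory (managed and direct).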

2022-05-26 14:14:23,738 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner [] - --------------------------------------------------------------------------------