回目录 《kafka》

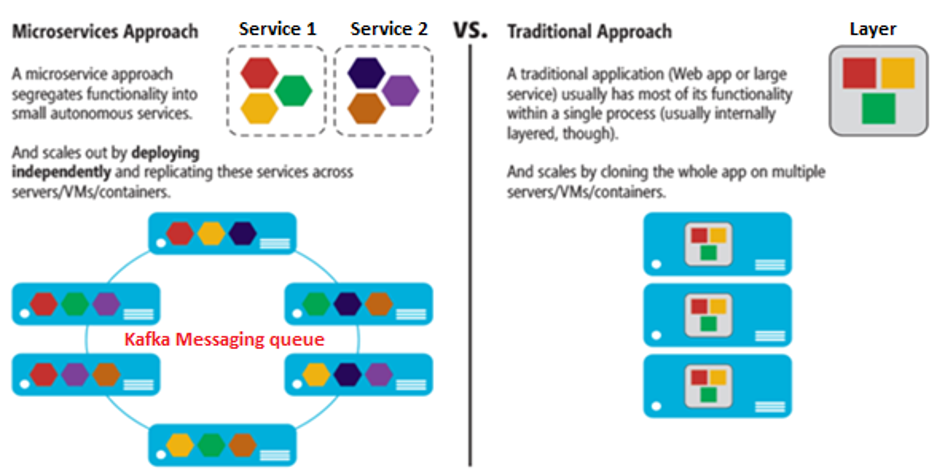

cloud DMS PAAS VS original opensource KAFKA? EXAMPLE: huawei DMS (opens new window)

# 1. Basic concepts

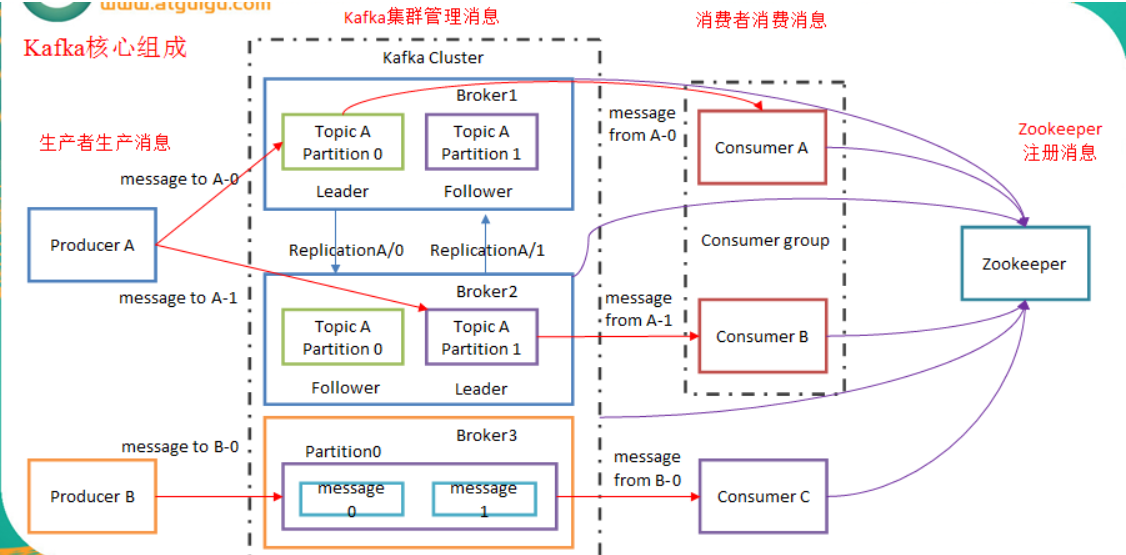

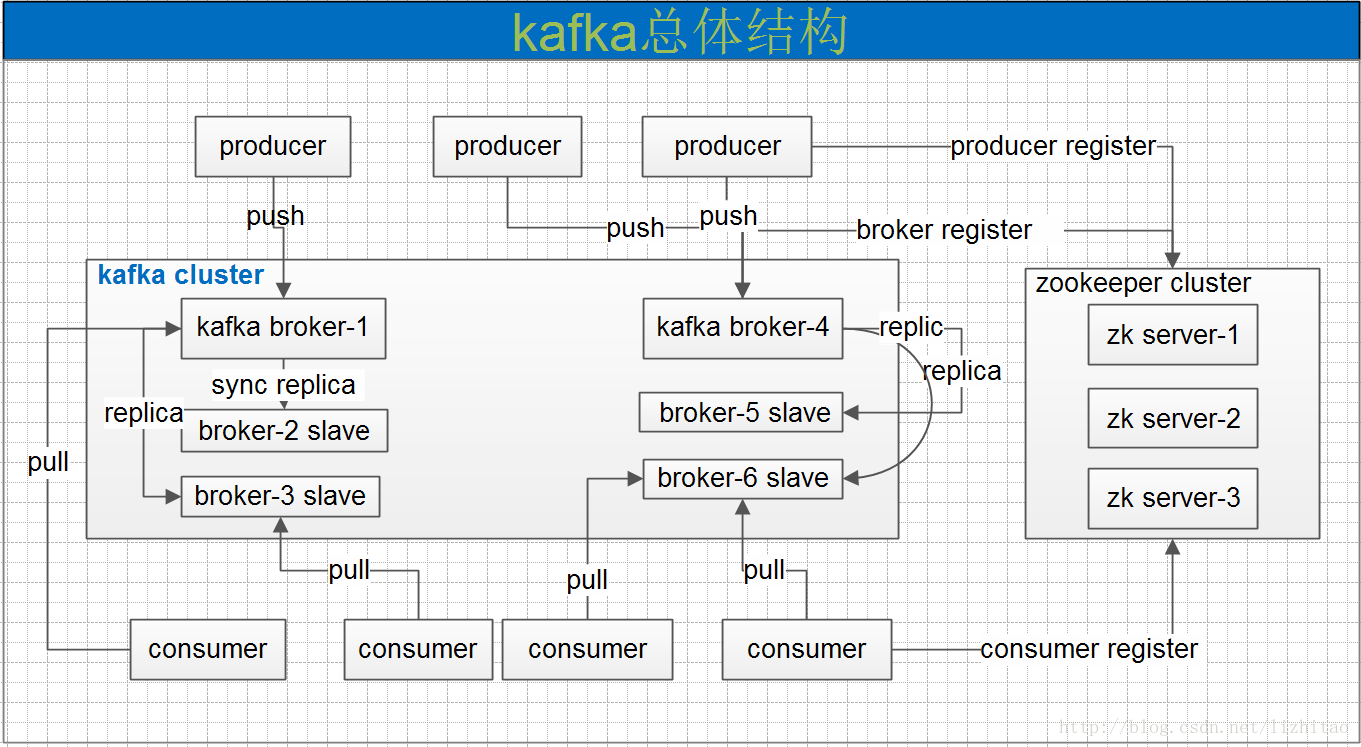

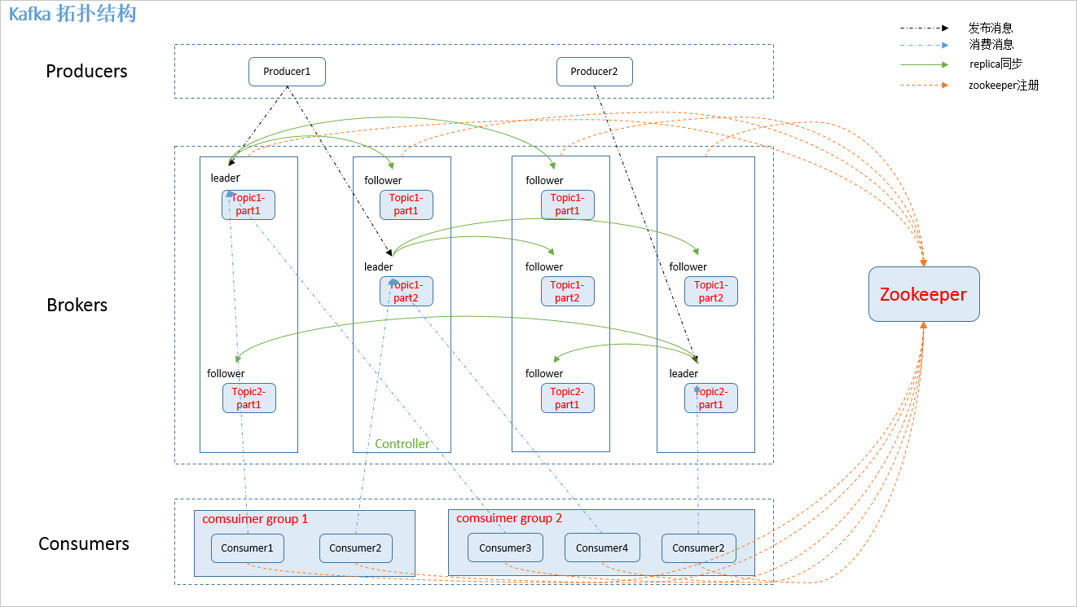

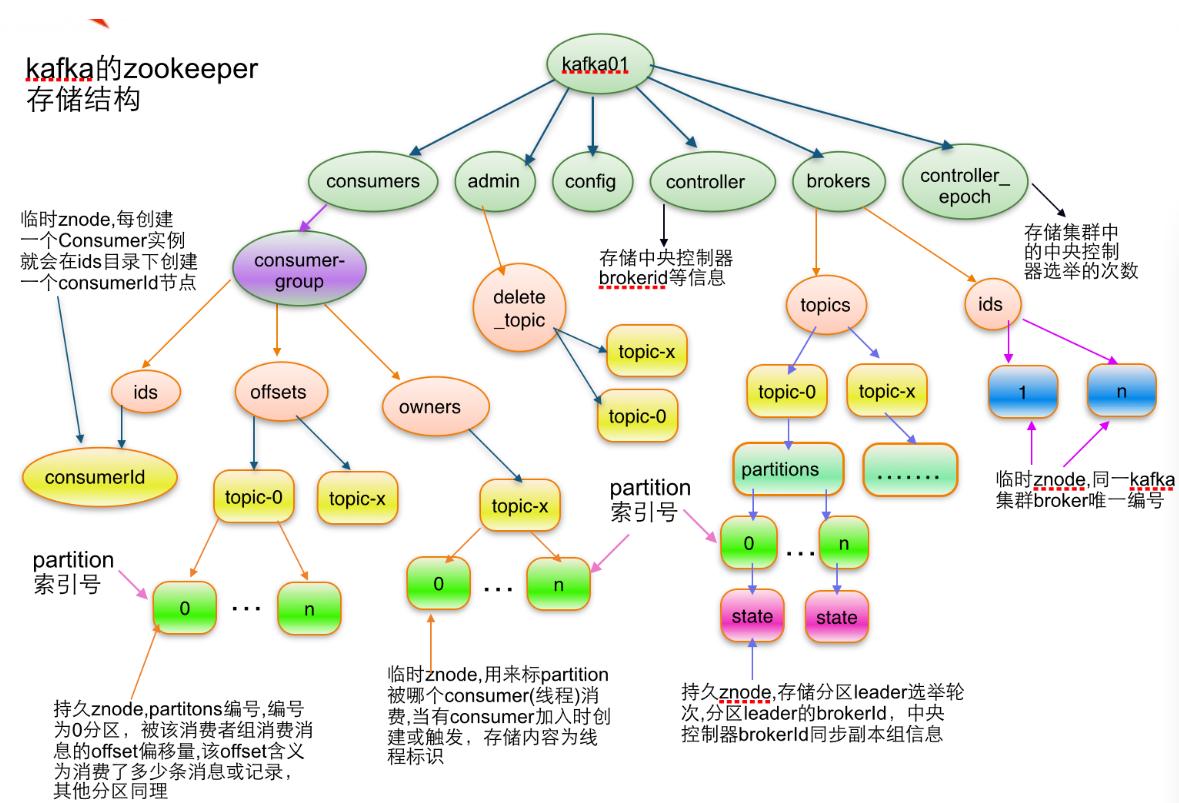

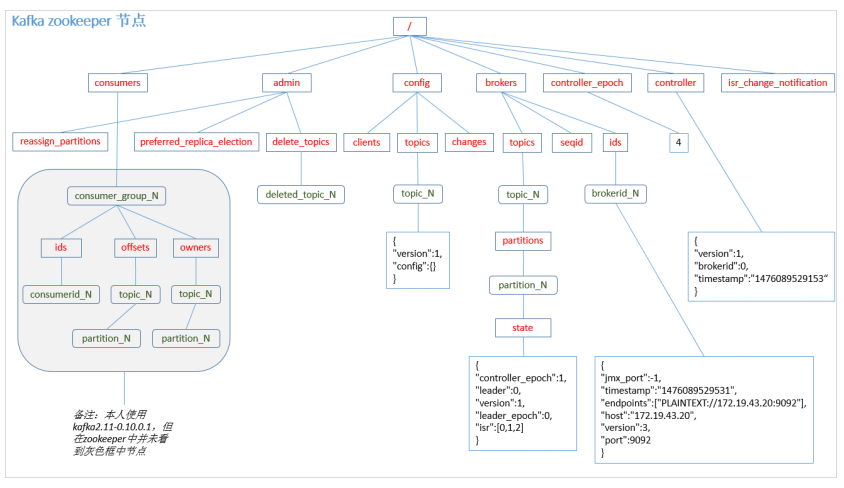

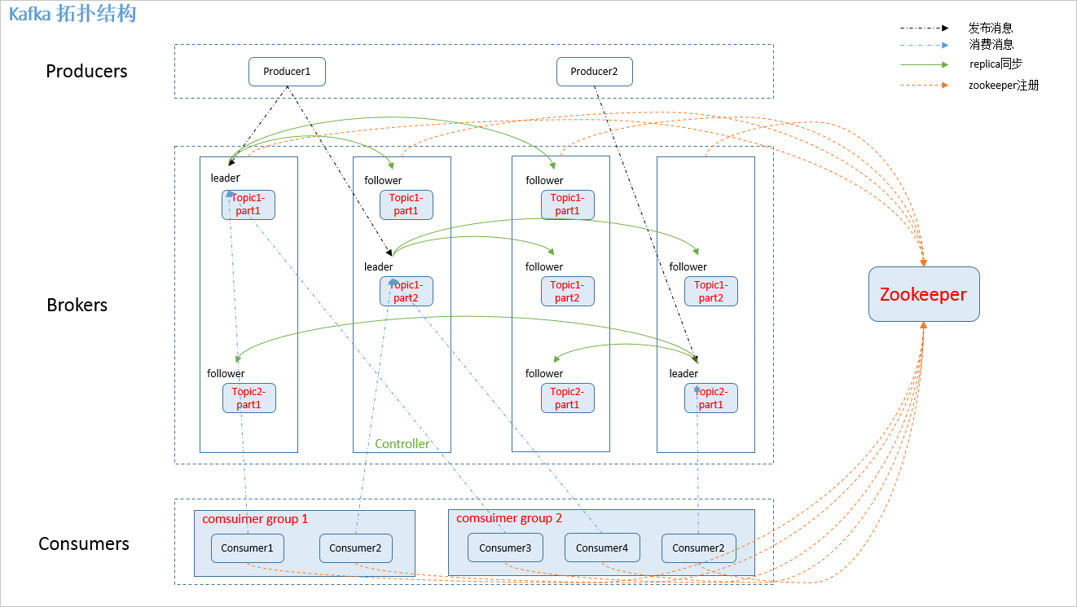

zookeeper: kafka 通过 zookeeper 来存储集群的 meta 信息。

broker: kafka 集群中包含的服务器。Each server acts as a leader for some of its partitions and a follower for others so load is well balanced within the cluster.

Kafka service = kafka broker, kafka cluster=multiple instances of kafka broker

controller: kafka 集群中的其中一个服务器,用来进行 leader election 以及 各种 failover。

producer: 消息生产者,发布消息到 kafka 集群的终端或服务。

consumer: 从 kafka 集群中消费消息的终端或服务。

Topics & logs (Logical concept): 每条发布到 kafka 集群的消息属于的类别,即 kafka 是面向 topic 的。

- LEO:: log end offset offset+1

- ISR:: in-sync replicas

- internal topic:

__consumer_offsets transaction_offsets

key: <consumer_group>,<topic>,<partition> value: <offset>,<partition_leader_epoch>,<metadata>,<timestamp>

Partition (Physical concept): 是物理上的概念,每个 topic 包含一个或多个 partition。kafka 分配的单位是 partition。

Ordering (global order == one partition only)

Each partition is a totally ordered log, but there is no global ordering between partitions (other than perhaps some wall-clock time you might include in your messages). The assignment of the messages to a particular partition is controllable by the writer, with most users choosing to partition by some kind of key (e.g. user id). Partitioning allows log appends to occur without co-ordination between shards and allows the throughput of the system to scale linearly with the Kafka cluster size.

replica:partition 的副本,保障 partition 的高可用。

high watermark: indicated the offset of messages that are fully replicated, while the end-of-log offset might be larger if there are newly appended records to the leader partition which are not replicated yet.

leader:replica 中的一个角色, producer 和 consumer 只跟 leader 交互。(In Kafka 2.3 and older, you can only consume from the leader -- this is by design. Replication is for fault-tolerance only.Since Kafka 2.4, it is possible to configure consumers to read from the closest replica. This may help improve latency, and also decrease network costs if using the cloud.)

Leader = topic leader = leader of replicas

follower:replica 中的一个角色,从 leader 中复制数据。

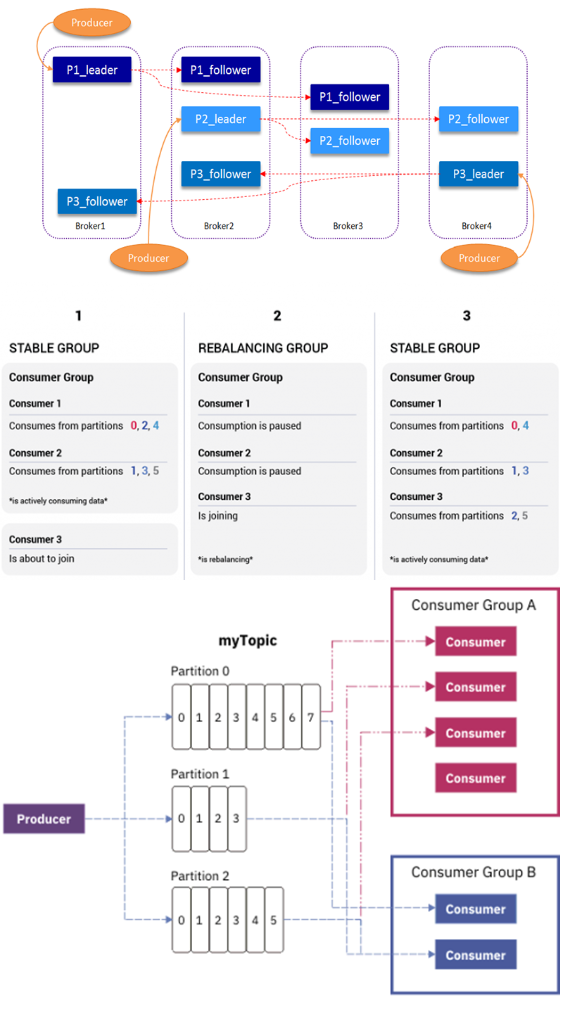

Consumer group: high-level consumer API 中,每个 consumer 都属于一个 consumer group,每条消息只能被 consumer group 中的一个 Consumer 消费,但可以被多个 consumer group 消费。

- consumer group leader:

is one of the consumer in a consumer group. - consumer coordinator(client side): each kafkaconsumer instance has a private member of consumer coordinator

- group coordinator(server side): is nothing but one of the brokers which receives heartbeats (or polling for messages) from all consumers of a consumer group. Every consumer group has a group coordinator. If a consumer stops sending heartbeats, the coordinator will trigger a rebalance.

- consumer group leader:

subscribe mode VS assign mode

先来看一段话

By having a notion of parallelism—the partition—within the topics, Kafka is able to provide both ordering guarantees and load balancing over a pool of consumer processes. This is achieved by assigning the partitions in the topic to the consumers in the consumer group so that **each partition is consumed by exactly one consumer in the group. **By doing this we ensure that the consumer is the only reader of that partition and consumes the data in order. Since there are many partitions this still balances the load over many consumer instances. **Note however that there cannot be more consumer instances in a consumer group than partitions. **

If we add more consumers to a single group with a single topic than we have partitions, some of the consumers will be idle and get no messages at all.

注意到这句话:however that there cannot be more consumer instances in a consumer group than partitions.

实际上这个是指的是assign mode下,同一个consumer group不能多个consumer来assign到同一个partition:

Properties props = new Properties(); props.put(ConsumerConfig.GROUP_ID_CONFIG, "MyConsumerGroup"); props.put("enable.auto.commit", "false"); consumer = new KafkaConsumer<>(props); TopicPartition partition0 = new TopicPartition("mytopic", 0); consumer.assign(Arrays.asList(partition0)); ConsumerRecords<Integer, String> records = consumer.poll(1000);如果一个consumer group启动多个consumer都选择这种assign模式,那么就会有问题,因为

It is important to recall that Kafka keeps one offset per [consumer-group, topic, partition]. That is the reason.

就是kafka自动维护的__consumer_offset是按照[consumer-group, topic, partition]来的[但是这里已经置为false了,所以应该没有问题,如果是true就有问题了,多个consumer读写同一个[consumer-group, topic, partition]就会有冲突]

而如果是使用subscribe mode,就会自动进行rebalance,如果同一个consumer group中的instance多于partition,那么没有问题,大不了consumer就idle或standby而已

Overview:

zookeeper 存储结构

图中不同topic的不同的partition可能位于同一个kafka broker或者不同的kafka broker,具体在哪里,完全可以去每个kafka节点下面寻找,路径:

/kafka/kafka-logs/<TOPIC-PARTITION>

# 2. Quick Start 安装配置

Quick start https://kafka.apache.org/quickstart

# 2.1 安装 install kafka

------------------------------------------------------------------------

--- Creating a User for Kafka

------------------------------------------------------------------------

sudo useradd kafka -m

sudo passwd kafka

for ubuntu: sudo adduser kafka sudo

for centos: sudo usermod -aG wheel kafka

su -l kafka

------------------------------------------------------------------------

-- Downloading and Extracting the Kafka Binaries

------------------------------------------------------------------------

mkdir ~/Downloads

curl "https://www.apache.org/dist/kafka/2.1.1/kafka_2.11-2.1.1.tgz" -o ~/Downloads/kafka.tgz

mkdir ~/kafka && cd ~/kafka

tar -xvzf ~/Downloads/kafka.tgz --strip 1 (We specify the --strip 1 flag to ensure that the archive’s contents are extracted in ~/kafka/ itself and not in another directory (such as ~/kafka/kafka_2.11-2.1.1/) inside of it)

------------------------------------------------------------------------

--- Configuring the Kafka Server

------------------------------------------------------------------------

vim ~/kafka/config/server.properties:

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=0

port=9092

host.name=X.X.X.48

advertised.host.name=X.X.X.48

advertised.port=9092

delete.topic.enable = true

log.retention.hours=168

log.dirs=/opt/kafka_2.12-2.2.0/kafka-logs

#外置zookeeper

zookeeper.connect=1.1.1.1:2181,1.1.1.2:2181,1.1.1.3:2181

------------------------------------------------------------------------

--- Option 1: Creating Systemd Unit Files and Starting the Kafka Server

------------------------------------------------------------------------

sudo vim /etc/systemd/system/zookeeper.service

[Unit]

Requires=network.target remote-fs.target

After=network.target remote-fs.target

[Service]

Type=simple

User=kafka

ExecStart=/home/kafka/kafka/bin/zookeeper-server-start.sh /home/kafka/kafka/config/zookeeper.properties

ExecStop=/home/kafka/kafka/bin/zookeeper-server-stop.sh

Restart=on-abnormal

[Install]

WantedBy=multi-user.target

The [Unit] section specifies that Zookeeper requires networking and the filesystem to be ready before it can start.

The [Service] section specifies that systemd should use the zookeeper-server-start.sh and zookeeper-server-stop.sh shell files for starting and stopping the service. It also specifies that Zookeeper should be restarted automatically if it exits abnormally.

sudo vim /etc/systemd/system/kafka.service

[Unit]

Requires=zookeeper.service

After=zookeeper.service

[Service]

Type=simple

User=kafka

ExecStart=/bin/sh -c '/home/kafka/kafka/bin/kafka-server-start.sh /home/kafka/kafka/config/server.properties > /home/kafka/kafka/kafka.log 2>&1'

ExecStop=/home/kafka/kafka/bin/kafka-server-stop.sh

Restart=on-abnormal

[Install]

WantedBy=multi-user.target

The [Unit] section specifies that this unit file depends on zookeeper.service. This will ensure that zookeeper gets started automatically when the kafka service starts.

The [Service] section specifies that systemd should use the kafka-server-start.sh and kafka-server-stop.sh shell files for starting and stopping the service. It also specifies that Kafka should be restarted automatically if it exits abnormally.

sudo systemctl start kafka

sudo journalctl -u kafka

sudo systemctl enable kafka

------------------------------------------------------------------------

--- Option 2: 编写启动脚本

------------------------------------------------------------------------

readonly PROGNAME=$(basename $0)

readonly PROGDIR=$(readlink -m $(dirname $0))

# source env

L_INVOCATION_DIR="$(pwd)"

L_CMD_DIR="/opt/scripts"

if [ "${L_INVOCATION_DIR}" != "${L_CMD_DIR}" ]; then

pushd ${L_CMD_DIR} &> /dev/null

fi

#source ../set_env.sh

#--------------- Function Definition ---------------#

showUsage() {

echo "Usage:"

echo "$0 kafka start|kill"

echo ""

echo "--start or -b: Start kafka"

echo "--kill or -k: Stop kafka"

}

#--------------- Main ---------------#

# Parse arguments

while [ "${1:0:1}" == "-" ]; do

case $1 in

--start)

L_FLAG="B"

;;

--kill)

L_FLAG="K"

;;

--status)

L_FLAG="S"

;;

*)

echo "Unknown option: $1"

echo ""

showUsage

echo ""

exit 1

;;

esac

shift

done

L_RETURN_FLAG=0 # 0 for success while 99 for failure

KAFKA_HOME=/opt/kafka_2.12-2.2.0/bin

ZK_CLUSTER=$HOST1:2181,$HOST2:2181,$HOST3:2181

pushd ${KAFKA_HOME} &>/dev/null

if [ "$L_FLAG" == "B" ]; then

echo "Starting kafka service..."

./kafka-server-start.sh -daemon ../config/server.properties

elif

echo "Stopping kafka service..."

./kafka-server-stop.sh -daemon ../config/server.properties

elif [ "$L_FLAG" == "S" ]; then

echo "Checking kafka status..."

arr=(${ZK_CLUSTER//","/ })

echo "${arr[@]}"

for ZK_NODE in "${arr[@]}";

do

echo "ZK_NODE: $ZK_NODE"

./zookeeper-shell.sh $ZK_NODE ls /brokers/ids

exit_code=$?

echo $exit_code

if [ "$exit_code" = "0" ]; then

exit 1

fi

done

fi

exit $L_RETURN_FLAG

注意:

如果是使用云上的DMS,zookeeper是不开放的,所以查询节点可以换用:

kafka/bin/kafka-broker-api-versions.sh --bootstrap-server "xxxx" | awk '/id/{print $1}'

------------------------------------------------------------------------

--- Restricting the Kafka User as a security precaution.

------------------------------------------------------------------------

This step in the prerequisite disables sudo access for the kafka user

for ubuntu:

sudo deluser kafka sudo

for centos:

sudo gpasswd -d kafka wheel

sudo passwd kafka -l (对应unlock:sudo passwd kafka -u)

sudo su - kafka

# 2.2 Config

https://kafka.apache.org/26/documentation/

https://docs.confluent.io/platform/current/installation/configuration

# 2.2.1 Broker/Server Config

# 通用配置

############################# Server Basics #############################

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=0

############################# Socket Server Settings #############################

port=9092

host.name=X.X.X.48

advertised.host.name=X.X.X.48

advertised.port=9092

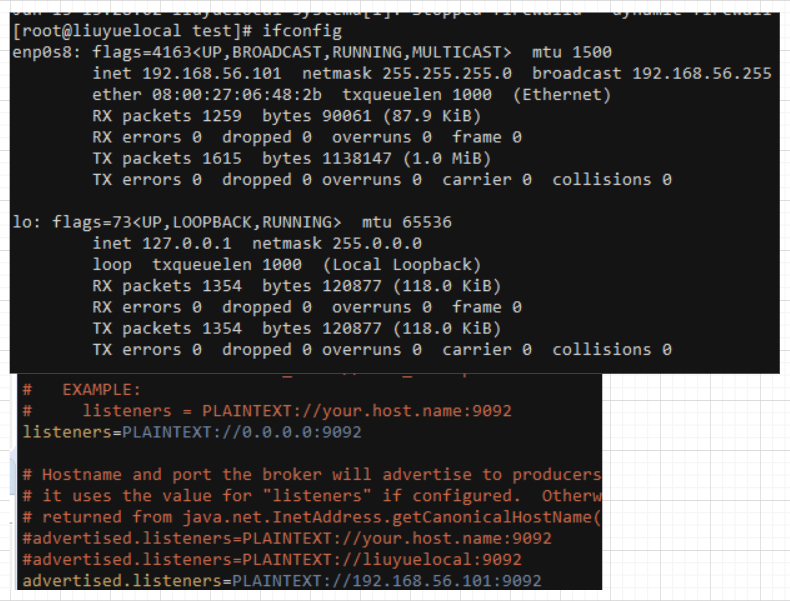

listeners = PLAINTEXT://your.host.name:9092

#advertised.listeners=PLAINTEXT://your.host.name:9092 //This is the metadata that’s passed back to clients.

listener.security.protocol.map=PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

#Kafka brokers communicate between themselves, usually on the internal network (e.g., Docker network, AWS VPC, etc.). To define which listener to use, specify:

inter.broker.listener.name //https://cwiki.apache.org/confluence/display/KAFKA/KIP-103%3A+Separation+of+Internal+and+External+traffic

You need to set advertised.listeners (or KAFKA_ADVERTISED_LISTENERS if you’re using Docker images) to the external address (host/IP) so that clients can correctly connect to it. Otherwise, they’ll try to connect to the internal host address—and if that’s not reachable, then problems ensue.

https://stackoverflow.com/questions/42998859/kafka-server-configuration-listeners-vs-advertised-listeners

https://cwiki.apache.org/confluence/display/KAFKA/KIP-103%3A+Separation+of+Internal+and+External+traffic

https://cwiki.apache.org/confluence/display/KAFKA/KIP-291%3A+Separating+controller+connections+and+requests+from+the+data+plane

############################# Group Coordinator Settings #############################

# The following configuration specifies the time, in milliseconds, that the GroupCoordinator will delay the initial consumer rebalance.

# The rebalance will be further delayed by the value of group.initial.rebalance.delay.ms as new members join the group, up to a maximum of max.poll.interval.ms.

# The default value for this is 3 seconds.

# We override this to 0 here as it makes for a better out-of-the-box experience for development and testing.

# However, in production environments the default value of 3 seconds is more suitable as this will help to avoid unnecessary, and potentially expensive, rebalances during application startup.

group.initial.rebalance.delay.ms=0

还看到配置 scheduled.rebalance.max.delay.ms,

https://medium.com/streamthoughts/apache-kafka-rebalance-protocol-or-the-magic-behind-your-streams-applications-e94baf68e4f2

但是这好像是confluence提供的产品,并不是kafka默认的

############################# Log Retention Policy #############################

# The minimum age of a log file to be eligible for deletion due to age

log.retention.hours=336

# The maximum size of a log segment file. When this size is reached a new log segment will be created.

#log.segment.bytes=1073741824

log.segment.bytes=2147483647

https://stackoverflow.com/questions/65507232/kafka-log-segment-bytes-vs-log-retention-hours

# 配置 external zookeeper

kafka配置:

# Zookeeper connection string (see zookeeper docs for details).

# This is a comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".

# You can also append an optional chroot string to the urls to specify the

# root directory for all kafka znodes.

zookeeper.connect=1.1.1.1:2181,1.1.1.2:2181,1.1.1.3:2181

# Timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=6000

zookeeper配置:

This example is for a 3 node ensemble:

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/zookeeper-3.4.8/zkdata

dataLogDir=/zookeeper-3.4.8/logs

# the port at which the clients will connect

clientPort=2181

server.1=1.1.1.1:2888:3888

server.2=1.1.1.2:2888:3888

server.3=1.1.1.3:2888:3888

SERVER_JVMFLAGS=-Xmx1024m'

# listener

关于host

Kafka Listeners – Explained (opens new window)

测试 listener工具:

kafkacat:

https://github.com/edenhill/kafkacat

https://docs.confluent.io/platform/current/app-development/kafkacat-usage.html

kafkacat -b kafka0:9092 -Lpython scripts

https://github.com/lyhistory/kafka-listeners/blob/master/python/python_kafka_test_client.py

https://www.confluent.io/blog/kafka-client-cannot-connect-to-broker-on-aws-on-docker-etc/

nc

nc -vz 1.1.1.1 9092

# retention / delete

retention.ms 参数指定消息在 topic 中保留的时间,单位是毫秒。在指定的时间过去后,Kafka 会将该 topic 中旧的消息删除

delete.retention.ms 参数是在消息被删除后,要等待多长时间才能在磁盘上删除该消息的文件

cleanup.policy A string that is either "delete" or "compact" or both. This string designates the retention policy to use on old log segments. The default policy ("delete") will discard old segments when their retention time or size limit has been reached. The "compact" setting will enable log compaction on the topic.

Type: list Default: delete Valid Values: [compact, delete] Server Default Property: log.cleanup.policy Importance: medium

retention.ms This configuration controls the maximum time we will retain a log before we will discard old log segments to free up space if we are using the "delete" retention policy. This represents an SLA on how soon consumers must read their data. If set to -1, no time limit is applied.

Type: long Default: 604800000 (7 days) Valid Values: [-1,...] Server Default Property: log.retention.ms Importance: medium

delete.retention.ms The amount of time to retain delete tombstone markers for log compacted topics. This setting also gives a bound on the time in which a consumer must complete a read if they begin from offset 0 to ensure that they get a valid snapshot of the final stage (otherwise delete tombstones may be collected before they complete their scan).

Type: long Default: 86400000 (1 day) Valid Values: [0,...] Server Default Property: log.cleaner.delete.retention.ms Importance: medium

kafka至少会保留1个工作segment保存消息。消息量超过单个文件存储大小就会新建segment,比如消息量为2.6GB, 就会建立3个segment。kafka会定时扫描非工作segment,将该文件时间和设置的topic过期时间进行对比,如果发现过期就会将该segment文件(具体包括一个log文件和两个index文件)打上.deleted 的标记: kafka-logs/Topic-1/XXXXX.log.deleted 最后kafka中会有专门的删除日志定时任务过来扫描,发现.deleted文件就会将其从磁盘上删除,释放磁盘空间,至此kafka过期消息删除完成。

log.retention.ms: log.retention.ms parameter (default to 1 week). If set to -1, no time limit is applied.

log.retention.bytes: Its default value is -1, which allows for infinite retention. This means that if you have a topic with 8 partitions, and log.retention.bytes is set to 1 GB, the amount of data retained for the topic will be 8 GB at most. If you have specified both log.retention.bytes and log.retention.ms, messages may be removed when either criterion is met.

log.segment.bytes and log.segment.ms: As messages are produced to the Kafka broker, they are appended to the current log segment for the partition. Once the log segment has reached the size specified by the log.segment.bytes parameter (default 1 GB), the log segment is closed and a new one is opened. Only once a log segment has been closed, it can be considered for expiration (by log.retention.ms or log.retention.bytes).

Another way to control when log segments are closed is by using the log.segment.ms parameter, which specifies the amount of time after which a log segment should be closed. Kafka will close a log segment either when the size limit is reached or when the time limit is reached, whichever comes first.

A smaller log-segment size means that files must be closed and allocated more often, which reduces the overall efficiency of disk writes. Adjusting the size of the log segment can be important if topics have a low produce rate. For example, if a topic receives only 100 megabytes per day of messages, and log.segment.bytes is set to the default, it will take 10 days to fill one segment. As messages cannot be expired until the log segment is closed, if log.retention.ms is set to 1 week, they will actually be up to 17 days of messages retained until the closed segment expires. This is because once the log segment is closed with the current 10 days of messages, that log segment must be retained 7 days before it expires based on the time policy.

log.retention.check.interval.ms:default 5 minutes. So the broker log-segments are checked every 5 minutes to see if they can be deleted according to the retention policies.

topic1 configuration had retention policy set (retention.ms=60000), so if there was at least one existing message in an active segment of topic1, that segment would get closed and deleted if it was idle for long enough. Since log.retention.check.interval.ms is broker configuration, it's not affected by changes on the topic. Also retention.ms has to pass after the last message is produced to the segment. So after the last message is produced to that segment, segment will be deleted in not less than retention.ms milliseconds and not more than retention.ms+log.retention.check.interval.ms.

So the "segment of just 35 bytes, which contained just one message, was deleted after the minute (maybe a little more)" happened because retention check by chance happened almost immediately after the message was produced to that segment. Broker then had just to wait 60 seconds to be sure no new message will be produced to that segment (in which case deletion would't happen) and since there was none, it deleted the segment

https://stackoverflow.com/questions/41048041/kafka-deletes-segments-even-before-segment-size-is-reached

# 复制因子 replica factor 详解

很重要,对于普通的topic replica factor来说,replica多一些没有问题,但是对internal topic要特别注意,尤其是对于 __transaction_state来说,如果min.isr设置跟replication.factor设置一样,那么任何一个kafka节点down掉,都会造成无法写入kafka(transactional producer写入会报错 NotEnoughReplicasException)

https://stackoverflow.com/questions/47483016/recommended-settings-for-kafka-internal-topics-after-upgrade-to-1-0

############################# Internal Topic Settings #############################

# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"

# For anything other than development testing, a value greater than 1 is recommended for to ensure availability such as 3.

offsets.topic.num.partitions = 50 (default)

offsets.topic.replication.factor=3

transaction.state.log.replication.factor=3

transaction.state.log.min.isr=2

kafka-topics.sh -describe --bootstrap-server ip:9092 --topic __consumer_offsets

kafka-topics.sh -describe --bootstrap-server ip:9092 --topic __transaction_state

说明:

min.insync.replicas(default value=1) https://accu.org/journals/overload/28/159/kozlovski/ 同时控制external topic 以及internal topic

__consumer_offsets和__transaction_state,transaction.state.*只控制

__transaction_state(transaction.state.log.min.isr overriden min.insync.replicas),offsets.topic.replication.factor控制offsets topic也就是

__consumer_offsets,必须跟broker个数一致(小于等于,默认值为3,如果是两个节点就不行了,所以不要轻易使用默认值),否则无法启动default.replication.factor控制external topic(有时候称为automatically created topics,手动或自动创建auto.create.topics.enable默认是true)

场景:

if living/avaliable brokers < default.replication.factor 无法创建topic,报错:InvalidReplicationFactorException

if offsets.topic.replication.factor > brokers数量,~~kafka client无法启动(无法Discover group coordinator)~~应该是kafka sever无法正常创建internal topic consumer_offset, kafka server报错:

ERROR [KafkaApi-0] Number of alive brokers '2' does not meet the required replication factor '3' for the offsets topic (configured via 'offsets.topic.replication.factor'). This error can be ignored if the cluster is starting up and not all brokers are up yet. (kafka.server.KafkaApis)if default.replication.factor==节点数,比如: default.replication.factor=3 这样挂掉任何一个节点client都会报错:

2021-06-08 17:06:01.892 ^[[33m WARN^[[m ^[[35m23610GG^[[m [TEST-MANAGER] ^[[36mk.c.NetworkClient$DefaultMetadataUpdater^[[m : [Consumer clientId=consumer-1, groupId=TEST-SZL] 1 partitions have leader brokers without a matching listener, including [T-TEST-1]if (live isr 活着的节点中并且是isr的节点数) < transaction.state.log.min.isr:

[2021-06-09 09:31:14,285] ERROR [ReplicaManager broker=0] Error processing append operation on partition __transaction_state-28 (kafka.server.ReplicaManager) org.apache.kafka.common.errors.NotEnoughReplicasException: The size of the current ISR Set(0) is insufficient to satisfy the min.isr requirement of 2 for partition __transaction_state-28注意如果不停掉kafka producer程序,上述日志会快速的在kafka/logs/server.log 中刷入,潜在可能会造成磁盘问题

if living/avaliable brokers <min.insync.replicas && producer.properties.acks=all: producer报错 NotEnoughReplicasException

if (live isr 活着的节点中并且是isr的节点数) <min.insync.replicas of

__consumer_offsets:kafka consumer client discover group之后无法join group,在revoke之后,rejoining group停顿几分钟后狂刷日志:

2021-06-09 10:17:23.076 ^[[32m INFO^[[m ^[[35m26210GG^[[m [TEST-MANAGER] ^[[36mo.a.k.c.c.i.AbstractCoordinator^[[m : [Consumer clientId=consumer-1, groupId=TEST-REALTIME-SZL] Group coordinator XXXX:9092 (id: 2147483647 rack: null) is unavailable or invalid, will attempt rediscovery 2021-06-09 10:17:23.186 ^[[32m INFO^[[m ^[[35m26210GG^[[m [TEST-MANAGER] ^[[36mordinator$FindCoordinatorResponseHandler^[[m : [Consumer clientId=consumer-1, groupId=TEST-REALTIME-SZL] Discovered group coordinator XXXX:9092 (id: 2147483647 rack: null) 2021-06-09 10:17:23.187 ^[[32m INFO^[[m ^[[35m26210GG^[[m [TEST-MANAGER] ^[[36mo.a.k.c.c.i.AbstractCoordinator^[[m : [Consumer clientId=consumer-1, groupId=TEST-REALTIME-SZL] Group coordinator XXXX8:9092 (id: 2147483647 rack: null) is unavailable or invalid, will attempt rediscovery 2021-06-09 10:17:23.288 ^[[32m INFO^[[m ^[[35m26210GG^[[m [TEST-MANAGER] ^[[36mordinator$FindCoordinatorResponseHandler^[[m : [Consumer clientId=consumer-1, groupId=TEST-REALTIME-SZL] Discovered group coordinator XXXX:9092 (id: 2147483647 rack: null) 2021-06-09 10:17:23.289 ^[[32m INFO^[[m ^[[35m26210GG^[[m [TEST-MANAGER] ^[[36mo.a.k.c.c.i.AbstractCoordinator^[[m : [Consumer clientId=consumer-1, groupId=TEST-REALTIME-SZL] (Re-)joining group同时kafka server端狂刷日志:

[2021-06-09 10:18:43,146] INFO [GroupCoordinator 0]: Preparing to rebalance group TEST-REALTIME-SZL in state PreparingRebalance with old generation 393 (__consumer_offsets-49) (reaso n: error when storing group assignment during SyncGroup (member: consumer-1-0c90d042-0326-4cf2-a870-bb2ae055d140)) (kafka.coordinator.group.GroupCoordinator) [2021-06-09 10:18:43,349] INFO [GroupCoordinator 0]: Stabilized group TEST-REALTIME-SZL generation 394 (__consumer_offsets-49) (kafka.coordinator.group.GroupCoordinator) [2021-06-09 10:18:43,349] INFO [GroupCoordinator 0]: Assignment received from leader for group TEST-REALTIME-SZL for generation 394 (kafka.coordinator.group.GroupCoordinator) [2021-06-09 10:18:43,349] ERROR [ReplicaManager broker=0] Error processing append operation on partition __consumer_offsets-49 (kafka.server.ReplicaManager) org.apache.kafka.common.errors.NotEnoughReplicasException: The size of the current ISR Set(0) is insufficient to satisfy the min.isr requirement of 2 for partition __consumer_offsets- 49服务端borker节点上topic 正常状态(每个topic的partition的leader和replica状态)应该是:

[2022-03-16 15:55:15,899] TRACE [Controller id=0] Leader imbalance ratio for broker 2 is 0.0 (kafka.controller.KafkaController) [2022-03-16 15:55:15,899] DEBUG [Controller id=0] Topics not in preferred replica for broker 1 Map() (kafka.controller.KafkaController) [2022-03-16 15:55:15,899] TRACE [Controller id=0] Leader imbalance ratio for broker 1 is 0.0 (kafka.controller.KafkaController) [2022-03-16 15:55:15,899] DEBUG [Controller id=0] Topics not in preferred replica for broker 0 Map() (kafka.controller.KafkaController) [2022-03-16 15:55:15,899] TRACE [Controller id=0] Leader imbalance ratio for broker 0 is 0.0 (kafka.controller.KafkaController)但此时是非正常状态:

failed to complete preferred replica leader election [2022-03-16 10:58:02,837] ERROR [Controller id=1] Error completing preferred replica leader election for partition T-TRADE-1 (kafka.controller.KafkaController) kafka.common.StateChangeFailedException: Failed to elect leader for partition T-TRADE-1 under strategy PreferredReplicaPartitionLeaderElectionStrategy at kafka.controller.PartitionStateMachine.$anonfun$doElectLeaderForPartitions$9(PartitionStateMachine.scala:390) at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62) at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55) at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49) at kafka.controller.PartitionStateMachine.doElectLeaderForPartitions(PartitionStateMachine.scala:388) at kafka.controller.PartitionStateMachine.electLeaderForPartitions(PartitionStateMachine.scala:315) at kafka.controller.PartitionStateMachine.doHandleStateChanges(PartitionStateMachine.scala:225) at kafka.controller.PartitionStateMachine.handleStateChanges(PartitionStateMachine.scala:141) at kafka.controller.KafkaController.kafka$controller$KafkaController$$onPreferredReplicaElection(KafkaController.scala:649) at kafka.controller.KafkaController.$anonfun$checkAndTriggerAutoLeaderRebalance$6(KafkaController.scala:1008) at scala.collection.immutable.Map$Map3.foreach(Map.scala:195) at kafka.controller.KafkaController.kafka$controller$KafkaController$$checkAndTriggerAutoLeaderRebalance(KafkaController.scala:989) at kafka.controller.KafkaController$AutoPreferredReplicaLeaderElection$.process(KafkaController.scala:1020) at kafka.controller.ControllerEventManager$ControllerEventThread.$anonfun$doWork$1(ControllerEventManager.scala:94) at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23) at kafka.metrics.KafkaTimer.time(KafkaTimer.scala:31) at kafka.controller.ControllerEventManager$ControllerEventThread.doWork(ControllerEventManager.scala:94) at kafka.utils.ShutdownableThread.run(ShutdownableThread.scala:82) [2022-03-16 10:58:02,837] WARN [Controller id=1] Partition T-TEST-SNP-2 failed to complete preferred replica leader election to 2. Leader is still 0 (kafka.controller.KafkaController) [2022-03-16 10:58:02,837] WARN [Controller id=1] Partition T-TEST-0 failed to complete preferred replica leader election to 2. Leader is still 0 (kafka.controller.KafkaController) [2022-03-16 10:58:02,838] INFO [Controller id=1] Partition T-TEST2-SNP-2 completed preferred replica leader election. New leader is 2 (kafka.controller.KafkaController) [2022-03-16 10:58:02,838] WARN [Controller id=1] Partition T-TEST2-1 failed to complete preferred replica leader election to 2. Leader is still 0 (kafka.controller.KafkaController)丢数据:min.insync.replicas=2 && unclean.leader.election.enable=true (It is default false) https://stackoverflow.com/questions/57277370/min-insync-replicas-vs-unclean-leader-election

# log dir不要用/tmp

# Consumer相关

# Producer相关

# 2.2.2 Client Config

# Consumer相关

# Producer相关

# 2.3 GUI & Commands

GUI:

- KafkaEsque https://kafka.esque.at

- https://github.com/airbnb/kafkat

命令:

/bin/*.sh - 单机命令 --broker-list

- 集群命令 --bootstrap-server

# 2.3.1 单机本地调试 Local

Start zookeeper

bin/zookeeper-server-start.sh config/zookeeper.properties

Start kafka server

bin/kafka-server-start.sh config/server.properties

bin/kafka-server-start.sh config/server-1.properties &

bin/kafka-server-start.sh config/server-2.properties &

config/server-1.properties:

broker.id=1

listeners=PLAINTEXT://:9093

log.dirs=/tmp/kafka-logs-1

Kafka CLI Tutorials (opens new window)

# Create topic

bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 3 --partitions 1 --topic my-replicated-topic

bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic mytopic

#You can use kafka-topics.sh to see how the Kafka topic is laid out among the Kafka brokers. The ---describe will show partitions, ISRs, and broker partition leadership.

[test@localhost kafka_2.12-2.2.0]$ bin/kafka-topics.sh --describe --bootstrap-server localhost:9092 --topic my-replicated-topic

OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

Topic:my-replicated-topic PartitionCount:1 ReplicationFactor:3 Configs:segment.bytes=1073741824

Topic: my-replicated-topic Partition: 0 Leader: 1 Replicas: 1,2,0 Isr: 1,2,0

# Reassign Partition

kafka-reassign-partitions.sh

-- 1.generate current assignment

kafka-reassign-partitions --zookeeper hostname:port --topics-to-move-json-file topics to move.json --broker-list broker 1, broker 2 --generate

-- 2.modify and apply

kafka-reassign-partitions --zookeeper hostname:port --reassignment-json-file reassignment configuration.json --bootstrap-server hostname:port --execute

-- 3.verify

kafka-reassign-partitions --zookeeper hostname:port --reassignment-json-file reassignment configuration.json --bootstrap-server hostname:port --verify

# Produce

bin/kafka-console-producer.sh --broker-list localhost:9092 --topic my-replicated-topic

# for test

bin/kafka-verifiable-producer.sh --topic consumer-tutorial --max-messages 200000 --broker-list localhost:9092

# Consume

bin/kafka-topics.sh --list --zookeeper localhost:2181

bin/kafka-topics.sh --describe --zookeeper localhost:2181 --topic "ngs.svl.20220519.fib.result"

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --from-beginning --topic my-replicated-topic

bin/kafka-console-consumer.sh --bootstrap-server <你的kafka配置> --topic T-RISK --partition 0 --offset 3350 --max-messages 1

kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic first_topic --formatter kafka.tools.DefaultMessageFormatter --property print.timestamp=true --property print.key=true --property print.value=true --from-beginning

More properties are available such as:

print.partition

print.offset

print.headers

key.separator

line.separator

headers.separator

#inspect partition assignments and consumption progress

bin/kafka-consumer-groups.sh --new-consumer --describe --group consumer-tutorial-group --bootstrap-server localhost:9092

=>

shows all the partitions assigned within the consumer group, which consumer instance owns it, and the last committed offset (reported here as the “current offset”). The lag of a partition is the difference between the log end offset and the last committed offset.

#count total messages for a topic:

kafka-console-consumer.sh \

--from-beginning \

--bootstrap-server <BROKER:PORT> \

--property print.key=true \

--property print.value=false \

--property print.partition \

--topic <TOPIC_NAME> | tail -n 10|grep "Msg Count="

$ kafka-run-class.sh kafka.tools.GetOffsetShell \

--broker-list <HOST1:PORT,HOST2:PORT> \

--topic <TOPIC_NAME>

kafka-run-class.sh kafka.admin.ConsumerGroupCommand \

--group <GROUP_NAME> \

--bootstrap-server localhost:9092 \

--describe

kafka-run-class.sh kafka.tools.ConsumerOffsetChecker \

--topic <TOPIC_NAME> \

--zookeeper localhost:2181 \

--group <GROUP_NAME>

#!/usr/bin/bash

# Copyright (c) 2016 AsiaInvestment Pte. Ltd. Singapore

# All rights reserved.

BOOTS_STRAP_SERVER=127.0.0.1:9092

ZK_SERVER=127.0.0.1:2181

pushd /kafka_2.12-2.2.0/bin &>/dev/null

echo "#################################"

echo "### TOPICS"

echo "#################################"

topics=(`./kafka-topics.sh --list --zookeeper $ZK_SERVER | grep -v grep | awk '{print $1}'`)

for topic in ${topics[@]}

do

./kafka-topics.sh --describe --bootstrap-server $BOOTS_STRAP_SERVER --topic $topic

done

echo "#################################"

echo "### CONSUMER GROUP"

echo "#################################"

consumer_groups=(`./kafka-consumer-groups.sh --list --bootstrap-server $BOOTS_STRAP_SERVER | grep -v grep | awk '{print $1}'`)

for group in ${consumer_groups[@]}

do

echo " >>>group:$group<<<"

./kafka-consumer-groups.sh --describe --group $group --bootstrap-server $BOOTS_STRAP_SERVER

done

popd &>/dev/null

# dump log

./kafka-run-class.sh kafka.tools.DumpLogSegments --deep-iteration --print-data-log --files /tmp/kafka-logs/mftp1-0/00000000000000000000.log

# describe

/bin/kafka-configs.sh --describe --bootstrap-server "192.168.250.11:9092" --all --entity-type "brokers" --entity-name "0"

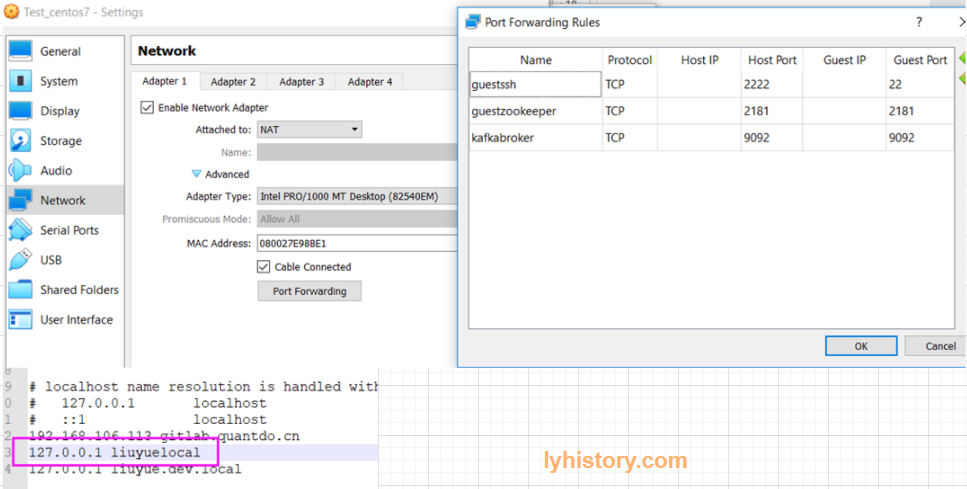

# 2.3.2 虚拟机远程调试 Remote

VM Host only mode

systemctl stop firewalld

Test from windows bat

.\kafka-console-producer.bat --broker-list x.x.x.x:9092 --topic test

C:\Workspace\Temp\kafka_2.12-2.2.1\bin\windows> .\kafka-console-consumer.bat --bootstrap-server x.x.x.x:9092 --from-beginning --topic test

Test from windows with python

pip install python-kafka

#python NO brokeravaialble

producer = KafkaProducer(bootstrap_servers=['x.x.x.x:9092'], api_version=(0,10))

#assert type(value_bytes) in (bytes, bytearray, memoryview, type(None)) AssertionError

producer.send('test', 'hi'.encode('utf-8'))

Failed on VM NAT mode

Someone succeed: https://boristyukin.com/connecting-to-kafka-on-virtualbox-from-windows/ But keep got: org.apache.kafka.common.errors.TimeoutException: Topic not present in metadata after 60000 ms

Port forward: 9092 Changed vm hostname And map on host machine

When producer/consumer accesses the Kafka broker, the Kafka broker returns its host name for data producer or consumer at default settings. So producers/consumers need to resolve broker's host name to IPAddress. For broker returning an arbitrary host name, use the advertised.listeners settings. listeners vs. advertised.listeners https://stackoverflow.com/questions/42998859/kafka-server-configuration-listeners-vs-advertised-listeners

Turned on log level to DEBUG

May be need to do something like: https://forums.virtualbox.org/viewtopic.php?f=1&t=29990 https://github.com/trivago/gollum/issues/93

Telnet 127.0.0.1/guesthost 9092 WORKS, but it doesn’t mean it can reach to the guest machine, simply because of port forwarding opened 9092 port on host

Final vm workaround (NAT+HOSTONLY) vim /etc/sysconfig/network-scripts/ifcfg-enp0s3

# 3. 管理维护 Maintain

https://kafka.apache.org/documentation/#operations

# 3.1 节点状态

------------------------------------------

--- zookeeper status

------------------------------------------

/zookeeper/bin/zkServer.sh status

------------------------------------------

--- 查看kafka broker节点

------------------------------------------

>/zookeeper/bin/zkCli.sh -server localhost:2181 #Make sure your Broker is already running

#ls /brokers/ids # Gives the list of active brokers

#ls /brokers/topics #Gives the list of topics

#get /brokers/ids/0 #Gives more detailed information of the broker id '0'

# 3.2 Backup (point-in-time snapshot) & Restore

为什么需要备份?

https://medium.com/@anatolyz/introducing-kafka-backup-9dc0677ea7ee

Replication handles many error cases but by far not all. What about the case that there is a bug in Kafka that deletes old data? What about a misconfiguration of the topic (are you sure, that your value of retention.ms is a millisecond value?)? What about an admin that accidentally deleted the whole Prod Cluster because they thought they were on dev? What about security breaches? If an attacker gets access to your Kafka Management interface, they can do whatever they like.

Of course, this does not matter too much if you are using Kafka to distribute click-streams data for your analytics department and it is tolerable to loose some data. But if you use Kafka as your “central nervous system” for your company and you store your core business data in Kafka you better think about a cold storage backup for your Kafka Cluster.

# 停机备份

https://www.digitalocean.com/community/tutorials/how-to-back-up-import-and-migrate-your-apache-kafka-data-on-ubuntu-18-04

单机版例子,集群类似,只是需要停掉所有的zookeeper和kafka,然后备份其中一台机器的zookeeper和kafka,然后在所有机器上恢复

sudo -iu kafka

~/kafka/bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic BackupTopic

echo "Test Message 1" | ~/kafka/bin/kafka-console-producer.sh --broker-list localhost:9092 --topic BackupTopic > /dev/null

~/kafka/bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic BackupTopic --from-beginning

-----------------------------------------------------------------------------------

--- Backing Up the ZooKeeper State Data

-----------------------------------------------------------------------------------

kafka内置zookeeper:

ZooKeeper stores its data in the directory specified by the dataDir field in the /kafka/config/zookeeper.properties:

dataDir=/tmp/zookeeper

使用外置zookeeper:

/zookeeper/conf/zoo.cfg

dataDir=/opt/zookeeper-3.4.8/zkdata

dataLogDir=/opt/zookeeper-3.4.8/logs

compressed archive files are a better option over regular archive files to save disk space:

tar -czf /opt/kafka_backup/zookeeper-backup.tar.gz /opt/zookeeper-3.4.8/zkdata/*

忽略错误 tar: Removing leading `/' from member names

-----------------------------------------------------------------------------------

--- Backing Up the Kafka Topics and Messages

-----------------------------------------------------------------------------------

Kafka stores topics, messages, and internal files in the directory that the log.dirs field specifies

/kafka/config/server.properties:

log.dirs=/opt/kafka_2.12-2.2.0/kafka-logs

stop the Kafka service so that the data in the log.dirs directory is in a consistent state when creating the archive with tar

sudo systemctl stop kafka (前面安装时移除了kafka的sudo权限,需要使用其他有sudo权限的非root用户执行)

sudo -iu kafka

tar -czf /opt/kafka_backup/kafka-backup.tar.gz /opt/kafka_2.12-2.2.0/kafka-logs/*

sudo systemctl start kafka (同样切换其他用户)

sudo -iu kafka

-----------------------------------------------------------------------------------

--- Restoring the ZooKeeper Data & Kafka Data

-----------------------------------------------------------------------------------

You need to stop the Kafka and ZooKeeper services as a precaution against the data directories receiving invalid data during the restoration process.

sudo systemctl stop kafka

sudo systemctl stop zookeeper

sudo -iu kafka

rm -r /opt/zookeeper-3.4.8/zkdata/*

tar -C /opt/zookeeper-3.4.8/zkdata -xzf /opt/kafka_backup/zookeeper-backup.tar.gz --strip-components 2

(specify the --strip 2 flag to make tar extract the archive’s contents in /tmp/zookeeper/ itself and not in another directory (such as /tmp/zookeeper/tmp/zookeeper/) inside of it.)

rm -r /opt/kafka_2.12-2.2.0/kafka-logs/*

tar -C /opt/kafka_2.12-2.2.0/kafka-logs -xzf /opt/kafka_backup/kafka-backup.tar.gz --strip-components 2

sudo systemctl start kafka

sudo systemctl start zookeeper

sudo -iu kafka

-----------------------------------------------------------------------------------

--- Verifying the Restoration

-----------------------------------------------------------------------------------

~/kafka/bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic BackupTopic --from-beginning

https://stackoverflow.com/questions/47791039/backup-restore-kafka-and-zookeeper/48337651

# 在线备份

Still no. You’re dealing with a distributed system. It’s not magic. Any attempt to trigger a snapshot ‘simultaneously’ across multiple hosts/disks is going to be subject to some small level of timing difference whether those are VMs managed by you in the Cloud or containers in K8s with persistent disks managed by the Cloud provider. It’d probably work in small scale tests, but break under significant production load.

https://www.reddit.com/r/apachekafka/comments/jb400p/kafka_backup_and_recovery/g8vkju7/

https://www.reddit.com/r/apachekafka/comments/g73nk9/how_to_take_full_backupsnapshot_of_kafka/

解决方案:

Support point-in-time backups :

提出需求:https://github.com/itadventurer/kafka-backup/issues/52

解决方案:

1)当前版本在一定场景下可以使用:

- Let Kafka Backup running in the background

- Kafka Backup writes data continuously in the background to the file system

kill -9Kafka Backup as soon as it is "finished", i.e. it finished writing your data. This should be promptly after you finished producing data- move the data of Kafka Backup to your new destination.

需要用到kafka自带的connect-standalone.sh 所以要配置环境变量

export PATH=$PATH:~/kafka/bin

backup:

sudo env "PATH=$PATH" backup-standalone.sh --bootstrap-server localhost:9092 --target-dir /path/to/backup/dir --topics 'topic1,topic2'

~/kafka/bin/kafka-topics.sh --bootstrap-server localhost:9092 --delete --topic topic1

~/kafka/bin/kafka-topics.sh --zookeeper localhost:2181 --delete --topic 'topic.*'

restore:

restore-standalone.sh --bootstrap-server localhost:9092 --target-dir /path/to/backup/dir --topics 'topic1,topic2'

~/kafka/bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic BackupTopic --from-beginning

2)新增的支持 https://github.com/itadventurer/kafka-backup/pull/99 但是没有发布

https://www.confluent.io/blog/3-ways-prepare-disaster-recovery-multi-datacenter-apache-kafka-deployments/

# 3.3 re-partition?

注意:改变partition数量不会重新动态分配/迁移现有数据

Alter:

bin/kafka-topics.sh --bootstrap-server broker_host:port --alter --topic my_topic_name

--partitions 40

kafka-reassign-partitions命令是针对Partition进行重新分配,而不能将整个Topic的数据重新均衡到所有的Partition中。

https://segmentfault.com/a/1190000011721643

https://cloud.tencent.com/developer/article/1349448

Be aware that one use case for partitions is to semantically partition data, and adding partitions doesn't change the partitioning of existing data so this may disturb consumers if they rely on that partition. That is if data is partitioned by hash(key) % number_of_partitions then this partitioning will potentially be shuffled by adding partitions but Kafka will not attempt to automatically redistribute data in any way.

# 3.4 工具/日志排查

https://kafka.apache.org/documentation/#monitoring

# 系统排查

$ jps

17303 Jps

29819 Kafka

$ ll /proc/29819/fd

# 日志位置

/kafka/logs

controller.log.2021-03-01-00 kafka集群选举出的Controller负责通信 server.log.2021-03-01-00 具体负责某个partition replica的leader的日志以及负责某个consumer group的group coordinator日志 state-change.log.2021-03-01 记录topic partition的online offline等状态信息

kafkaServer.out跟server.log一样,只是会定期archive到server.log

/kafka/kafka-logs

__consumer_offsets-0/

__transaction_state-0/

[TOPIC]-[PARTITION]/

# kafka server端日志解析

---------------------------------------------------------------------------

--- create/delete topic, from controller.log

1)这个是命令创建的

./kafka-topics.sh --create --bootstrap-server $KFK_CLUSTER --replication-factor 2 --partitions 3 --topic T-XXX

replica是2,所以对应两个broker,所以后面还要选一个prefer replica作为leader

[2021-04-14 11:04:11,239] INFO [Controller id=0] New topics: [Set(T-XXX)], deleted topics: [Set()], new partition replica assignment [Map(T-XXX-2 -> Vector(3, 0), T-XXX-1 -> Vector(0, 1), T-XXX-0 -> Vector(1, 3))] (kafka.controller.KafkaController)

--- elect TOPIC-PARTITION replica leader

INFO [Controller id=0] Partition T-XXX-2 completed preferred replica leader election. New leader is 3 (kafka.controller.KafkaController)

2)下面这个内部topic是何时被创建的(更准确的应该是说其各个分区是何时创建的)

https://cloud.tencent.com/developer/news/19958

__consumer_offsets创建的时机有很多种,主要包括:

broker响应FindCoordinatorRequest请求时

broker响应MetadataRequest显式请求__consumer_offsets元数据时

其中以第一种最为常见,而第一种时机的表现形式可能有很多,比如用户启动了一个消费者组(下称consumer group)进行消费或调用kafka-consumer-groups --describe等

注意,各自分区都是对应到一个broker,所以consumer group也就是直接对应到了相应的broker(group coordinator)

[2021-04-14 11:14:20,601] INFO [Controller id=0] New topics: [Set(__consumer_offsets)], deleted topics: [Set()], new partition replica assignment [Map(__consumer_offsets-22 -> Vector(3), __consumer_offsets-30 -> Vector(1), __consumer_offsets-8 -> Vector(0), __consumer_offsets-21 -> Vector(1), __co

对应到项目代码应该就是消费组 consumer group启动的时候

3)这个是代码创建的:

auto.create.topics.enable=true,代码读取T-XXX-SNP就会创建:

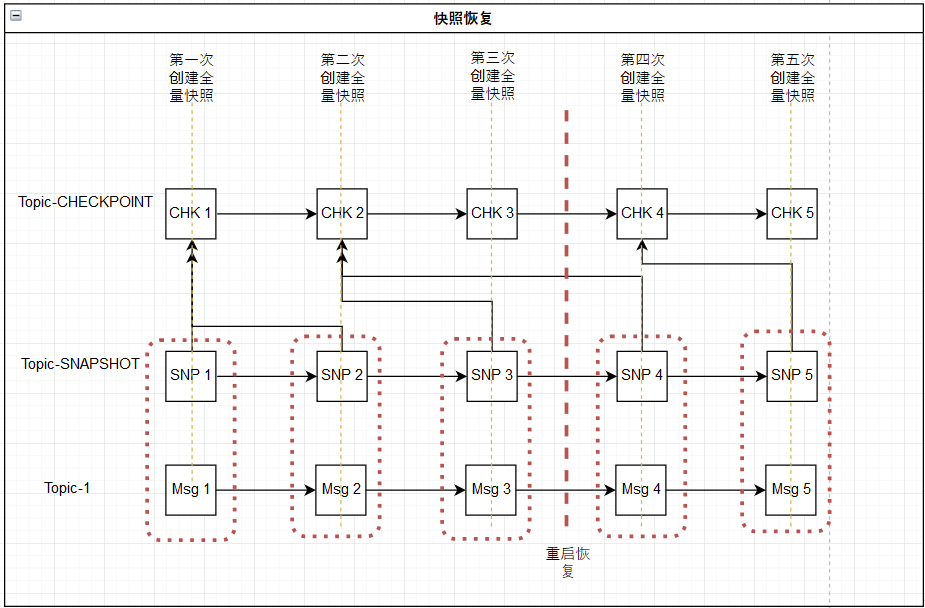

SNP就是我们后面会提到的所谓自己维护的增量快照

默认用了1个replica,所以直接就对应某个broker

[2021-04-14 11:14:27,347] INFO [Controller id=0] New topics: [Set(T-XXX-SNP)], deleted topics: [Set()], new partition replica assignment [Map(T-sss-SNP-2 -> Vector(3), T-XXX-SNP-1 -> Vector(1), T-XXX-SNP-0 -> Vector(0))] (kafka.controller.KafkaController)

[2021-04-14 11:14:27,347] INFO [Controller id=0] New partition creation callback for T-XXX-SNP-2,T-XXX-SNP-1,T-XXX-SNP-0 (kafka.controller.KafkaController)

最后变成一张broker map:

[2021-04-14 11:18:36,979] DEBUG [Controller id=0] Preferred replicas by broker Map(1 -> Map(T-JOB-SNP-0 -> Vector(1), __consumer_offsets-27 -> Vector(1), T-TEST-1 -> Vector(1, 0), __transaction_state-2 -> Vector(1, 3), __transaction_state-20 -> Vector(1, 3), __consumer_offsets-33 -> Vector(1), T-DBMS-SNP-0 -> Vector(1), T-CAPTURE-0 -> Vector(1, 0), __consumer_offsets-36 -> Vector(1), __transaction_state-29 -> Vector(1, 0), __consumer_offsets-42 -> Vector(1), __consumer_offsets-3 -> Vector(1), __consumer_offsets-18 -> Vector(1), __transaction_state-38 -> Vector(1, 3), T-TEST-SNP-2 -> Vector(1, 3), T-MEMBER-1 -> Vector(1, 0), __consumer_offsets-15 -> Vector(1), __consumer_offsets-24 -> Vector(1), T-EOD-1 -> Vector(1, 0), T-QUOTATION-2 -> Vector(1, 0), __transaction_state-14 -> Vector(1, 3), __transaction_state-44 -> Vector(1, 3), T-RISK-0 -> Vector(1, 3), T-RISK-SNP-2 -> Vector(1), __transaction_state-32 -> Vector(1, 3), __consumer_offsets-48 -> Vector(1), T-CAPTURE-SNP-1 -> Vector(1), T-TEST-0 -> Vector(1, 0), T-EOD-SNP-1 -> Vector(1), __transaction_state-17 -> Vector(1, 0), __transaction_state-23 -> Vector(1, 0), __transaction_state-47 -> Vector(1, 0), __consumer_offsets-6 -> Vector(1), T-QUOTATION-SNP-1 -> Vector(1), __transaction_state-26 -> Vector(1, 3), T-JOB-2 -> Vector(1, 0), __transaction_state-5 -> Vector(1, 0), __transaction_state-8 -> Vector(1, 3)

---------------------------------------------------------------------------

--- elect Controller from controller.log

[2021-04-14 10:53:31,013] DEBUG [Controller id=1] Broker 0 has been elected as the controller, so stopping the election process. (kafka.controller.KafkaController)

---------------------------------------------------------------------------

--- rebalance by group coordinator broker 3,from server.log or kafkaServer.out

[2021-04-15 08:24:20,809] INFO [GroupCoordinator 3]: Preparing to rebalance group XXXX-SZL in state PreparingRebalance with old generation 0 (__consumer_offsets-28) (reason: Adding new member consumer-1-11aac9d7-8a72-44fe-bf5c-0519941bbb6a) (kafka.coordinator.group.GroupCoordinator)

[2021-04-15 08:24:20,811] INFO [GroupCoordinator 3]: Stabilized group XXX-SZL generation 1 (__consumer_offsets-28) (kafka.coordinator.group.GroupCoordinator)

[2021-04-15 08:24:20,823] INFO [GroupCoordinator 3]: Assignment received from leader for group XXXX-SZL for generation 1 (kafka.coordinator.group.GroupCoordinator)

[2021-04-15 08:24:22,543] INFO [TransactionCoordinator id=3] Initialized transactionalId XXX-TID-0 with producerId 1004 and producer epoch 1 on partition __transaction_state-15 (kafka.coordinator.transaction.TransactionCoordinator)

[2021-04-15 08:24:23,957] INFO [GroupCoordinator 3]: Preparing to rebalance group XXXX-SZL in state PreparingRebalance with old generation 0 (__consumer_offsets-49) (reason: Adding new member consumer-1-416c9379-0f89-48f4-b125-eadf648d57c7) (kafka.coordinator.group.GroupCoordinator)

[2021-04-15 08:24:23,959] INFO [GroupCoordinator 3]: Stabilized group XXXXE-SZL generation 1 (__consumer_offsets-49) (kafka.coordinator.group.GroupCoordinator)

[2021-04-15 08:24:23,972] INFO [GroupCoordinator 3]: Assignment received from leader for group XXX-SZL for generation 1 (kafka.coordinator.group.GroupCoordinator)

[2021-04-15 08:24:25,524] INFO [TransactionCoordinator id=3] Initialized transactionalId XXXX-TID-0 with producerId 1005 and producer epoch 1 on partition __transaction_state-18 (kafka.coordinator.transaction.TransactionCoordinator)

---------------------------------------------------------------------------

--- closed kafka client, from server.log or kafkaServer.out

[2021-04-16 17:51:05,705] INFO [GroupCoordinator 3]: Member consumer-1-416c9379-0f89-48f4-b125-eadf648d57c7 in group TEST-REALTIME-SZL has failed, removing it from the group (kafka.coordinator.group.GroupCoordinator)

[2021-04-16 17:51:05,707] INFO [GroupCoordinator 3]: Preparing to rebalance group TEST-REALTIME-SZL in state PreparingRebalance with old generation 1 (__consumer_offsets-49) (reason: removing member consumer-1-416c9379-0f89-48f4-b125-eadf648d57c7 on heartbeat expiration) (kafka.coordinator.group.GroupCoordinator)

客户端consumer group所有的consumer都停掉了,所以是empty,然后整个group宣布dead

[2021-04-16 17:51:05,707] INFO [GroupCoordinator 3]: Group XXX-SZL with generation 2 is now empty (__consumer_offsets-49) (kafka.coordinator.group.GroupCoordinator)

[2021-04-16 17:53:56,956] INFO [GroupMetadataManager brokerId=3] Group XXX-SZL transitioned to Dead in generation 2 (kafka.coordinator.group.GroupMetadataManager)

---------------------------------------------------------------------------

---shutdown kafka borker 3, from server.log or kafkaServer.out

[2021-04-15 08:59:31,965] INFO Terminating process due to signal SIGTERM (org.apache.kafka.common.utils.LoggingSignalHandler)

[2021-04-15 08:59:31,973] INFO [KafkaServer id=3] shutting down (kafka.server.KafkaServer)

[2021-04-15 08:59:31,977] INFO [KafkaServer id=3] Starting controlled shutdown (kafka.server.KafkaServer)

[2021-04-15 08:59:32,066] INFO [ReplicaFetcherManager on broker 3] Removed fetcher for partitions Set(__transaction_state-45, __transaction_state-27, __transaction_state-9, T-XXX-2, T-XXX-1, __transaction_state-39, __transaction_state-36, ...... __transaction_state-0) (kafka.server.ReplicaFetcherManager)

--------------------------------------------------------

--- consumer subscribe topic 引起的rebalance

[2022-03-12 18:33:00,630] INFO [GroupCoordinator 3]:

Preparing to rebalance group TEST-TRADEFRONT-SZL in state PreparingRebalance with old generation 0 (__consumer_offsets-43)

(reason: Adding new member consumer-2-808a151c-06dd-4df8-83fc-8b1b15a53a4d) (kafka.coordinator.group.GroupCoordinator)

[2022-03-12 18:33:00,631] INFO [GroupCoordinator 3]:

Stabilized group TEST-TRADEFRONT-SZL generation 1 (__consumer_offsets-43) (kafka.coordinator.group.GroupCoordinator)

[2022-03-12 18:33:00,634] INFO [GroupCoordinator 3]:

Assignment received from leader for group TEST-TRADEFRONT-SZL for generation 1 (kafka.coordinator.group.GroupCoordinator)

-----------------------------------------------------

--- consumer removed

[2022-03-12 18:41:58,862] INFO [GroupCoordinator 3]:

Member consumer-2-808a151c-06dd-4df8-83fc-8b1b15a53a4d in group TEST-TRADEFRONT-SZL has failed, removing it from the group (kafka.coordinator.group.GroupCoordinator)

[2022-03-12 18:41:58,862] INFO [GroupCoordinator 3]:

Preparing to rebalance group TEST-TRADEFRONT-SZL in state PreparingRebalance with old generation 1 (__consumer_offsets-43)

(reason: removing member consumer-2-808a151c-06dd-4df8-83fc-8b1b15a53a4d on heartbeat expiration) (kafka.coordinator.group.GroupCoordinator)

[2022-03-12 18:41:58,863] INFO [GroupCoordinator 3]:

Group TEST-TRADEFRONT-SZL with generation 2 is now empty (__consumer_offsets-43) (kafka.coordinator.group.GroupCoordinator)

[2022-03-12 18:43:22,158] INFO [GroupCoordinator 3]:

Preparing to rebalance group TEST-TRADEFRONT-SZL in state PreparingRebalance with old generation 2 (__consumer_offsets-43)

(reason: Adding new member consumer-2-aedafa05-22cb-4130-8e91-927c99f3fd06) (kafka.coordinator.group.GroupCoordinator)

[2022-03-12 18:43:22,159] INFO [GroupCoordinator 3]:

Stabilized group TEST-TRADEFRONT-SZL generation 3 (__consumer_offsets-43) (kafka.coordinator.group.GroupCoordinator)

[2022-03-12 18:43:22,162] INFO [GroupCoordinator 3]:

Assignment received from leader for group TEST-TRADEFRONT-SZL for generation 3 (kafka.coordinator.group.GroupCoordinator)

[2022-03-12 18:46:57,292] INFO [GroupMetadataManager brokerId=3] Removed 0 expired offsets in 0 milliseconds. (kafka.coordinator.group.GroupMetadataManager)

[2022-03-12 18:54:50,418] INFO [GroupCoordinator 3]:

Member consumer-2-aedafa05-22cb-4130-8e91-927c99f3fd06 in group TEST-TRADEFRONT-SZL has failed, removing it from the group (kafka.coordinator.group.GroupCoordinator)

[2022-03-12 18:54:50,418] INFO [GroupCoordinator 3]:

Preparing to rebalance group TEST-TRADEFRONT-SZL in state PreparingRebalance with old generation 3 (__consumer_offsets-43) (reason: removing member consumer-2-aedafa05-22cb-4130-8e91-927c99f3fd06 on heartbeat expiration) (kafka.coordinator.group.GroupCoordinator)

[2022-03-12 18:54:50,418] INFO [GroupCoordinator 3]:

Group TEST-TRADEFRONT-SZL with generation 4 is now empty (__consumer_offsets-43) (kafka.coordinator.group.GroupCoordinator)

[2022-03-12 18:56:57,292] INFO [GroupMetadataManager brokerId=3]

Group TEST-TRADEFRONT-SZL transitioned to Dead in generation 4 (kafka.coordinator.group.GroupMetadataManager)

Reasons:

A consumer left the group (clean shut down)

A consumer seems to be dead in the view of Kafka

https://stackoverflow.com/questions/54183045/unexpected-failing-rebalancing-of-consumers

----Shrinking ISR from

代表有broker节点失联或挂掉

----Expanding ISR from

代码broker节点恢复或新增节点

----Will not attempt to authenticate using SASL (unknown error)

kafka server端无法连接zookeeper或者连接异常

# kafka client端日志解析

---------------------------------------------------------------------------

--- metadata

this.kafkaConsumer.partitionsFor(context.getConfig().getTaskTopic())

=>

2021-04-01 14:37:00.622 INFO 32380GG [main] o.a.k.c.Metadata : Cluster ID: uEekh0baSnKon5ENwtY9dg

consumer.endOffsets(Collections.singleton(topicPartition)).get(topicPartition)

=>

2021-04-01 14:37:51.146 INFO 32380GG [RKER-RECOVERY-2] o.a.k.c.Metadata : Cluster ID: uEekh0baSnKon5ENwtY9dg

或

2021-03-27 15:40:29 395-[org.apache.kafka.clients.Metadata.update(Metadata.java:365)]-[INFO] Cluster ID: pjnHKkklRtuSQjVDsUbgVw

---------------------------------------------------------------------------

--- subscribe to topic or to topic|partition

this.kafkaConsumer.subscribe(Collections.singleton(context.getConfig().getTaskTopic()), new SimpleWorkBalancer(context.getRestorer(), this::removeWorker, this::addWorker));

=>

2021-04-01 14:37:00.639 INFO 32380GG [main] o.a.k.c.c.KafkaConsumer : [Consumer clientId=consumer-1, groupId=XXXX-SZL] Subscribed to topic(s): T-XXXX

consumer.assign(Collections.singleton(topicPartition));

=>

2021-04-01 14:38:09.783 INFO 32380GG [RKER-RECOVERY-2] o.a.k.c.c.KafkaConsumer : [Consumer clientId=consumer-2, groupId=RESTORE-1] Subscribed to partition(s): T-XXXX-SNP-1

---------------------------------------------------------------------------

--- Discover group

consumer.poll(Duration.ofMillis(10_000L));

=>

如果是assign mode,如果前面没有调用endOffsets之类获取metadata,此时会打印(估计跟consumer.seek(topicPartition, checkpointOffset);有关,当然如果之前调用过就会在调用时打印,此时不会打印):

2021-04-01 15:56:50.755 INFO 22064GG [RKER-RECOVERY-1] o.a.k.c.Metadata : Cluster ID: uEekh0baSnKon5ENwtY9dg

然后打印

2021-04-01 14:37:40.379 INFO 32380GG [XXXX-MANAGER] ordinator$FindCoordinatorResponseHandler : [Consumer clientId=consumer-1, groupId=XXX-SZL] Discovered group coordinator 1.1.1.1:9092 (id: 2147483647 rack: null)

2021-04-01 14:38:41.369 INFO 32380GG [RKER-RECOVERY-2] ordinator$FindCoordinatorResponseHandler : [Consumer clientId=consumer-2, groupId=RESTORE-1] Discovered group coordinator 1.1.1.1:9092 (id: 2147483647 rack: null)

如果触发了rebalance,则接着打印

2021-03-31 08:59:01.727 INFO 20080GG [XXX-MANAGER] o.a.k.c.c.i.AbstractCoordinator : [Consumer clientId=consumer-1, groupId=TEST-PRICEENGINE-SZL] (Re-)joining group

2021-03-31 08:59:01.904 INFO 20080GG [XXX-MANAGER] o.a.k.c.c.i.AbstractCoordinator : [Consumer clientId=consumer-1, groupId=TEST-PRICEENGINE-SZL] (Re-)joining group

2021-03-31 08:59:04.122 INFO 20080GG [XXX-MANAGER] o.a.k.c.c.i.AbstractCoordinator$1 : [Consumer clientId=consumer-1, groupId=TEST-PRICEENGINE-SZL] Successfully joined group with generation 10

---------------------------------------------------------------------------

--- todo

Leader imbalance ratio for broker 3 is 0.0

https://stackoverflow.com/questions/57475580/whats-the-difference-between-kafka-preferred-replica-election-sh-and-auto-leade

# kafka 常见异常Exceptions

Kafka常见错误整理 https://cloud.tencent.com/developer/article/1508919

--- NotEnoughReplicasException

The size of the current ISR Set(0) is insufficient to satisfy the min.isr requirement

https://stackoverflow.com/questions/62770272/notenoughreplicasexception-the-size-of-the-current-isr-set2-is-insufficient-t

例如:对于producer来说就是

The size of the current ISR Set(0) is insufficient to satisfy the min.isr requirement of 2 for partition __transaction_state-

对应client端的错误日志为:

java.lang.reflect.UndeclaredThrowableException: null

Caused by: org.apache.kafka.common.errors.TimeoutException: Timeout expired while initializing transactional state in 60000ms.

--- LEADER_NOT_AVAILABLE:

topic 可能不存在,kafka api默认会自动创建

--- offset commit failed on partition this is not the correct coordinator

--- Offset commit failed on partition xxx at offset 957: The coordinator is not aware of this member

https://www.cnblogs.com/chuijingjing/p/12797035.html

--- topic not presetn in metadata after 6000ms

partition 可能不存在或者是其他问题,比如

https://blog.csdn.net/bay_bai/article/details/104799498

https://github.com/wurstmeister/kafka-docker/issues/553

--- Connection to node -1 could not be established. Broker may not be available.

listener设置不对

https://blog.csdn.net/Mr_hou2016/article/details/79484032

--- Connection to node -2 could not be established. Broker may not be available.

--- org.apache.kafka.common.errors.TimeoutException: Failed to get offsets by times in 30000ms

endOffsets()->fetchOffsetsByTimes

--- UNKNOWN_MEMBER_ID

Attempt to heartbeat failed for since member id consumer-1-c4ff67d3-b776-4994-9179-4a19f9ff87a6 is not valid

可能1:如果当前 group 的状态为 Dead,则说明对应的 group 不再可用,或者已经由其它 GroupCoordinator 实例管理,直接响应 UNKNOWN_MEMBER_ID 错误,消费者可以再次请求获取新接管的 GroupCoordinator 实例所在的位置信息。

可能2:消费者会在轮询获取消息或提交偏移量时发送心跳,如果消费者停止发送心跳的时间足够长,会话就会过期,组协调器认为它已经死亡,就会触发一次再均衡,至于原因,有可能是:

一般来说producer的生产消息的逻辑速度都会比consumer的消费消息的逻辑速度快,当producer在短时间内产生大量的数据丢进kafka的broker里面时,可能出现类似错误:Offset commit failed on partition : The coordinator is not aware of this member.

1) kafka的consumer会从broker里面取出一批数据,给消费线程进行消费;

2) 由于取出的一批消息数量太大,consumer在session.timeout.ms时间之内没有消费完成;

3) consumer coordinator 会由于没有接受到心跳而挂掉;

4) 由于自动提交offset失败,reblance之后又重新消费之前的一批数据(offset提交失败),恶性循环,越积越多;

- https://www.cnblogs.com/chuijingjing/p/12797035.html

--- Group coordinator is unavailable or invalid

Group coordinator x.x.x.x:9092 (id: 2147483647 rack: null) is unavailable or invalid, will attempt rediscovery

--- CommitFailedException

If a simple consumer(assign mode) tries to commit offsets with a group id which matches an active consumer group, the coordinator will reject the commit (which will result in a CommitFailedException). However, there won’t be any errors if another simple consumer instance shares the same group id.

--- INVALID_FETCH_SESSION_EPOCH.

Node 1 was unable to process the fetch request with (sessionId=1972558084, epoch=904746): INVALID_FETCH_SESSION_EPOCH.

--- UnkownProducerIdException

基本原因就是producer创建后超过 retention expire 过期时间或者大小,所以被清理,kafka服务端向客户端报错后会立即重新注册该producer,所以最好的处理办法是callback中重试

https://stackoverflow.com/questions/61084031/how-to-handle-unkownproduceridexception/69999568#69999568

2021-11-16 09:08:29.206 [31mERROR[m [35m5527GG[m [ad | producer-1] [36mo.a.k.c.p.i.Sender[m : [Producer clientId=producer-1] The broker returned org.apache.kafka.common.errors.UnknownProducerIdException: This exception is raised by the broker if it could not locate the producer metadata associated with the producerId in question. This could happen if, for instance, the producer's records were deleted because their retention time had elapsed. Once the last records of the producerId are removed, the producer's metadata is removed from the broker, and future appends by the producer will return this exception. for topic-partition T-TEST-1 at offset -1. This indicates data loss on the broker, and should be investigated.

2021-11-16 09:08:29.207 [32m INFO[m [35m5527GG[m [ad | producer-1] [36mo.a.k.c.p.i.TransactionManager[m : [Producer clientId=producer-1] ProducerId set to -1 with epoch -1

2021-11-16 09:08:29.219 [32m INFO[m [35m5527GG[m [ad | producer-1] [36mo.a.k.c.p.i.TransactionManager[m : [Producer clientId=producer-1] ProducerId set to 35804 with epoch 0

# kafka-log-dirs.sh

./bin/kafka-log-dirs.sh --describe --bootstrap-server hostname:port --broker-list broker 1, broker 2 --topic-list topic 1, topic 2

# kafka-dump-log.sh

KAFKA Internal consumer topic log:

./bin/kafka-dump-log.sh --files ./kafka-logs/T-TOPIC-1/00000000000000000192.log --print-data-log

# kafka-console-consumer.sh

KAFKA Internal offset topic: __consumer_offsets:

#Create consumer config

echo "exclude.internal.topics=false" > /tmp/consumer.config

#Consume all offsets

./kafka-console-consumer.sh --consumer.config /tmp/consumer.config \

--formatter "kafka.coordinator.group.GroupMetadataManager\$OffsetsMessageFormatter" \

--bootstrap-server localhost:9092 --topic __consumer_offsets --from-beginning

KAFKA Internal transaction log:

暂时没找到方法看,参考

You can look to source code of

TransactionLogMessageParserclass insidekafka/tools/DumpLogSegments.scalafile as an example. It usesreadTxnRecordValuefunction fromTransactionLogclass. The first argument for this function could be retrieved viareadTxnRecordKeyfunction of the same class.https://stackoverflow.com/questions/47670477/reading-data-from-transaction-state-topic-in-kafka-0-11-0-1

KAFKA Internal transaction topic: __transaction_state

echo "exclude.internal.topics=false" > consumer.config

./bin/kafka-console-consumer.sh --consumer.config consumer.config --formatter "kafka.coordinator.transaction.TransactionLog\$TransactionLogMessageFormatter" --bootstrap-server x.x.x.x:9092,X.X.X.46:9092,X.X.X.47:9092 --topic __transaction_state --from-beginning

# 3.5 Performance metric monitoring

Benchmarking Apache Kafka: 2 Million Writes Per Second (On Three Cheap Machines) (opens new window)

Apache Kafka® Performance (opens new window)

Monitoring Kafka performance metrics (opens new window)

scripting approach to run performance tests (opens new window)

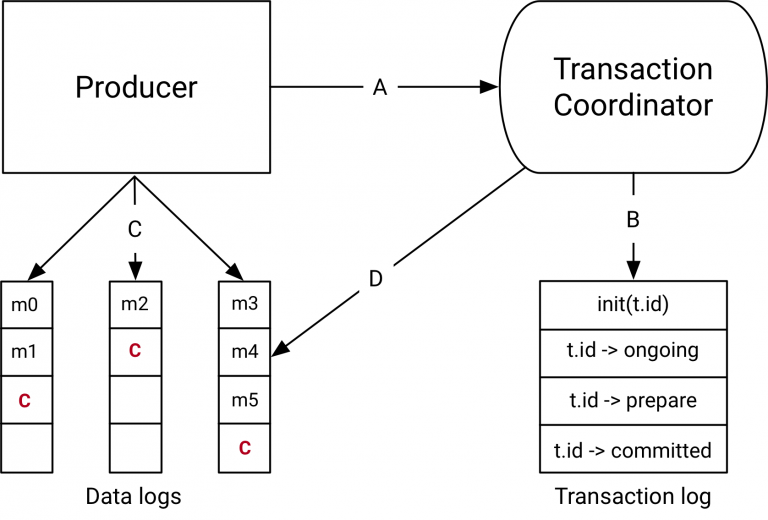

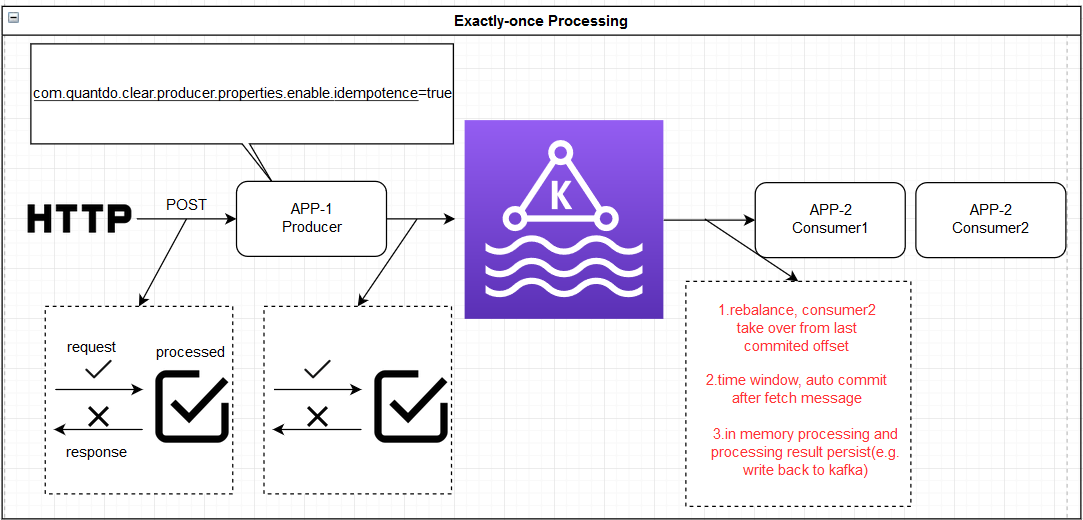

# 4. Exactly-Once 一致性语义

# 4.1 Exactly-Once-Message-Processing

there are only two hard problems in distributed systems:

- Guaranteed order of messages

- Exactly-once delivery

https://www.confluent.io/online-talk/introducing-exactly-once-semantics-in-apache-kafka/

https://www.confluent.io/blog/transactions-apache-kafka/

https://cwiki.apache.org/confluence/display/KAFKA/KIP-98+-+Exactly+Once+Delivery+and+Transactional+Messaging

https://blog.csdn.net/alex_xfboy/article/details/82988259

KIP-129: Streams Exactly-Once Semantics https://cwiki.apache.org/confluence/display/KAFKA/KIP-129%3A+Streams+Exactly-Once+Semantics

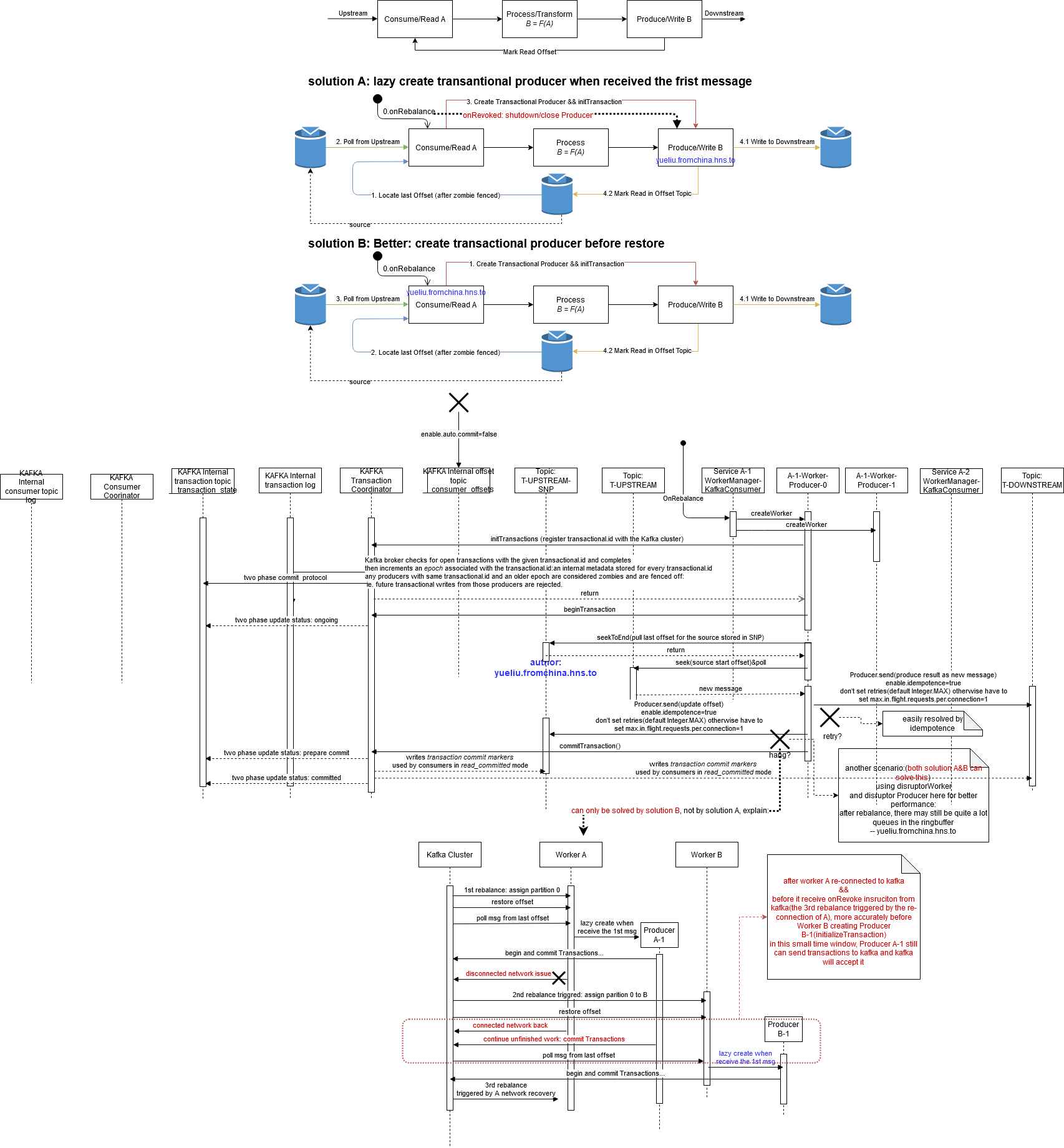

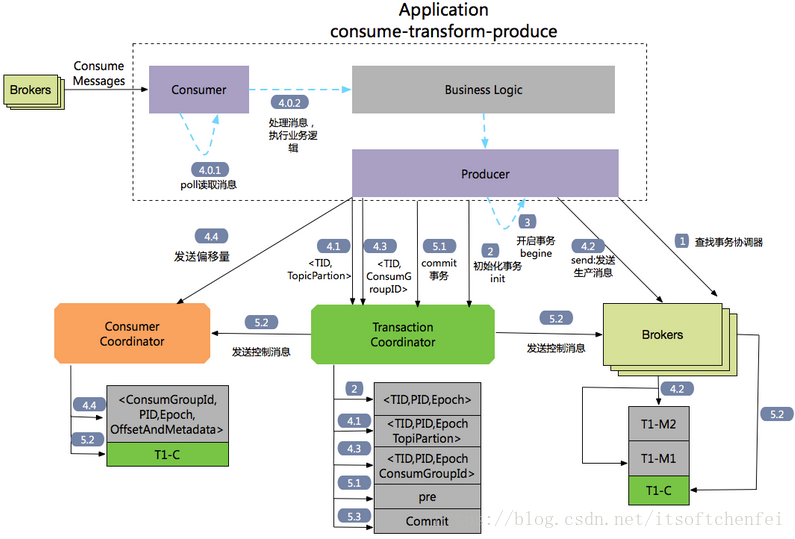

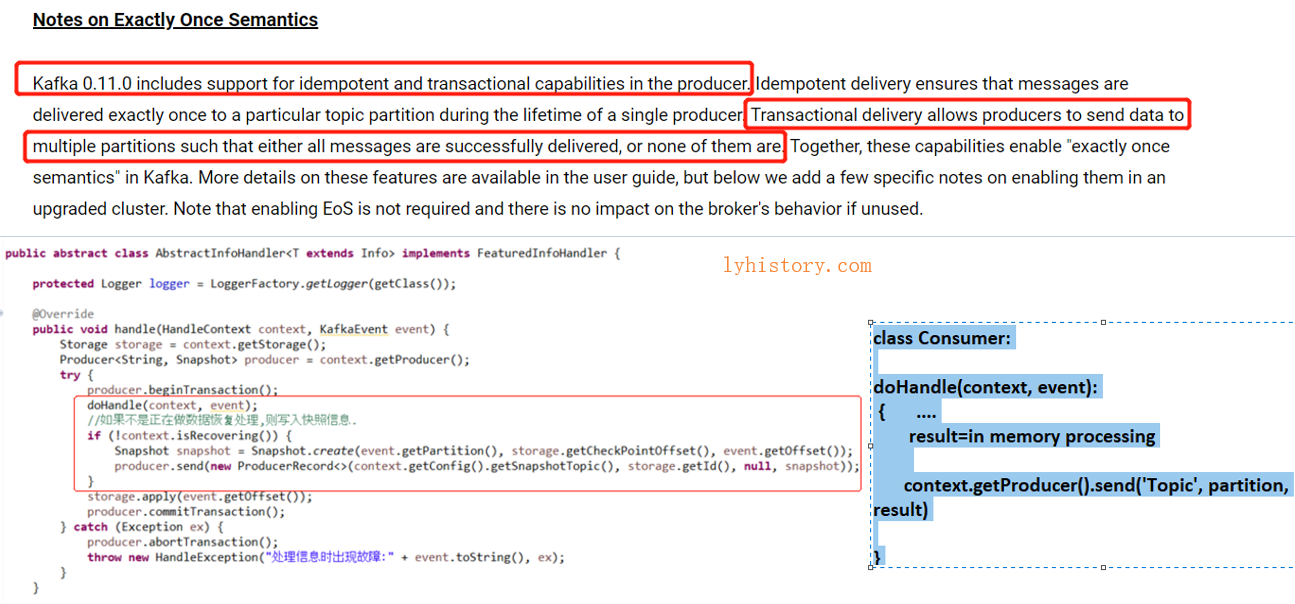

重点:

Producer:

开启幂等enable.idempotence和事务 transactional.id,并维护offset

Consumer:

设置isolation.level=read_committed(配合事务型Producer)

跟 kafka borker交互:

consumer根据kafka broker的rebalance来为每个partition创建producer,事务型 producer通过initTransaction操作来fence zombie(仍然是依靠kafka broker),从而屏蔽掉其他过时的producer(rebalance过程中被收回了partition的consumer之前所创建的producer)消费消息的可能性,然后consumer可以放心的restore

The first generation of stream processing applications could tolerate inaccurate processing. For instance, applications which consumed a stream of web page impressions and produced aggregate counts of views per web page could tolerate some error in the counts.

However, the demand for stream processing applications with stronger semantics has grown along with the popularity of these applications. For instance, some financial institutions use stream processing applications to process debits and credits on user accounts. In these situations, there is no tolerance for errors in processing: we need every message to be processed exactly once, without exception.

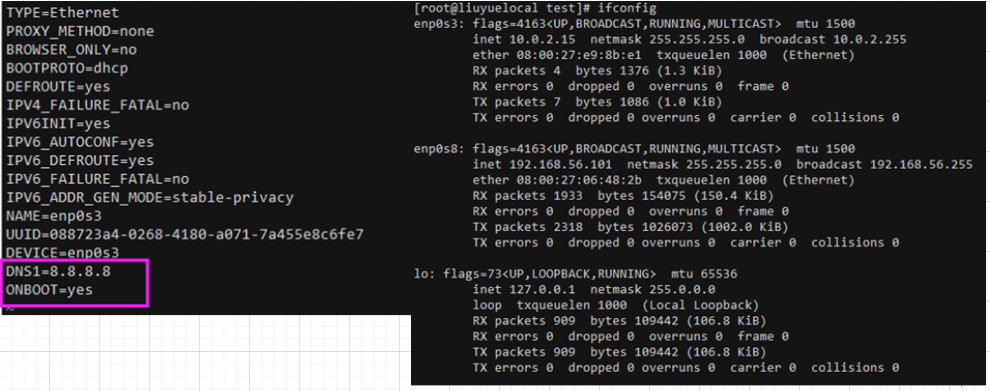

从某个Topic的某个Partition的数据流看 atomic read-process-write pattern(对于使用kafka stream 的应用来说就是 consume-transform-produce):

More formally, if a stream processing application consumes message A and produces message B such that B = F(A), then exactly once processing means that A is considered consumed if and only if B is successfully produced, and vice versa.

message A will be considered consumed from topic-partition tp0 only when its offset X is marked as consumed. Marking an offset as consumed is called committing an offset. In Kafka, we record offset commits by writing to an internal Kafka topic called the offsets topic. A message is considered consumed only when its offset is committed to the offsets topic

Thus since an offset commit is just another write to a Kafka topic, and since a message is considered consumed only when its offset is committed, atomic writes across multiple topics and partitions also enable atomic read-process-write cycles: the commit of the offset X to the offsets topic and the write of message B to tp1 will be part of a single transaction, and hence atomic.

设计:

每个application订阅一个主题,创建一个KafkaConsumer,发生rebalance之后根据分配的partition,每个partition都创建一个Transactional KafkaProducer

1.上游KafkaProducer:

保证不会重复发送:

- retry 重发场景:The producer.send() could result in duplicate writes of message B due to internal retries.

解决方法:This is addressed by the idempotent producer: enable.idempotence=true

- Reprocessing may happen if the stream processing application crashes after writing B but before marking A as consumed. Thus when it resumes, it will consume A again and write B again, causing a duplicate.

解决方法:使用transaction,将 write B和mark A consumed(self main consumer offset on kafka topic partition)作为一个transaction

- 挂起后又恢复场景,applications will crash or—worse!—temporarily lose connectivity to the rest of the system. Typically, new instances are automatically started to replace the ones which were deemed lost. Through this process, we may have multiple instances processing the same input topics and writing to the same output topics, causing duplicate outputs and violating the exactly once processing semantics. We call this the problem of “zombie instances.”

比如Producer发送Kafka消息(commitTransaction之前)突然挂起,然后transaction.id被新起的Producer占用,当之前的Producer又恢复的时候再commitTransaction会被Fence屏蔽掉,并且KafkaConsumer的read_committed隔离级别也可以保证不读取这些尚未提交的事务消息(The Kafka consumer will only deliver transactional messages to the application if the transaction was actually committed. Put another way, the consumer will not deliver transactional messages which are part of an open transaction, and nor will it deliver messages which are part of an aborted transaction.

when using a Kafka consumer to consume messages from a topic, an application will not know whether these messages were written as part of a transaction, and so they do not know when transactions start or end.

In short: Kafka guarantees that a consumer will eventually deliver only non-transactional messages or committed transactional messages. It will withhold messages from open transactions and filter out messages from aborted transactions.)

解决方法:通过 transaction.id 进行 zombie Fence

4)consumer group中多个服务发生rebalance后重启的场景:服务A处理Paritition 0和1,然后服务B进入引起rebalance,分配结果为B接管Partition 0,当B创建KafkaProducer处理partition 0时,首先要定位到partition 0之前被处理到哪里了,这种场景仍然是先使用 Transaction.id进行zombie fence,以防服务A的处理Partition 0的kafkaproducer还在工作(比如使用了disruptor有ringbuffer缓存,即使没有用disruptor这种东西,由于A和B是两个独立的进程,B也无法保证A的kafka producer没有继续再处理某条消息,所以先小人后君子),然后才读取offset,定位到之前A在Partition 0成功提交的最后一条消息(换句话就是被zombie fence之前成功的最后一条消息)

保证不漏发:

- KafkaProducer需要使用幂等性,并且千万不要设置 ETRIES_CONFIG = "retries",使用默认的Integer.MAX_VALUE

- 首先上游不要漏掉处理某个信息,此时如果上游的角色就是kafkaConsumer,问题进一步分解为:

- 上游不要漏掉某条kafka消息,这个完全由kafka保证

- 收到kafka消息后,处理到发送给下游的过程不要“漏”,即这个过程要保证鲁棒性和一致性

- 其次处理完后调用kafkaProducer接口send给下游,这个调用完全是kafka来保证不会漏

2.下游KafkaConsumer:

保证不要重复处理:

默认这个是由kafka offset来控制,_consumer_offset,但是在某些场景下使用 _consumer_offset 会有问题:

比如KafkaConsumer处理数据的过程包括作为KafkaProducer继续往下游发送消息,那么可以通过KafkaProducer的transaction处理,同时发送一个增量快照保存起当前的offset,这样就可以脱离开系统默认的__consumer_offset

另外一个比较隐含的场景就是上游出了问题,参考上游的场景3)和4)

保证不要丢失消息:

1)不要使用 auto commit,因为调用poll之后,消息offset可能已经被自动提交,但是此时如果没有处理完程序崩溃,再启动就会丢失消息;

而是采用 atomic read-process-write模型,将write和Mark read作为一个transaction,这样不会出现前面的: 消息被标记为已读,但是还没处理完程序崩溃,重启之后丢失的问题;

2)一旦producer成功将事务型的消息或非事务型的消息发送给了kafka(成功调用send api或commit transaction),接下来就是kafka的集群来保证消息不会丢掉了(比如关于replication high watermark)

如图中所示,solution A不完美,因为解决不了服务A因为网络跟kafka集群断开又恢复的场景下有可能在极短的时间窗口发生的重复消费问题,solution B是最完美的设计,充分利用了kafka的exactly once能力

详细解读参考:

- Consumer Indepth 跳转至 深入Kafka Consumer消费者解析

- Producer Indepth 跳转至 深入Kafka Producer生产者解析

# 4.2 Exactly-Once-Stream-Processsing

or stream processing applications built using Kafka’s Streams API, we leverage the fact that the source of truth for the state store and the input offsets are Kafka topics. Hence we can transparently fold this data into transactions that atomically write to multiple partitions, and thus provide the exactly-once guarantee for streams across the read-process-write operations.

processing.guarantee=exactly_once

Note that exactly-once semantics is guaranteed within the scope of Kafka Streams’ internal processing only; for example, if the event streaming app written in Streams makes an RPC call to update some remote stores, or if it uses a customized client to directly read or write to a Kafka topic, the resulting side effects would not be guaranteed exactly once.

详细解读参考: 深入Kafka Stream

# 5. 集成开发

# apache-kafka

org.apache.kafka.kafka-client

https://docs.confluent.io/clients-kafka-java/current/overview.html

https://www.baeldung.com/kafka-exactly-once

https://kafka.apache.org/20/javadoc/org/apache/kafka/clients/producer/KafkaProducer.html

https://dzone.com/articles/kafka-producer-and-consumer-example

默认配置:

https://kafka.apache.org/documentation.html#configuration https://kafka.apache.org/22/javadoc/org/apache/kafka/clients/consumer/ConsumerConfig.html

# springboot spring-kafka

https://www.baeldung.com/spring-kafka

org.springframework.kafka包含:

- spring-context

- spring-messaging

- spring-tx

- spring-retry

- kafka-clients

# 6. Diving into Kafka 设计内幕

理想情况下,我们使用类似kafka这样成熟的产品,一般只需要将kafka当做黑盒,然后通过kafka开放的API来达到跟kafka交互的exactly-once,但实际上为了更好的理解或者有时候kafka本身的很多细节也会影响到使用性能甚至是可用性,我们常常还是要打开黑盒,进入其代码内部或者阅读其代码设计文档

# Sourcecodes

Kafka Client sourcecode in Java (opens new window)

kafka Server sourcecode in Scala (opens new window)

KAFKA PROTOCOL GUIDE https://kafka.apache.org/protocol#The_Messages_WriteTxnMarkers Difference between kafka batch and kafka request https://stackoverflow.com/questions/74088393/difference-between-kafka-batch-and-kafka-request

# leader epoch & high watermark

Kafka is a highly available, persistent, durable system where every message written to a partition is persisted and replicated some number of times (we will call it n). As a result, Kafka can tolerate n-1 broker failures, meaning that a partition is available as long as there is at least one broker available. Kafka’s replication protocol guarantees that once a message has been written successfully to the leader replica, it will be replicated to all available replicas.

https://rongxinblog.wordpress.com/2016/07/29/kafka-high-watermark/

https://cwiki.apache.org/confluence/display/KAFKA/KIP-101+-+Alter+Replication+Protocol+to+use+Leader+Epoch+rather+than+High+Watermark+for+Truncation Kafka数据丢失及最新改进策略 http://lday.me/2017/10/08/0014_kafka_data_loss_and_new_mechanism/ kafka ISR设计及水印与leader epoch副本同步机制深入剖析-kafka 商业环境实战 https://juejin.im/post/5bf6b0acf265da612d18e931 leader epoch与watermark https://www.cnblogs.com/huxi2b/p/7453543.html High watermark If you want to improve the reliability of the data, set the request.required.acks = -1, but also min.insync.replicas this parameter (which can be set in the broker or topic level) to achieve maximum effectiveness. https://medium.com/@mukeshkumar_46704/in-depth-kafka-message-queue-principles-of-high-reliability-42e464e66172

# 副本 replication 等细节

https://www.cnblogs.com/luozhiyun/p/12079527.html

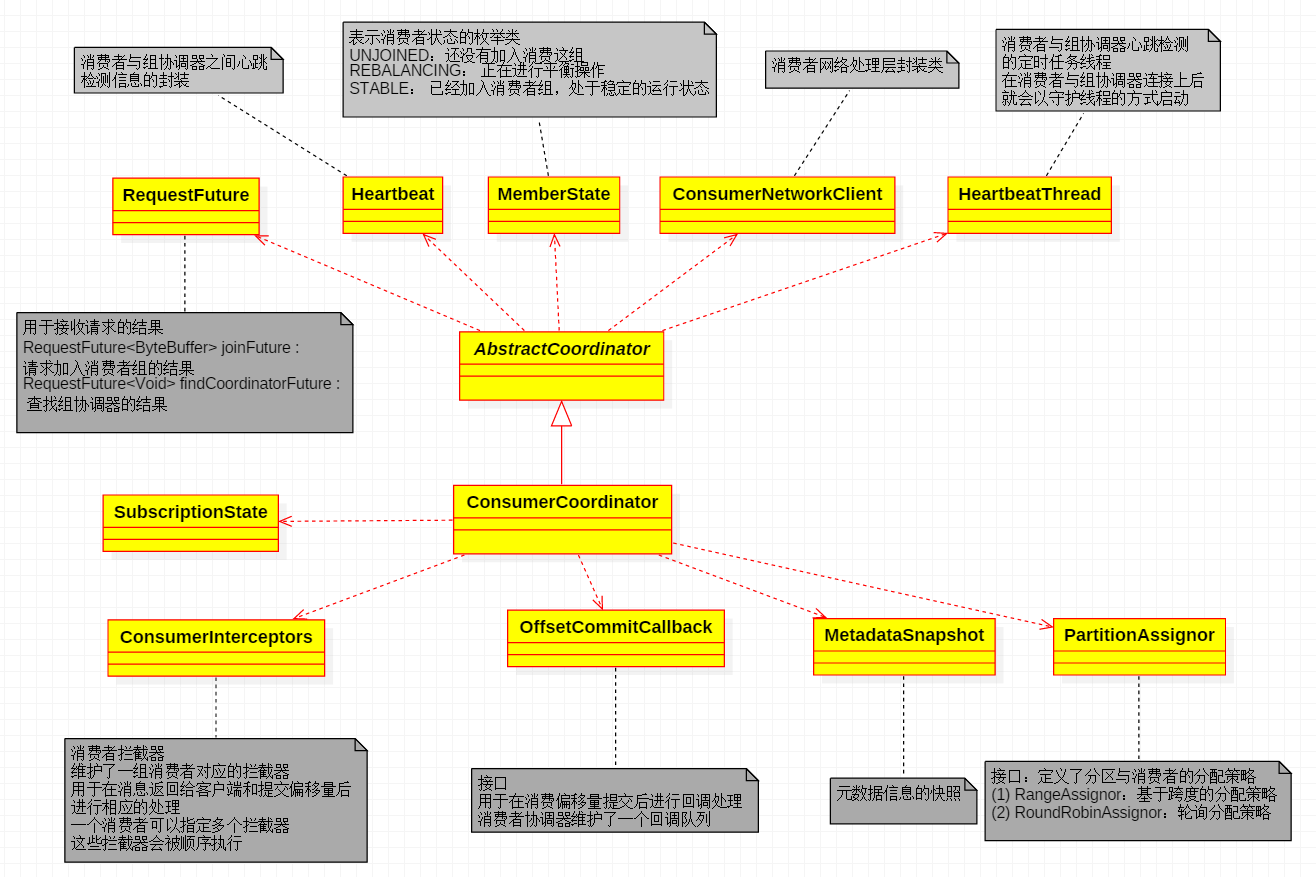

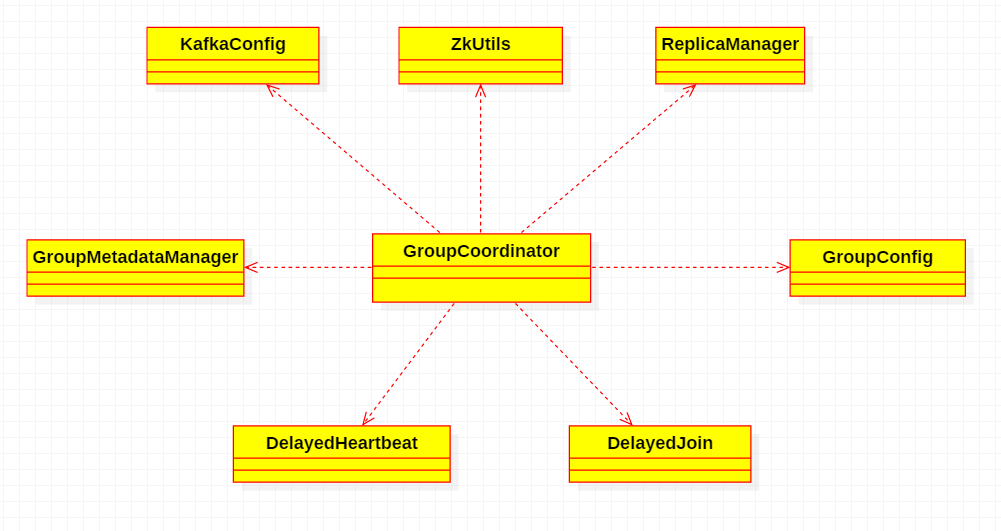

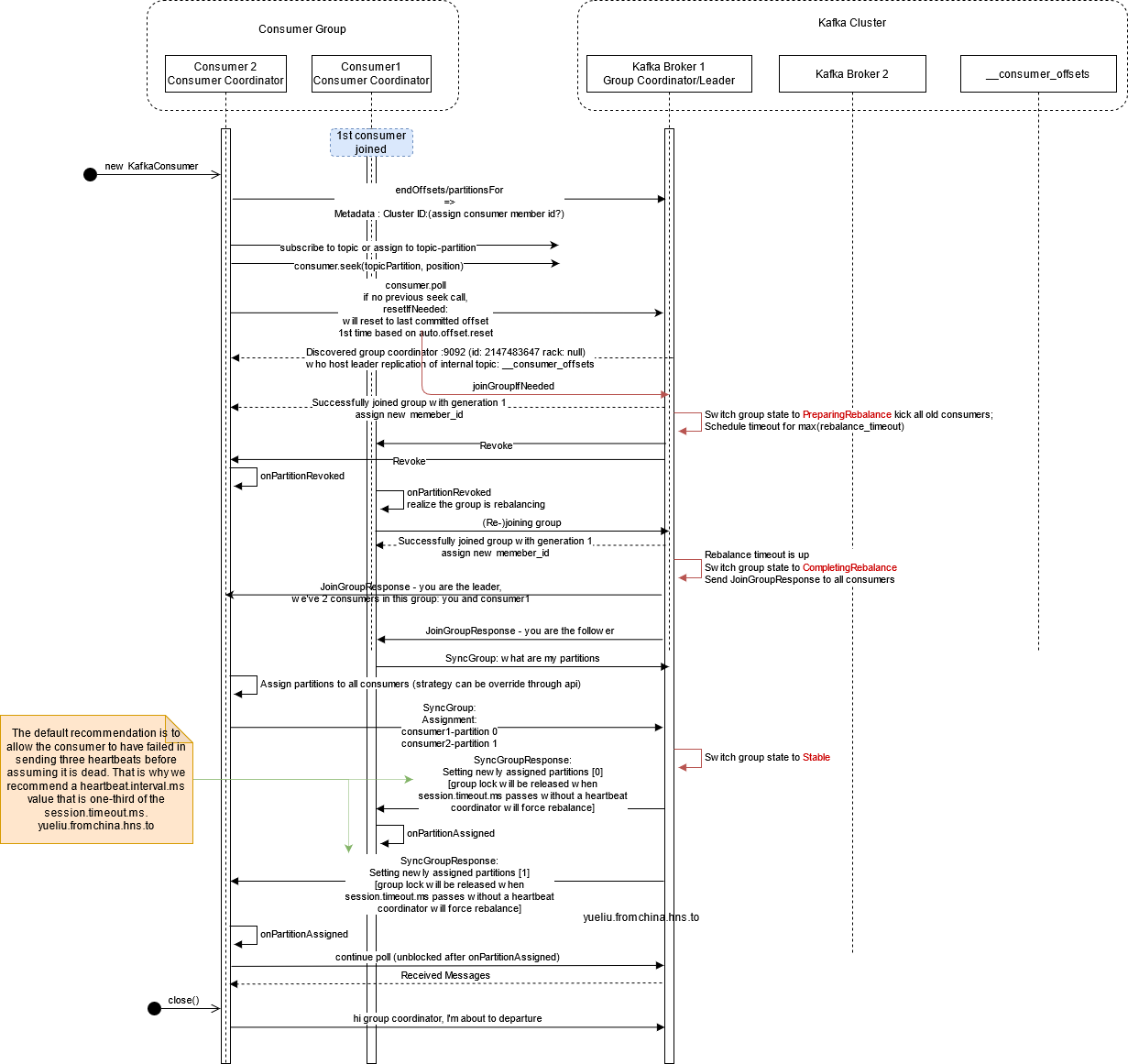

# Consumer coordinator & Group coordinator & Rebalance

https://matt33.com/2017/10/22/consumer-join-group/

https://cloud.tencent.com/developer/news/19958

While the old consumer depended on Zookeeper for group management, the new consumer uses a group coordination protocol built into Kafka itself. For each group, one of the brokers is selected as the group coordinator. The coordinator is responsible for managing the state of the group. Its main job is to mediate partition assignment when new members arrive, old members depart, and when topic metadata changes. The act of reassigning partitions is known as rebalancing the group.

https://www.confluent.io/blog/tutorial-getting-started-with-the-new-apache-kafka-0-9-consumer-client/

keyword:

GroupCoordinator 服务端 borker(Kafka 会依据请求的 group 的 ID 查找对应 offset topic [consumer_offsets?]分区 leader 副本所在的 broker 节点:

每个 Group 都会选择一个 Coordinator 来完成自己组内各 Partition 的 Offset 信息,选择的规则如下: 1. 计算 Group 对应在 __consumer_offsets

上的Partition 2. 根据对应的Partition寻找该Partition的leader所对应的Broker,该Broker上的Group Coordinator即就是该Group的Coordinator)ConsumerCoordinator

Consumer leader/follower 第一个申请加入group的consumer作为leader,在服务端确定好分区分配策略之后,具体执行分区分配的工作则交由 leader 消费者负责,并在完成分区分配之后将分配结果反馈给服务端